How should scientists use statistical methods to do practical data analysis? There are differing schools of thought, and in the main division between them I am a Bayesian rather than a frequentist. Unfortunately the word ‘Bayesian’ is itself in dispute, for frequentists often construct things called estimators, and misleadingly speak of ‘Bayesian’ ways to do that.

THE WAY FORWARD.

I consider myself an ‘objective Bayesian.’ Even that term is contested, so I shall outline my view using undisputed terminology. Consider a number that represents how strongly one binary (true or false) proposition would imply another, according to the relations known between their referents. (For example, if I know that a bag contains one pool ball of each color, I can consider how strongly that knowledge implies that the next ball pulled out of the bag is red.) Binary propositions obey rules for combining them, known as Boolean algebra and familiar in computer logic. This algebra of the propositions constrains the numbers attached to them to obey the so-called sum and product rules, the ‘laws of probability.’ These rules had been known much earlier, but R.T. Cox was the first to derive them from Boolean algebra, in 1946. Reasoning about propositions under uncertainty is known as inductive logic; Cox’s analysis shows that it is an extension of deductive logic. The degree of implication is what you need in any problem involving uncertainty. (How strongly do the data imply that a parameter takes a certain value?) Since this quantity obeys the ‘laws of probability’ it is natural to call it probability, but where other schools dispute the meaning of the word it is best to get on with calculating degrees of implication, as the debate is sterile. Consistency with Boolean logic and universal applicability are the highest criteria we can appeal to.
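
The sketch below shows the sum and product rules at work. It assumes a hypothetical bag holding one ball in each of four colors; the number of colors is an illustrative choice. Two balls are drawn without replacement, and every ordered pair gets equal weight on grounds of symmetry. Degrees of implication are then found by counting, and rational arithmetic lets both rules be checked exactly.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical bag: one ball of each of these colors (an illustrative assumption).
COLORS = ["red", "yellow", "blue", "green"]

# Every ordered (first draw, second draw) pair, drawing without replacement.
# Nothing distinguishes one ordering from another, so each gets equal weight.
OUTCOMES = list(permutations(COLORS, 2))


def prob(event, given=lambda outcome: True):
    """Degree of implication p(event | given), found by counting equally weighted outcomes."""
    allowed = [o for o in OUTCOMES if given(o)]
    return Fraction(sum(1 for o in allowed if event(o)), len(allowed))


def second_is_red(o):  # proposition A
    return o[1] == "red"


def first_not_red(o):  # proposition B
    return o[0] != "red"


def not_a(o):  # proposition "not A"
    return not second_is_red(o)


def a_and_b(o):  # proposition "A and B"
    return second_is_red(o) and first_not_red(o)


sum_rule = prob(second_is_red) + prob(not_a)  # p(A) + p(not A)
product_lhs = prob(a_and_b)  # p(AB)
product_rhs = prob(second_is_red, given=first_not_red) * prob(first_not_red)  # p(A|B) p(B)

print(prob(second_is_red), sum_rule, product_lhs, product_rhs)  # 1/4 1 1/4 1/4
```

Exact fractions are used so that the two sides of each rule can be compared for equality without rounding error.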

AVOIDING CONFUSION.

Since this view is based on how strongly one proposition implies another, it does not recognize ‘unconditional’ probabilities that a proposition is true: every probability is conditioned on some implying proposition. Also, the messy issue of whether probability should be regarded as ‘degree of belief’ does not intrude. In continuum problems, densities are defined via propositions such as “the parameter lies between x and x+dx.”
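
Here is a small sketch of that interval definition. Purely for illustration, it assumes that the information about the parameter is summarized by a standard Gaussian. The probability of the binary proposition “the parameter lies between x and x+dx,” divided by dx, approaches the density at x as dx shrinks.

```python
import math


def normal_cdf(x, mu=0.0, sigma=1.0):
    """Probability that the parameter lies below x, for an assumed Gaussian."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density at x for the same assumed Gaussian."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


x, dx = 0.7, 1e-4
# Probability of the proposition "the parameter lies between x and x + dx".
p_interval = normal_cdf(x + dx) - normal_cdf(x)
# The density is this probability per unit width, in the limit of small dx.
density_estimate = p_interval / dx
print(density_estimate, normal_pdf(x))
```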

GETTING STARTED.

The two laws of probability imply Bayes’ theorem, which states how to revise the degree of implication of a proposition of interest whenever we take further information into account by putting it into the ‘implying’ proposition. But where do we begin? Further principles give the answer; the situation is not anarchy. One principle is symmetry: if, for example, you have no information about the location of a bead on a flat loop of wire, then every location must be equally likely, giving a uniform probability density. Bayesians are still learning how to assign probabilities from information of subtle relevance. When such probabilities are assigned before Bayes’ theorem is used to incorporate data obtained by design, they are called prior probabilities, assigned from ‘prior information.’
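
Write p(A|B) for how strongly B implies A. The step from the two laws to Bayes’ theorem then takes two lines. In this sketch C stands for the prior information and B for the new information, and Ā means “not A.” The product rule is written both ways round, and the two rules together supply the normalizing denominator.

```latex
\begin{aligned}
p(AB \mid C) &= p(A \mid BC)\, p(B \mid C) = p(B \mid AC)\, p(A \mid C) \\[4pt]
\Rightarrow\quad p(A \mid BC) &= \frac{p(B \mid AC)\, p(A \mid C)}{p(B \mid C)},
\qquad p(B \mid C) = p(B \mid AC)\, p(A \mid C) + p(B \mid \bar{A}C)\, p(\bar{A} \mid C).
\end{aligned}
```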

RIGHT AND WRONG WAYS.

That Bayesian methods take prior information into account is a vital feature, not a defect. To see this, suppose that before measuring a parameter you have information that its value is definitely not in some region of parameter space. (In particular, you might be certain of its value, so that it cannot fall anywhere else; you are measuring it only to convince someone else.) Your prior density for it is zero in that region. Bayes’ theorem gives the posterior density by multiplying the prior by the likelihood for the data and re-normalising the result. So the posterior is also zero in that region, as intuition demands. Frequentist methods (known as sampling theory) nevertheless generally assign non-zero probability in that ‘forbidden’ region! Frequentist methods were devised ad hoc for use in particular problems, and they are now deployed in much off-the-shelf statistical software. In their problems of origin their answers are often close to the Bayesian result (a tribute to their inventors), but any deviation from that result is problematic. Worryingly, sampling theory is commonly applied in drug testing and industrial quality control (e.g., aircraft construction). Bayesian software is superior, and is also applicable to further questions such as: given a blurred image of an object, what is it most likely to look like?
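
Here is a minimal numerical sketch of this argument. The assumptions are purely illustrative: a parameter θ known in advance to be no smaller than 2, and a single measurement of 1.5 with Gaussian noise of width 1. The posterior is computed on a grid as prior times likelihood, then renormalized. For contrast, the code also forms the textbook 95% interval x ± 1.96σ, one common sampling-theory recipe for this problem.

```python
import math

# Illustrative assumptions: theta lies on a grid over [0, 10], and prior
# information rules out theta < 2, so the prior is zero there.
grid = [i * 0.01 for i in range(1001)]
prior = [0.0 if t < 2.0 else 1.0 for t in grid]  # unnormalized, flat elsewhere

# Assumed data: one reading x_obs with Gaussian noise of known width sigma.
x_obs, sigma = 1.5, 1.0
likelihood = [math.exp(-0.5 * ((x_obs - t) / sigma) ** 2) for t in grid]

# Bayes' theorem on the grid: multiply prior by likelihood, then renormalize.
unnormalized = [p * like for p, like in zip(prior, likelihood)]
total = sum(unnormalized)
posterior = [u / total for u in unnormalized]

forbidden_mass = sum(p for t, p in zip(grid, posterior) if t < 2.0)
print(forbidden_mass)  # 0.0: zeros in the prior stay zeros in the posterior

# For contrast: the textbook 95% confidence interval x_obs +/- 1.96 sigma.
interval = (x_obs - 1.96 * sigma, x_obs + 1.96 * sigma)
print(interval)  # its lower end lies well below 2, inside the excluded region
```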

RANDOMNESS?

Bayesian methodology is as applicable to one-off situations, such as image reconstruction or estimating the mass of the universe, as it is to repeated trials. (A repeated trial is a one-off event in a large enough space.) In coin tossing and other repeated trials, the value of a degree of implication is often numerically equal to the relative frequency (proportion) of the corresponding outcome, but the concepts are distinct. The Bayesian view downplays the word ‘random,’ for when people speak of a ‘random process’ (perhaps yielding a ‘random number’) they mean a process which they believe nobody can work out how to predict. But that statement is as much about human ingenuity as about the process itself. Computers were banned from casinos…
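
Laplace’s rule of succession shows that the two concepts are numerically close but distinct. As an illustration, assume that nothing is known about a coin’s bias beyond its lying between 0 and 1 (a uniform prior). The probability that the next toss lands heads, after h heads in n tosses, is then (h+1)/(n+2). The sketch compares this with the relative frequency h/n.

```python
from fractions import Fraction


def prob_next_heads(heads, tosses):
    """Laplace's rule of succession: probability of heads on the next toss,
    given the counts so far and a uniform prior over the coin's bias."""
    return Fraction(heads + 1, tosses + 2)


for heads, tosses in [(3, 4), (30, 40), (3000, 4000)]:
    frequency = Fraction(heads, tosses)
    p = prob_next_heads(heads, tosses)
    print(tosses, float(frequency), float(p), float(p - frequency))
```

The gap shrinks as the number of tosses grows, yet the two numbers answer different questions. Before any tosses the relative frequency is undefined, while `prob_next_heads(0, 0)` gives 1/2.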

INFERENCE AND TIME.

As new information becomes available, Bayes’ theorem can be applied to make better inferences about the future (i.e., prediction) or about the past (reconstructing history). In applications such as robotics, incoming information often comprises a real-time datastream.
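
Here is a sketch of updating on a datastream. For illustration only, it assumes a robot estimating a fixed quantity from readings with Gaussian noise of known variance, starting from a broad Gaussian prior. Each reading is folded in with Bayes’ theorem as it arrives, so the posterior after one reading serves as the prior for the next. The final answer matches processing all the readings at once. The same structure, with dynamics added, underlies the Kalman filter.

```python
def update(mean, var, reading, noise_var):
    """Fold one noisy reading into a Gaussian belief about a fixed quantity."""
    precision = 1.0 / var + 1.0 / noise_var  # precisions (inverse variances) add
    new_var = 1.0 / precision
    new_mean = new_var * (mean / var + reading / noise_var)
    return new_mean, new_var


# Illustrative assumptions: broad prior, known noise variance, made-up readings.
prior_mean, prior_var, noise_var = 0.0, 100.0, 4.0
stream = [2.1, 1.7, 2.4, 1.9, 2.2]

mean, var = prior_mean, prior_var
for reading in stream:
    # The posterior after each reading becomes the prior for the next one.
    mean, var = update(mean, var, reading, noise_var)

# The same inference done in one batch, for comparison.
batch_var = 1.0 / (1.0 / prior_var + len(stream) / noise_var)
batch_mean = batch_var * (prior_mean / prior_var + sum(stream) / noise_var)

print(mean, var)
print(batch_mean, batch_var)
```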

MAKING INFORMED CHOICES.

Calculating probabilities is part of thinking; but how then to act in the light of the result? To decide what to do, a robot requires further software. This is decision theory, which combines probabilities of the various conceivable outcomes with the payoffs or penalties attached to them (as in the placing of bets). Probability theory must be deployed before decision theory, because you can’t decide what to do until you know what to believe.
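
Here is a minimal sketch of the decision step, in which every number is an assumption. The bet is won with probability 0.25, pays 5 units if it wins and costs 1 unit otherwise. The probability is fixed first. Only then is it combined with the payoffs, and the action with the larger expected payoff is chosen.

```python
# Assumed inputs: the probability comes from probability theory,
# the payoffs (hypothetical) from the problem at hand.
p_win = 0.25

# action -> (payoff if the bet wins, payoff if it loses)
payoffs = {
    "accept bet": (5.0, -1.0),
    "decline bet": (0.0, 0.0),
}


def expected_payoff(action):
    """Probability-weighted average of the payoffs for one action."""
    if_win, if_lose = payoffs[action]
    return p_win * if_win + (1.0 - p_win) * if_lose


best = max(payoffs, key=expected_payoff)
print(best, expected_payoff(best))  # accept bet 0.5
```

Maximizing expected payoff is the usual criterion in decision theory. The point here is only the order of operations: the probability is an input to the decision, not an output of it.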

HOW UNCERTAINTY BECAME MATHEMATICAL.

Probability was quantified several centuries ago. The ideas that it puts into mathematics had previously been developed mainly in the law, because law implicitly involves the probability that someone is guilty, given the evidence. The earliest problems treated mathematically were from gambling, as these problems were beyond intuition but simple enough to be tackled by the pioneers.

ANOTHER SUMMARY.

It’s easier done Bayesian – the harder part is unpicking what you’ve been taught that’s wrong.

Forget ‘probability’ for a moment and consider a number p(A|B) that represents how strongly B implies A, where A and B are binary propositions (true or false) – a measure of how strongly A is implied to be true upon supposing that B is true, according to relations known between their referents.

Degree of implication is actually what you want in every problem involving uncertainty.

From the Boolean algebra obeyed by propositions it is possible to derive algebraic relations for the degrees of implication of the propositions. These relations turn out to be the sum and product rules – the ‘laws of probability.’

So let’s call degree of implication ‘probability’.

But if frequentists (or anybody else) object to this naming then bypass them. Simply calculate the degree of implication in each problem, because it’s what you want in order to solve it, and call this quantity whatever name you like. The concept is what matters, not its name. In defining a theory of probability there are no higher criteria than consistency and universality.

In this viewpoint there are no worries over ‘belief’ or imaginary ensembles, and all probabilities are automatically conditional. The confusing word ‘random’ is downplayed.

The two laws of probability – long known – were first derived from Boolean algebra by R.T. Cox in 1946. (Cox did not use the rhetorical device advocated here about the name of the quantity.) Kevin Knuth has since done this better. The key aspect of Boolean algebra is associativity, which generates a functional equation whose solution is the product rule.
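
The associativity argument can be outlined in the form standard in textbook treatments of Cox’s theorem. Suppose p(AB|E) is some function F of p(A|BE) and p(B|E). Evaluating p(ABC|D) by grouping the conjunction as (AB)C and as A(BC) must give the same answer, and this yields a functional equation for F. The general solution quoted in the last lines assumes smoothness conditions of the kind Cox imposed. This is a sketch, not a full proof.

```latex
\begin{aligned}
p(AB \mid E) &= F\big(p(A \mid BE),\; p(B \mid E)\big)
  && \text{(assumed form, any } A, B, E\text{)} \\
p\big((AB)C \mid D\big) &= F\big(p(AB \mid CD),\; p(C \mid D)\big) = F\big(F(x, y),\; z\big) \\
p\big(A(BC) \mid D\big) &= F\big(p(A \mid BCD),\; p(BC \mid D)\big) = F\big(x,\; F(y, z)\big) \\
\text{with}\quad x &= p(A \mid BCD), \quad y = p(B \mid CD), \quad z = p(C \mid D) \\[4pt]
F\big(F(x, y),\; z\big) &= F\big(x,\; F(y, z)\big)
  && \text{(associativity)} \\
w\big(F(x, y)\big) &= w(x)\, w(y)
  && \text{(general solution, } w \text{ monotonic)} \\
p(AB \mid E) &= p(A \mid BE)\, p(B \mid E)
  && \text{(after regraduating } p \to w(p)\text{)}
\end{aligned}
```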

So we use Boolean algebra to manipulate the propositions, and then use the sum and product rules to relate their probabilities. Many books have the word ‘Bayesian’ in the title but fail to understand that the arguments of probabilities are binary propositions. You can check a book quickly by seeing if R.T. Cox is prominent in the index. Two books that get it right are Bayesian Logical Data Analysis for the Physical Sciences by Phil Gregory and Data Analysis: A Bayesian Tutorial by Devinder Sivia.

Bayes’ theorem follows immediately from the sum and product rules. It shows how to incorporate new information (phrased propositionally) into a probability. You want to do this whenever you get experimental data relating to a question of interest. But how to assign the probability that you update, before the experiment? This is the issue of ‘prior probabilities.’ Sampling theorists/frequentists have used it as a stick to beat Bayesians with, but the neglect of prior information is actually a weakness of their own methods, not a strength. (In some problems, your prior information tells you that a particular parameter cannot take certain values, yet sampling-theoretical methods still generally assign nonzero probability to those values.) Today we don’t know how to encode (prior) information of arbitrary type, but that is a matter for research, not castigation, and only Bayesians are looking. (When you have decent data it scarcely matters in practice.) Symmetry is one important principle: in the elementary case of the probability for the location of a bead on a horizontal circle of wire and no further information, the probability has rotational invariance with respect to angle around the wire, measured from its center. It is therefore uniform in angle.
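
The parenthetical claim that the prior scarcely matters with decent data can be checked in a simple case. The sketch assumes a coin-bias problem with two different Beta priors. One is uniform; the other is a hypothetical prior proportional to θ^4, which leans towards heads. With a Beta prior the posterior is again Beta, so posterior means have a closed form. The gap between the two answers is large after 10 tosses and small after 1000.

```python
from fractions import Fraction


def posterior_mean(a, b, heads, tails):
    """Posterior mean of a coin's bias: a Beta(a, b) prior updated by the
    counts gives a Beta(a + heads, b + tails) posterior."""
    return Fraction(a + heads, a + b + heads + tails)


uniform_prior = (1, 1)  # Beta(1, 1): flat over the bias
leaning_prior = (5, 1)  # Beta(5, 1): density proportional to theta**4 (hypothetical)

for heads, tails in [(3, 7), (300, 700)]:
    m_uniform = posterior_mean(*uniform_prior, heads, tails)
    m_leaning = posterior_mean(*leaning_prior, heads, tails)
    print(heads + tails, float(m_uniform), float(m_leaning), float(abs(m_uniform - m_leaning)))
```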

YET ANOTHER SUMMARY.

Logic is about propositions that are either true or false, known therefore as binary propositions. The challenge is to work out whether a proposition of interest is true or false when you know that certain other propositions, related to the one of interest, are true or false.

Often this is possible. Sometimes, though, the propositions you know don’t give you enough information to decide whether the proposition of interest is true or false – even though they are clearly relevant.

What then? If you restrict yourself to strict deductive logic, you can say nothing more; you want to know if the proposition of interest is true or false and you can’t – end of story. But the very relevance of the propositions you do know about suggests that we can do better. If we admit the concept of how strongly the truth of one proposition implies the truth of another then we can go further. This quantity is on a scale from 0 (what you know implies the proposition is definitely false) to 1 (what you know implies the proposition is definitely true). Of course these extreme cases correspond to deductive logic, but now there is a scale in between.

In 1946 it was shown that this quantity obeys two well-known mathematical rules, known as the laws of probability. On these grounds it would be good to call this quantity the probability of the proposition being true, but unfortunately there is longstanding argument about the meaning of the word ‘probability.’ Suffice it to say that the quantity I have just defined is what you actually need in every real problem, and it obeys “the laws of probability.”

I also assert that inductive logic, done correctly, is probability theory when the latter is understood in this way. Unfortunately there is a great deal of confusion, both terminological and logical.

David Hume rejected inductive logic, ultimately because it can’t provide certainty. Of course it can’t – its whole point is to do the best you can when you are short of the information needed for certainty.

When you want the best answer to a question in the face of uncertainty, this is how to proceed. If, for example, you wish to test a scientific theory (i.e., is it true or false?), then you do experiments to get data, which invariably contain uncertainties such as noise. (The value of a quantity can be decomposed into binary propositions, such as “the die shows face 6,” and similarly for the other faces.) The data shift the probability for that theory. The hard part in this situation is thinking up theories in the first place; it is routine to test new scientific theories but extremely difficult to think of them, as they have to be consistent with the results of all previous experiments yet predict differently from prevailing theories in unexplored situations.
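
Here is a minimal sketch of how data shift the probability for a theory, using the die example. Each roll is reduced to the binary proposition “this roll shows face 6” or its negation. Two hypotheses are compared: a fair die, and a hypothetical loaded die that shows 6 half the time. The equal prior probabilities and the loaded die’s bias are assumptions for illustration. Working in log-odds keeps the arithmetic stable for long runs of data.

```python
import math


def prob_fair(sixes, rolls, prior_fair=0.5, p_six_loaded=0.5):
    """Posterior probability that the die is fair rather than loaded, given the
    number of rolls showing face 6. The binomial coefficient is the same under
    both hypotheses, so it cancels and is omitted."""
    log_like_fair = sixes * math.log(1 / 6) + (rolls - sixes) * math.log(5 / 6)
    log_like_loaded = sixes * math.log(p_six_loaded) + (rolls - sixes) * math.log(1 - p_six_loaded)
    log_odds = math.log(prior_fair / (1 - prior_fair)) + log_like_fair - log_like_loaded
    return 1 / (1 + math.exp(-log_odds))


print(prob_fair(2, 12))  # few sixes: the data favor the fair die
print(prob_fair(5, 12))  # many sixes: the data favor the loaded alternative
```

With no rolls at all, `prob_fair(0, 0)` returns the prior, 0.5. Each roll then moves the probability according to how much better one hypothesis predicted it than the other.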

More From Anton Garrett

Intersections Of Probability, Philosophy, And Physics

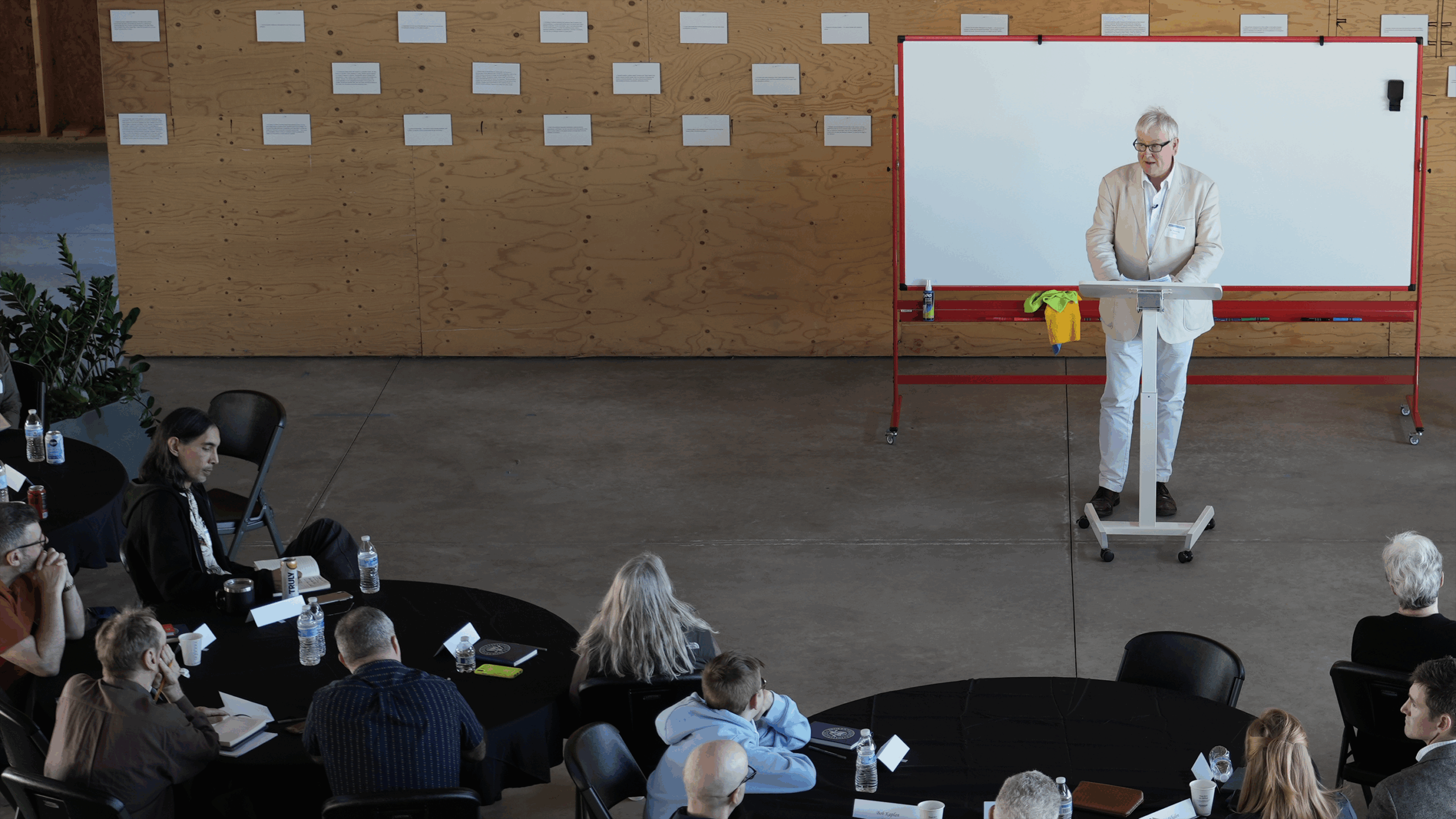

At the 2024 BSI Epistemology Camp Anthony Garrett delivers a comprehensive lecture, touching on several major themes centered around the principles of probability, scientific methods, and the philosophical underpinnings of science.

Watch

Anthony Garrett has a PhD in physics (Cambridge University, 1984) and has held postdoctoral research contracts in the physics departments of Cambridge, Sydney and Glasgow Universities. He is Managing Editor of Scitext Cambridge (www.scitext.com), an editing service for scientific documents.