Transcript

Speaker1: [00:00:00] Two of my speakers are on this crisis subject. My third is a veteran of these wars, and I had him here to talk about something non-dire, because he’s been fighting this fight. Dr. Noakes paved the way for us on hydration. We got to fix that mess only because of all the work you did in that space, and thank you for that. That was really exciting, and a wonderful thing to really make a contribution and fix some broken science that was broken by soda pop. But Jeff’s piece is really about the innumeracy of postmodern science. And that isn’t by necessity fraud, though a good chunk of it is. Both Dr. Fettke and Dr. Noakes are in fights for their medical licenses for telling the truth about carbohydrate. Fighting for their medical license means a formal legal challenge in the governing boards, right? That’s fair to say. They’re trying to take their licenses from them. And I see this as part of the attempt to shut us up, to stop us. But what we’ve got here is fascism. That word’s used too much, and it’s used with a lot of hyperbole, and so it stuck in my craw. I looked it up again today, and it fits absolutely perfectly what’s happening. The postmodern science: it’s innumerate and it’s fascist, and it has no problem lying. Nothing. Dad, Dr. Glassman, come on up.

Speaker2: [00:02:03] Any questions? Greg gave me about 15 hooks into my talk. It’s hard to say where I should begin. Well, the first thing I learned today is that our hostess for this, her name is pronounced Karen. And I appreciate that. At least in her home country, she said she answers to Karen as well. Greg mentioned the Devor study. I reviewed that for him. He also mentioned the CHAMP paper. I reviewed that for him, too. In fact, you may have seen the gigantic poster that’s been made of my response to the CHAMP paper, and it hangs in a whole bunch of boxes around the country, I guess. I have one at home, but no place to put it. I can’t even unfurl it in the house. My talk is about the crisis in science. Let’s see if I can make this thing work. That crisis occurred in January of last year, when the American Statistical Association published a position paper that in fact nullifies the null hypothesis and its use as it has been used in so much of the science that Greg’s been talking about. The event is of such high significance in part because the controversy has raged over the use of that null hypothesis to produce statistical significance and p-values since it was first released back in about 1934. It’s one of a cascade of events that have shaped where we are in science and where we are in statistics, and the fact that science and statistics have taken two separate paths to the present day.

Speaker2: [00:04:32] And it remains to be seen whether the ASA’s disapproval of the p-value (prohibition is too strong a word) actually turns into anything. I want to trace out for you where the definitions of science arose and where the statistics arose. It covers 2,500 years, and I’m going to do it in the 45 minutes, and we’re not going to look at viewgraphs for each one of these events. But the outline of my talk is in this cascade of major events that lead up to the present. This chart contains a couple of quotations from Wasserstein, who is on the board of the American Statistical Association and maybe the most senior man on it. He was the lead man of the team that wrote the ASA’s position paper. And this issue of reproducibility that Greg talked about is the featured concern of the American Statistical Association. It comes on the heels of two or three professional journals that have banned the use of the p-value if you want to get published in their journals. So I’m going to get right down to the specifics of what the ASA said. See if I can click on the right one. Okay. These are actual quotes from the ASA paper on its disapproval. The very first bullet says that p-values can indicate how incompatible the data are with a specified statistical model.

Speaker2: [00:06:49] And that’s a loaded statement. The statistical model they’re talking about is the null hypothesis. The null hypothesis is what’s supposed to account for the data in your trials or experiments as if nothing were occurring. And I’m going to show you how it doesn’t say anything about what should be occurring. It only says what should not be occurring. P-values have been interpreted as the probability of the null hypothesis being valid, and that’s not what they are. The ASA made that clear. It says that your scientific conclusions should not be based only on whether the p-value passes a specific threshold. That threshold is usually called alpha, and the most common value, perhaps, is 0.05. It says that proper inference requires full reporting and transparency, and you don’t get full reporting and transparency just looking at the outcome as if nothing were happening. Okay. So the p-value does not measure the size of an effect or the importance of an experimental result. The p-value does not provide a good measure of evidence regarding a model or hypothesis. Now, Greg’s had me review a number of papers and a number of books. The books I reviewed for him include Taubes’ Good Calories, Bad Calories; Sears’ Zone Diet; Tom Seyfried’s Cancer as a Metabolic Disease; Tim Noakes’... Tim. Oh, hi.
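As a rough illustration of the point above, the following sketch computes a one-sided p-value and compares it with alpha = 0.05. It assumes a normal model with known standard deviation, and the function name `one_sided_p_value` and the sample numbers are hypothetical, not from the talk. Note that the calculation uses only the null model: it gives the probability of data at least this extreme if nothing were occurring, and not the probability that the null hypothesis is true.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_p_value(sample_mean, null_mean, sigma, n):
    """Probability, under the null model only, of a sample mean at least
    as large as the one observed (normal model, known sigma: an assumption)."""
    standard_error = sigma / sqrt(n)
    z = (sample_mean - null_mean) / standard_error
    return 1.0 - NormalDist().cdf(z)


ALPHA = 0.05  # the conventional threshold mentioned in the talk

# Hypothetical numbers chosen only for illustration.
p = one_sided_p_value(sample_mean=0.5, null_mean=0.0, sigma=1.0, n=16)
print(f"p-value = {p:.4f}, below alpha: {p < ALPHA}")
```

Nothing in this calculation refers to the effect the experimenter hopes to find, which is why passing the threshold says nothing about the size of that effect.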

Speaker2: [00:08:45] You’re behind the podium, I see. Tim Noakes’ Waterlogged, and papers by the McCray’s and a number of other people. And my reviews have not been successful. I did review papers from the Devor study, and I did communicate with attorneys on some of my findings. But I have no follow-up from the attorneys on whether my views and comments ever got into the case law or not. I have no idea. But my review of Tom Seyfried’s book hit a stall, and it hit a stall because of this ASA release. I was having trouble going through some of his claims in the book because they relied on the p-value, and I didn’t know what to do about that. While I was struggling, I was communicating with Tom Seyfried. Actually, we had some email exchanges. God, really a pleasant guy and fun to talk to. But I came to the point where the ASA had disapproved that use altogether. And Greg said that’s when I have to come here and talk to you about that and tell you the rest of the story. So at the bottom of the ASA’s list is a note: of special note is the following article, and it’s by Greenland. I need a magnifying glass here. Maybe it’s that one. Okay. The Greenland article, which is included in the ASA critique by reference, says that correct use requires an attention to detail which seems to tax the patience of working scientists.

Speaker2: [00:11:09] And he says this high cognitive demand has led to an epidemic of shortcut definitions and interpretations that are simply wrong, sometimes disastrously so, and that these misinterpretations that come about from use of the p-value and the null hypothesis and statistical significance, he says, dominate much of the scientific literature. That’s a huge condemnation, and it’s included by the American Statistical Association by reference. Now, the American Statistical Association is the champion of what you would call Fisherian statistics, that is, Ronald A. Fisher’s type of statistics. He is the man. He’s made some immense contributions to science and statistics. He’s quite a mathematician. He was a little bit short on giving people credit and a little bit short on defining his words. He liked to use what we call ostensive definitions. He would just give a description. You’re supposed to understand his description and get the definition of the words out of his description. So he was a very difficult man to read. But he created the null hypothesis. He named it the null hypothesis in 1937. And in the papers leading up to 1937, mostly in the early twenties, he was working with a fella we know by the name of Student. His name was William Gosset, and William Gosset worked for a brewery. The story that goes around is that the brewery didn’t want his name attached to any scientific papers because he worked for the brewery.

Speaker2: [00:13:14] So he published a paper on what’s called Student’s t-distribution. It’s called that now, anyway. And he published under the pseudonym “Student.” His t-distribution lets the statistician estimate the difference between the mean of a sample taken in an experiment and the true mean of the distribution. And so Gosset worked closely with Fisher. Fisher was quite enamored with that and made a lot of use of Student’s t-distribution. Gosset was, I guess, a student or attending at Cambridge, and he came to know Egon Pearson personally. Egon Pearson was the son of Karl Pearson, spelled with a K or a C depending on what time you look at his life. Egon was afraid that his father disapproved of him, that he wasn’t successful enough for his father. He was in a doctoral program in his late twenties, and he came to know Gosset. He knew that Gosset was working with Fisher, and he asked Gosset if Gosset could help Egon learn what he called Mark II statistics. This was at a time in our history, between the world wars, where we didn’t have, you know, version zero, version A, or whatever the current language is, and we called things Mark I, Mark II, Mark III, Mark IV. Egon said the statistics of his father were Mark I statistics, and he wanted Gosset to teach him how to do the kind of statistics that Gosset and Fisher were doing together.
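The idea behind Student’s t, comparing a sample mean with a hypothesized true mean in units of the estimated standard error, can be sketched as follows. This is a minimal illustration, not something shown in the talk: the function name `t_statistic` and the sample values are hypothetical, and the sketch stops at the statistic itself (it would then be compared against a t-distribution with n − 1 degrees of freedom).

```python
from math import sqrt
from statistics import mean, stdev


def t_statistic(sample, hypothesized_mean):
    """One-sample t statistic: distance of the sample mean from the
    hypothesized mean, in units of the estimated standard error."""
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)  # stdev uses the n - 1 divisor
    return (mean(sample) - hypothesized_mean) / standard_error


# Hypothetical measurements, chosen only for illustration.
sample = [5.1, 4.9, 5.3, 5.0, 5.2]
t = t_statistic(sample, hypothesized_mean=5.0)
degrees_of_freedom = len(sample) - 1
print(f"t = {t:.4f} with {degrees_of_freedom} degrees of freedom")
```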

Speaker2: [00:15:42] And he called that Mark II statistics. So Gosset went away for a while and studied the problem, and he came back and said that he didn’t think that Fisher was doing the right thing at all, that you can’t determine the results of an experiment only by looking at the null hypothesis, that you have to look at the probability of getting the answer that you think you got as if the effect were actually there. Well, Egon said, I think you’re on to something. He talked to his father about it, thought about it some more, got back to Gosset, and he said, this is too mathematical for me. I want you to see my friend Jerzy Neyman. And so Gosset took the problem to Jerzy Neyman. Jerzy Neyman agreed. Jerzy Neyman got back together with Egon Pearson, and within two years of being told about this issue, that you ought to be looking at two probability distributions, they published their first paper, and it was on what’s now called Neyman-Pearson statistics. I think it ought to be called Gosset statistics. Gosset didn’t write the paper, but he sure provided the idea in the first place.

Speaker2: [00:17:17] Now, we’re going to move ahead here. What you’re getting is the executive summary of a very long presentation. One of the characters on my list of ten, one of the events of science, was the work of Newton in his Principia Mathematica. And I want to draw a little bit on what he famously said. He said, if I saw further than anyone else, it’s because I stood on the shoulders of giants. I have taken that seriously and tried to look back through the history of the people that contributed to where we are today in science and statistics. And I put them on this chart. The bar is the length of their life span, and you can barely see a little sawtooth line up here on the chart. Above that is a different time scale altogether. It’s a time period from 500 B.C. to 1300. And if you ever tried to plot that, you don’t know what to do about zero. It comes out that there is no year zero. So anyway, I call it plus or minus one on the chart. If you read the history of science, it starts with Aristotle. There is a man, Ponticus, who preceded him in life and lasted longer than he did. Ponticus was an astronomer, a Greek astronomer. Amazing thing: a man with no optical instruments whatsoever.

Speaker2: [00:19:23] And Ponticus figured out that the Earth rotates on its axis. That’s something that people still have trouble with. Some people think that he may have been aware of heliocentricity, that the sun was the center of the solar system, but that can’t be proved. But Aristotle deserves to be called the father of science. He is the first person to divide science into its various disciplines. If you go to Google and you Google any big scientific subject, like biology, and Aristotle, you’ll come up with a story that Aristotle is the father of biology, and you can pick almost any subject you want. Aristotle wrote something about it and had something to say about it. But Aristotle was an observer, and he brought into science not just its division into the different fields. He also brought logic into it. His logic included induction, and induction is a big problem in the evolution of the science that I’m talking about. Aristotle recognized that in the real world there are processes going on that have cause and effect, and each one has another cause and effect. He would extrapolate backwards in cause and effect until he got back to an original cause, a first cause, as the translators call it today. And that logic stayed with science for almost 2,000 years. The man who changed it was Bacon.

Speaker2: [00:21:29] Francis Bacon. He shows up below the dashed line, just below Copernicus. Bacon shows up first because he was born so soon, but he didn’t write anything of any significance until 1620, which was, I think, six or eight years before his death. So he was a pretty senior guy by the time he wrote anything, and he had available to him the work of Galileo and possibly the work of Descartes. Descartes published after him, but Descartes was in there working at the same time. And I put Harvey in there because I thought that Harvey might well have been influenced by what Bacon was saying when Harvey did his work on the circulatory system. I tried to find out if they’d ever met and talked, and I found out that Bacon was a patient of Harvey. So while Harvey published much later than Bacon, they were probably on good speaking terms, and he got a lot of help. It was a giant standing on the shoulders of a giant. What Bacon said was that the Aristotelian logic, which goes back to first causes, was silly and childish, which is not to Bacon’s credit. But he said that what we can do in science is no more than look at a single cause and effect, because in a single cause and effect we can measure both the state of our system before and the state of the system afterwards.

Speaker2: [00:23:24] We can be witnesses to the process. But in the Aristotelian model, we can’t be. So Bacon introduced cause and effect to science. He took it out of the hands of Aristotle’s infinite regress, as it’s called in philosophy, and broke it into little bite-sized pieces. And that has stayed with us ever since. The cause and effect cannot be measured. We postulate it. We guess what it is, in the words of some of the scientists. And then we test and see whether our guess helps us predict anything with our scientific model. Newton is on the chart. He’s the next red line after Bacon. Newton is extremely important because he introduced causes in the terms of force, momentum, and gravity, where gravity was force at a distance. These are things that are just part of our everyday knowledge today, but they were things that are not immediately evident. Newton appears to have taken Bacon’s teachings very seriously and demonstrated it. The first man to have used the p-value apparently was Laplace, the French mathematician. I’m skipping over Bayes. Bayes is the guy that gave us what’s called Bayes’ theorem, and that’s how what’s called today conditional probability comes to pass. Fisher was a decided opponent of that kind of probability theory, but Fisher didn’t have the modern probability theory. That didn’t come about until it was published in 1933, and that was sometime after Fisher was writing his stuff.

Speaker2: [00:25:43] So let’s move ahead, if I can make this cursor move. Okay. I’m going to be a slave to the charts. In an article by Gupta in the Stanford Encyclopedia of Philosophy, he identifies these six different kinds of definitions: real, nominal, ostensive, stipulative, descriptive (intensional and extensional), and explicative, which is just a mixture. And as I mentioned, the ostensive definition, number three, was something that’s very common in Fisher’s work. But there’s another kind of definition, which I call the formal definition, and it has three parts. The first is the definiendum. That’s the thing that you’re going to define, and we want to define science. The genus is the category of things that it belongs to. And I propose, from what I’ve read of these papers and in practice, that the genus is that science is a branch of knowledge. I’m going to come up with Popper. I like to call him Popper and I shouldn’t. But Popper said that science cannot be regarded as a branch of knowledge. So I’m going to step on his toes immediately. And the next thing is the differentia, which allows you to distinguish between science as a branch of knowledge and anything else as a branch of knowledge. Okay. And the definition that I propose is that science is the objective branch of knowledge, and it’s the branch of knowledge that makes predictions that can be validated.

Speaker2: [00:28:00] And those are the criteria that make science what it is, from the time of Bacon and as practiced until Popper came along. Next, I’m going to use that to define a fact. A fact, I propose, is a statement in science that comprises a complete observation reduced to measurements and compared with standards. Now, that is a really harsh item, a harsh definition for science. But the definition I’m going to give you has some hooks in it, some control knobs that you can vary. If you don’t like my definition of science, what you need to do is change the definition of a fact so that other things can become evidence for whatever it is you’re trying to model, whatever it is you’re trying to experiment on. Now, I claim, from what little I’ve given you so far, that you can derive that scientific propositions describe experiments. They take you from existing facts to future facts. And if they’re any good, if those facts actually come true, then the scientific model has become validated. Okay. Now, let’s move ahead here. I want to go back to this personality chart here, the notables in science. Let’s say in the 18th century, there were exceptional advances in physics. And by the way, I want to say that if my definition of science seems to be strongly physics oriented, it is, and it is because physics came first in providing us a working model for our definition of science.

Speaker2: [00:30:43] And the second thing is, if it’s too strict, feel free in your own work to adjust what a fact is and whether or not you’re predicting facts from facts, according to whatever your model is. The model of facts, for example, casts great doubt on whether psychology can be a science at all. And there are papers written by psychologists for and against the proposition that psychology is a science. Okay. In the 18th century... am I getting the right century? No. In the 19th century, while the great strides were being made in science, Ernst Mach came along. Ernst Mach was a pretty fair scientist, a physicist, and also considered himself a philosopher. He saw that science was pulling away from philosophy, that it was becoming distant from philosophy, and he wanted to see it brought back into philosophy. He discussed this with people at the University of Vienna, and they created the Ernst Mach Society. Ernst Mach died, I think, in 1918, and the society survived him for a few years. It turned into the Vienna Circle, with exactly the same people who were in the Ernst Mach Society meeting to discuss whether science should be modified to make it a philosophy, or philosophy should be modified to make it a science, or none of the above.

Speaker2: [00:32:46] It turned out none of the above won. But while that society was meeting, Karl Popper was sitting on the sidelines at the University of Vienna and was never invited to speak at any of the Vienna Circle meetings. And yet other people that he considered competitors, like Wittgenstein, were invited. So he set about to solve the problem that he thought the Vienna Circle people were trying to solve. Now, what he did was he ignored what Bacon had written. I wonder whether Popper ever read Bacon at all. But he said that Bacon supported this infinite-regression kind of inference, induction, and Bacon did not. Bacon broke away from the Aristotelian induction. So Popper said that you need a criterion, what he called a criterion of demarcation, so you can tell the difference between science and non-science. And what he said was required to do that is that you have to be able to turn a scientific statement into its falsification. Popper is most well known, I think, for his falsification criterion. Well, if they had had a decent definition of science in the first place, it would have taken care of the problem. So Popper changed the definition of science in several ways. He said that there’s no way that a single man can be objective. And in that he may have been right. He may have hit the truth. Therefore, he said, the only way to determine whether or not a scientific proposition is valid is to do it through intersubjective testing, intersubjectivity, and that involves multiple people working together as a consensus, as peers in a peer review structure and in publication in professional journals.

Speaker2: [00:35:45] Um. At the same time, Fisher was saying that you can’t judge an experiment by whether or not its results are valid. You can only do the null hypothesis, and that is to look at it as if it were invalid. It’s the same story as Popper was telling: Fisher was putting it into statistics and Popper was putting it into philosophy. Yeah. Well, we’re coming to the end of the story. And the end of the story is that the Neyman-Pearson statistics were a reaction against, and in contradiction to, the Fisher statistics, where only the null hypothesis occurs. I’m going to give you a couple of pictures here and get off the stage. This is Fisher’s decision model of the null hypothesis. You have some sort of a probability density, and what the density looks like depends upon what statistic you use. If you look at the difference of the means, or you look at the variance ratios, whatever you look at, you get a different kind of curve. Fisher said you can decide whether or not your results are statistically significant by looking at the shaded area, and if the shaded area is small enough, then your scientific result is valid.

Speaker2: [00:37:47] But that has nothing to do with the actual experiment. It’s just missing the experiment. What Gosset said was that to do that, you have to look at not only the null hypothesis, called H zero here, but also a second hypothesis. Everybody calls that the alternative hypothesis. I don’t like that, because it’s the alternative to the null, and it puts all the features of statistics on the null. It’s the affirmative hypothesis. It’s the hypothesis of the experiment that you’re making, of the tests that you’re doing. Okay. And it took Neyman, Pearson, and the work that has continued since then to establish a threshold. Then we look at the ratio of the p-value, which is the shaded area to the right of the threshold line, versus the probability of a Type II error. And Fisher said, you don’t ever need Type II errors. That’s a bunch of garbage. Okay. But this is the mode of where we’re going with statistics. And if the disapproval by the ASA is taken to heart, you’re going to see that this is the kind of model that you’re going to have to make for any statistical experiment that you want to conduct. Okay. So I’m going to leave you with that and return this to the master of ceremonies.
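The two-distribution picture described above can be sketched numerically. The example below is an illustration under stated assumptions, not the speaker’s slide: both hypotheses are modeled as normal distributions with hypothetical means and a shared standard deviation, the threshold is placed so that the area under the null to its right is 0.05, and the function name `error_rates` is made up for this sketch. It shows that the Type II error probability can only be computed once the affirmative hypothesis is written down.

```python
from statistics import NormalDist


def error_rates(null_dist, affirmative_dist, threshold):
    """Return (alpha, beta) for a decision rule that rejects the null
    when the test statistic exceeds the threshold."""
    # Type I error: area under the null density to the right of the threshold.
    alpha = 1.0 - null_dist.cdf(threshold)
    # Type II error: area under the affirmative density to the left of it.
    beta = affirmative_dist.cdf(threshold)
    return alpha, beta


# Hypothetical models, chosen only for illustration.
h0 = NormalDist(mu=0.0, sigma=1.0)  # "nothing is occurring"
h1 = NormalDist(mu=3.0, sigma=1.0)  # the effect the experiment is looking for

threshold = h0.inv_cdf(0.95)  # puts 5% of the null density to the right
alpha, beta = error_rates(h0, h1, threshold)
power = 1.0 - beta

print(f"threshold = {threshold:.3f}")
print(f"alpha (Type I) = {alpha:.3f}, beta (Type II) = {beta:.3f}, power = {power:.3f}")
```

Moving the hypothetical mean of `h1` closer to zero leaves alpha unchanged but raises beta, which is the information a null-only analysis never reports.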

Let's start with the truth!

Support the Broken Science Initiative.

Subscribe today →

2 Comments

Leave A Comment

You must be logged in to post a comment.

recent posts

Expanding Horizons: Physical and Mental Rehabilitation for Juveniles in Ohio

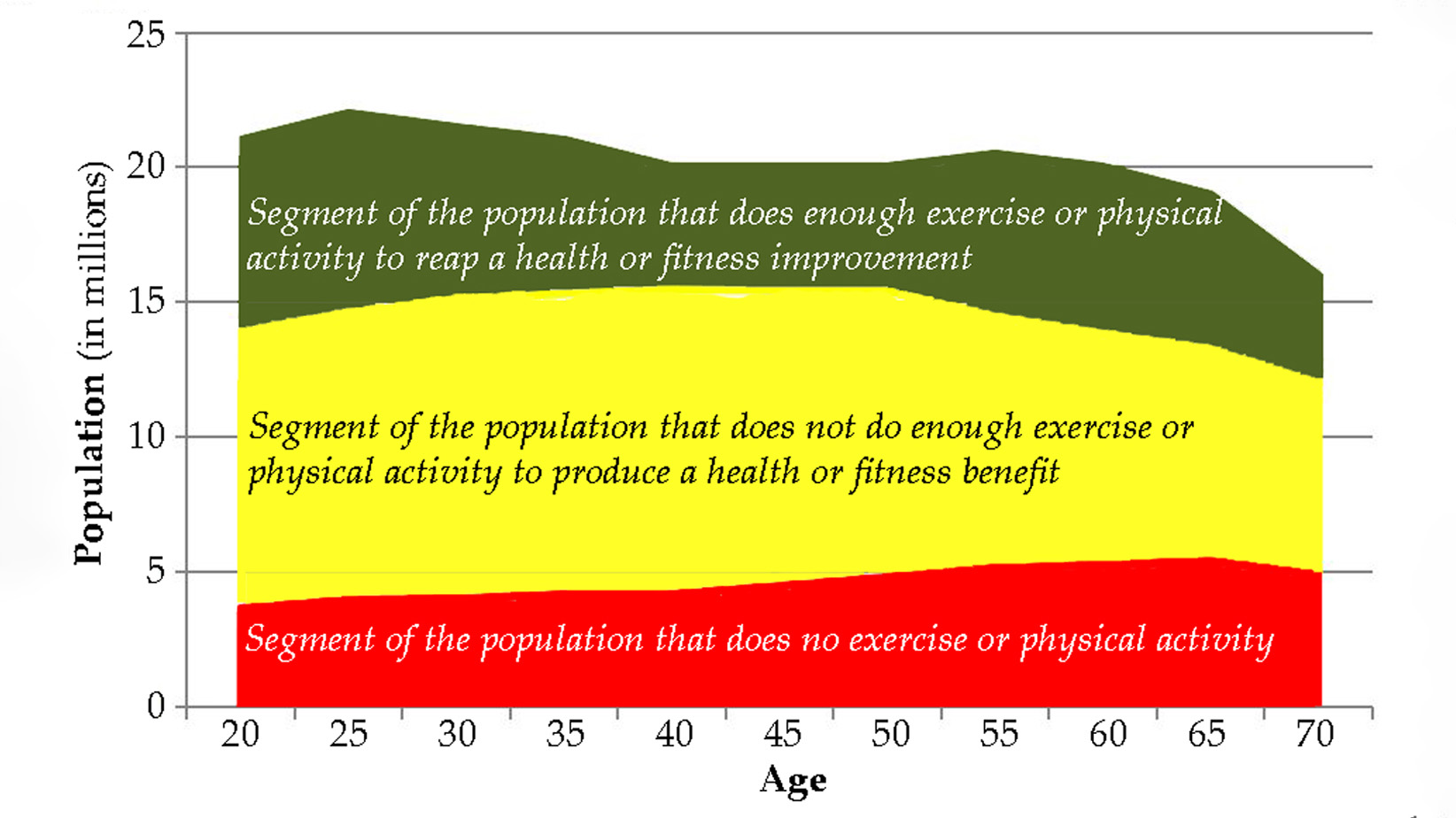

Maintaining quality of life and preventing pain as we age.

Video is listed as private

Thank you. The link will be been updated.