Summary

The authors of this paper, Briggs, Nguyen, and Trafimow, address the well-recognized problems with p values and Bayes factors. These industry-standard methods lead to over-certainty and to the belief that a cause has been proven when it has not. As a solution, the paper proposes a return to a traditional probabilistic method consisting of three steps:

Making direct predictions from models to observables.

Weighing evidence by its impact on predictive strength.

Verifying predictions against reality.

The wish of any scientist conducting a hypothesis test is to assess the truth and utility of a hypothesis. Both current methods fall short of this goal. P values cannot assess the truth of a hypothesis by design, as they were intended only to falsify a hypothesis. Bayes factors focus on unobserved parameters, so they can exaggerate the strength of evidence. A key point the authors make is that statistical models can only find correlations between observables, but they cannot identify causal relationships.

This is well known among statisticians, but many researchers believe a hypothesis test does what its name implies: test the validity of a hypothesis. Even though a p value cannot judge the truth or falsity of a hypothesis, many scientists use it in a ritualistic manner (as described by Gigerenzer). The authors believe p values are so misused that they should be abandoned. Their critique of Bayes factors is that these focus on parameters, whose certainty always exceeds the certainty of observables. Reporting certainty about a parameter therefore overstates what is known about the observables, because any uncertainty in the parameters feeds into the model and amplifies the uncertainty of the observables.

The philosophy presented in this paper emphasizes that probability is conditional and not causal. It focuses on observables and measurable factors that influence observables.

The authors give their own schema for making quantifiable predictions, expressed in the following formula:

Pr(y ∈ s|X, D, M)

The formula gives the probability that y is a member of the set s (y ∈ s). For example, y could be the side that comes up on a die, and s a set of sides drawn from 1, 2…6.

To the right of the vertical bar are the conditions of the probability calculation. D is optional and stands for any relevant existing data. M is the set of premises that make up the model. X represents any new measurement values.
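To make the notation concrete, here is a minimal sketch of one way such a probability could be computed for the die example. It is an illustration under stated assumptions, not the authors' own implementation: the model M is assumed to be a Dirichlet-multinomial with equal prior weight on each side, D is a set of invented roll counts, X is omitted, and the name `predictive_probability` is hypothetical.

```python
from fractions import Fraction


def predictive_probability(s, counts, prior_weight=1):
    """Pr(y in s | D, M) for the next roll of a die.

    Assumed model M: Dirichlet-multinomial with the same prior weight
    on every side. D is `counts`, a mapping from side to past count.
    """
    total = sum(counts.values()) + prior_weight * len(counts)
    return sum(Fraction(counts[side] + prior_weight, total) for side in s)


sides = range(1, 7)

# With no data, the premises in M alone fix the probability.
no_data = {side: 0 for side in sides}
print(predictive_probability({5, 6}, no_data))  # 1/3

# Invented counts from 20 past rolls (illustrative only).
rolls = {1: 3, 2: 2, 3: 4, 4: 1, 5: 6, 6: 4}
print(predictive_probability({5, 6}, rolls))  # 6/13
```

The output is a statement about the observable itself (the next roll), conditional on the stated premises and data. No parameter estimate is reported along the way.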

The process of model building usually starts with defining M. Every premise relevant to the probability, along with its logical relations to the other premises, must be defined. This step relies heavily on the researcher's judgment about which premises are relevant and which should be excluded. The authors lament that researchers rarely test these assumptions.

Model builders should test their models by checking whether each additional premise has a measurable impact on the probability of y at some value of x. If a premise has no effect on predictions, it is rejected. The paper points out that ardent advocates of p values and Bayes factors do not stringently follow their own rules. To do so would require them to calculate a p value for every possible hypothesis before ruling it out. There is an endless supply of potential hypotheses, so this process would never end. The method presented here, by contrast, is consistent with its own rules.
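The relevance check described above can be sketched as a comparison of predictive probabilities with and without a candidate premise. The example below is a simplified illustration, not the authors' procedure: it assumes a binary outcome (brand recalled or not), a uniform Beta(1, 1) prior, and invented counts; `predictive` and the genre data are hypothetical.

```python
def predictive(successes, trials):
    """Pr(next y = 1 | D, M) under an assumed uniform Beta(1, 1) prior."""
    return (successes + 1) / (trials + 2)


# Invented data: (viewers who recalled the brand, viewers) per genre.
data = {"drama": (30, 50), "comedy": (22, 50), "action": (20, 50)}

# Model without the genre premise: pool every viewer together.
pooled_successes = sum(s for s, _ in data.values())
pooled_trials = sum(n for _, n in data.values())
p_without = predictive(pooled_successes, pooled_trials)

# Model with the genre premise: one predictive probability per genre.
changes = {
    genre: predictive(s, n) - p_without for genre, (s, n) in data.items()
}

for genre, change in changes.items():
    print(f"{genre}: change in Pr(recall) = {change:+.3f}")
```

The sketch deliberately applies no threshold. Whether a given change in probability matters is left to whoever will act on the prediction.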

An advantage of this approach is that estimates of unobservable parameters are unnecessary. This prediction-based process unifies testing and estimation. It can test both the model itself and the relevance of the data fed into it.

The authors present two applications of this method. Both are adapted from papers that had been written using conventional methods. The first was a study of how well people recalled the brands shown in the advertisements before a movie. It investigated whether the genre of movie affected the participants' recall. A significant p value was found for the drama genre, but not for the others. The published paper touted the finding that brand recall was enhanced by watching dramatic movies. The authors' own analysis uncovered differences in probability based on the sex of the viewer and the genre of movie. Some of these went unnoticed in the p value analysis. The authors leave it to readers of this paper to decide if these differences are meaningful.

The second example makes predictions of academic salaries based on department, sex, years since PhD, and years of experience. The authors present a standard ANOVA analysis and compare it to their own predictive ANOVA analysis. The predictive results are much easier to interpret, and the method itself is more flexible and can better answer questions of interest to decision makers.

The authors conclude that this predictive approach, while superior to conventional hypothesis testing, does not solve all problems. Researchers still yearn for automation and definite answers. This method requires more work and does not yield a magic number denoting significance. Instead, it calculates easily understood probability values, which can be used to make decisions. A good model will facilitate good decisions. Furthermore, the inner workings of a model are made transparent by this method.

The most important point is that models must be tested and verified. This is no guarantee that a theory is true or unique, as it is always possible to generate more theories to fit a set of data. But it does make it likely that a model that has performed well in the past will continue to be useful in the future.
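One common way to verify probability predictions against outcomes is a proper scoring rule such as the Brier score. The sketch below is offered as an illustration of the verification step, not as the scoring method used in the paper; the predictions and outcomes are invented and `brier_score` is a hypothetical helper.

```python
def brier_score(probabilities, outcomes):
    """Mean squared difference between predicted probabilities and
    what actually happened (1 = event occurred, 0 = it did not).
    Lower is better; 0 would be a perfect record."""
    if len(probabilities) != len(outcomes):
        raise ValueError("need one outcome per prediction")
    squared_errors = [(p - o) ** 2 for p, o in zip(probabilities, outcomes)]
    return sum(squared_errors) / len(squared_errors)


# Invented predictions made before the outcomes were observed.
predicted = [0.9, 0.7, 0.2, 0.6]
observed = [1, 1, 0, 0]

model_score = brier_score(predicted, observed)

# Naive reference model that always predicts 0.5.
baseline_score = brier_score([0.5] * len(observed), observed)

print(f"model: {model_score:.3f}, baseline: {baseline_score:.3f}")
```

A model that keeps scoring better than a simple reference on new observations has earned some trust, though, as the paragraph above notes, that is no guarantee the underlying theory is true or unique.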

Scientists do experiments to test ideas called hypotheses. They want to know if their hypotheses are true. To find out, they often use math to figure out p-values or Bayes factors. These numbers are supposed to tell a scientist whether their hypothesis is true or not.

But there are big problems with these tests. They make scientists way too sure they proved something, when they really didn't.

So the authors of this paper want to test hypotheses in an old-fashioned way:

Use models to make predictions.

Judge evidence by how well it improves predictions.

Check if predictions match reality.

The authors came up with a formula to do this:

Pr(y ∈ s|X, D, M)

This calculates the chance of y being in a set s, based on:

X = new measurements, D = existing data, M = the model.

In other words, the formula puts together old measurements, new measurements, and every factor that could change the probability of the outcome.

Before a scientist even builds a model, they have to decide what factors might make it work better and which don't do anything. They test each factor to see if it makes the model better or not. If not, they remove it.

This method is better because it tests the quality of a model while it's being built.

The authors gave two examples using their new method on old studies. In both cases, this new method revealed insights the old methods missed. Even though it is better, scientists might not use it, because it takes more work and doesn't give a yes/no answer. But it does give easy-to-understand probability values that can help make decisions.

A key point of this paper is that models must be tested against the real world. This doesn't guarantee their predictions will always be right. But models that have worked before will likely work again.

In summary, this new statistical method focuses on testable predictions, not proving hypotheses. This method answers the types of questions real people have and helps them make better decisions.

--------- Original ---------

ABSTRACT. Classical hypothesis testing, whether with p-values or Bayes factors, leads to over-certainty, and produces the false idea that causes have been identified via statistical methods. The limitations and abuses of in particular p-values are so well known and by now so egregious, that a new method is badly in need. We propose returning to an old idea, making direct predictions by models of observables, assessing the value of evidence by the change in predictive ability, and then verifying the predictions against reality. The latter step is badly in need of implementation.

Scientists often use statistical tests to try to prove if their hypotheses are true. Two common tests are p-values and Bayes factors. But these tests have big problems. They make scientists way too confident that they have proven something, when they actually haven't.

So the authors of this paper want to go back to an old-fashioned way of testing hypotheses:

Make predictions using models.

See how much evidence supports the predictions.

Check if the predictions match reality.

When scientists do a test, they want to know if their hypothesis is true and useful. But p-values can't say if a hypothesis is true. And Bayes factors focus on things we can't observe directly. So both methods are flawed.

Here is the formula the authors suggest instead:

Pr(y ∈ s|X, D, M)

This calculates the probability of y being part of a set s, based on:

X = new measurements, D = existing data, M = the model (which includes all of the factors relevant to the prediction).

The first step in building a model is deciding which factors, or premises, might affect the probability. Test each premise to see if the probability changes with that premise included. If it doesn't, get rid of it.

This method is better because it tests the model as you build it.

The authors show two examples adapted from old studies. In both cases, this new method gave them extra insights the old methods missed. It takes more work to do it this way and it doesn't give a simple "yes or no" answer, so scientists might not try it. However, it gives simple probability values that can be useful for making decisions.

The key point of this paper is that models must be tested against reality. This doesn't guarantee a model is perfect. But it means models that have worked before will likely work in the future.

In summary, this statistical method focuses on testable predictions rather than trying to prove hypotheses. It gives scientists a tool to answer the research questions people actually care about.

--------- Original ---------

ABSTRACT. Classical hypothesis testing, whether with p-values or Bayes factors, leads to over-certainty, and produces the false idea that causes have been identified via statistical methods. The limitations and abuses of in particular p-values are so well known and by now so egregious, that a new method is badly in need. We propose returning to an old idea, making direct predictions by models of observables, assessing the value of evidence by the change in predictive ability, and then verifying the predictions against reality. The latter step is badly in need of implementation.Let's start with the truth!

Support the Broken Science Initiative.

Subscribe today →

recent posts

Expanding Horizons: Physical and Mental Rehabilitation for Juveniles in Ohio

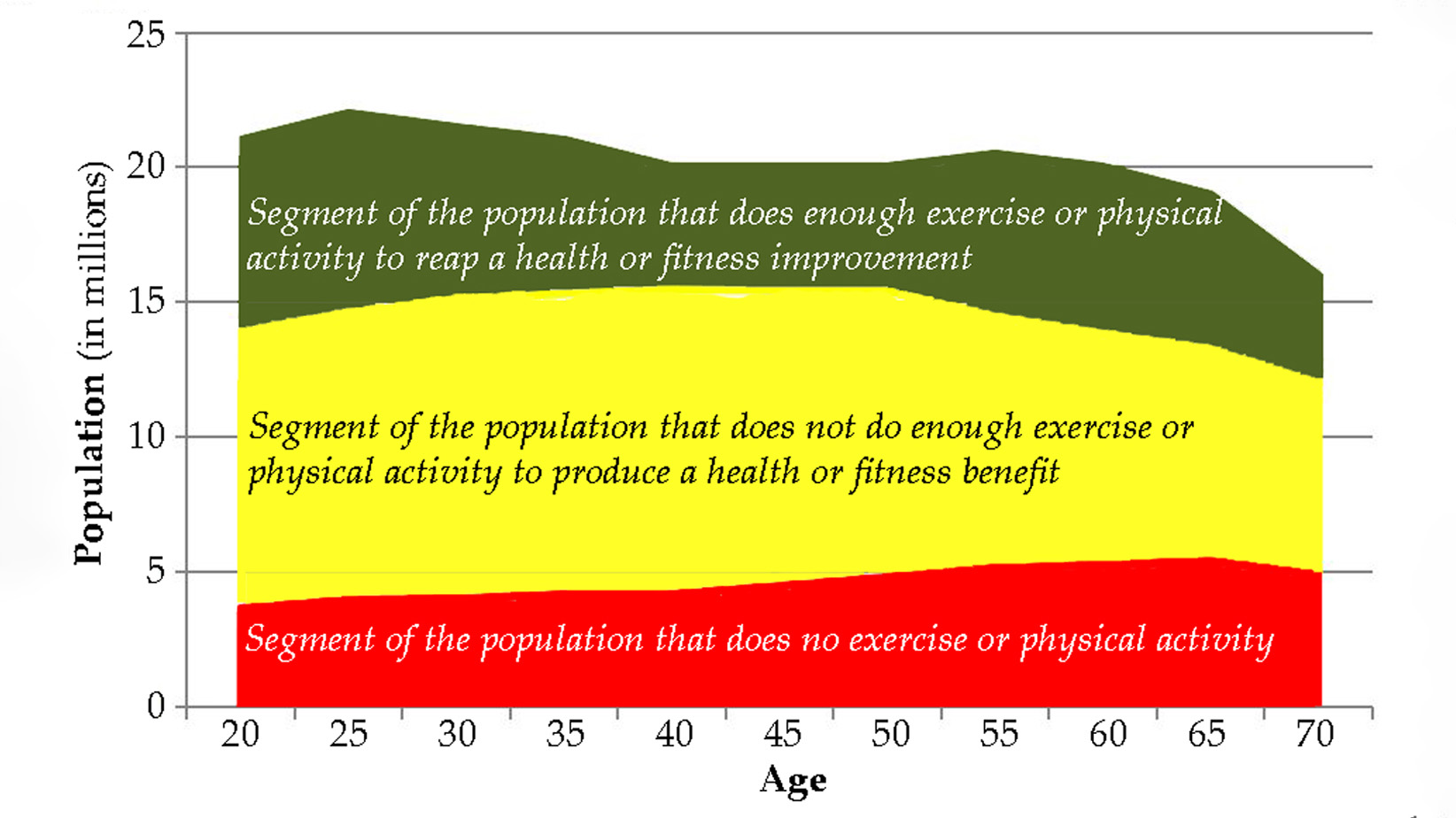

Maintaining quality of life and preventing pain as we age.