Def Con is a reminder, not a revelation: a gathering of hackers, engineers, and researchers in Las Vegas who stress-test the systems the world relies on and show, again, that none of them is bulletproof.

As a software engineer, I didn’t expect magical tools or impenetrable defenses. That’s not what this field is about. What stood out was the reinforcement of what security experts have always known: security isn’t a product you buy. It isn’t a checkbox. It isn’t something you ever “finish.”

It’s a continual process of awareness, testing, and adapting, and Def Con underscored just how necessary it is to treat it that way.

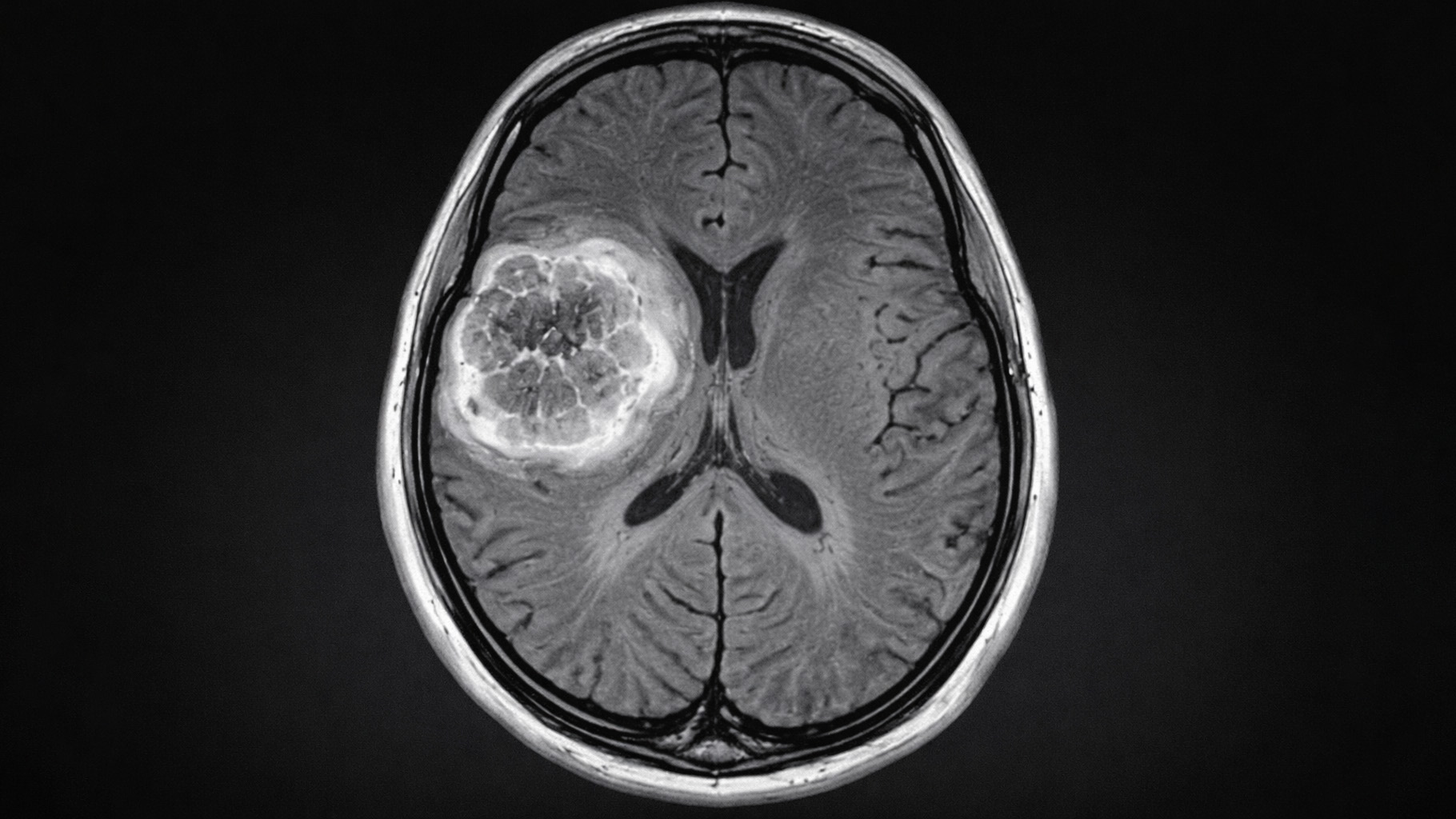

Hospitals Running on Hope

One of the events I joined was a hospital-themed hackathon. The exercise wasn’t meant to shock, but to demonstrate the obvious risks hiding in plain sight.

Prescription dispensers could be manipulated. Hospital computers ran outdated software. Critical records lived in systems that should have been retired years ago.

It wasn’t fiction. It wasn’t exaggeration. It was a live demonstration of what many in the field already suspect: the healthcare sector is running too much infrastructure on hope and outdated machines.

And even if you patch software, encrypt files, and retrain staff, you can’t remove the fundamental issue: too many people and too many points of failure. Medical data passes through too many hands to ever be considered entirely safe.

The hackathon drove the point home: in healthcare, the stakes are high and the systems are vulnerable.

AI and the Pink Salt Problem

If hospital systems showed the fragility of our infrastructure, AI case studies showed the fragility of our judgment.

Consider the viral scam examined in “AI, Pink Salt, and the Problem of Plausible Nonsense.”

A fake video of Oprah Winfrey promoting a pink salt and lemon juice “detox” circulated online. It wasn’t Oprah. But it looked and sounded convincing. The warmth in her voice. The pauses. The little crinkles in her eyes. It felt real enough to bypass skepticism.

This wasn’t entertainment. It was fraud.

The difference between this and earlier deepfakes, like the Tom Cruise TikTok videos from 2021, is intent. Those were uncanny but playful. This was calculated. The pink salt scam weaponized trust. It used AI not just to imitate a face, but to tell a story, complete with testimonials, urgency, and emotional hooks designed to disarm.

And it worked.

Trust as the Attack Vector

That’s what security professionals already know: technology doesn’t need to be flawless to succeed in an attack. It just needs to exploit the weakest link: our trust.

Voice-cloning scams show this too. A parent gets a frantic call that sounds like their child, begging for help. Jail, hospital, kidnapping: the story doesn’t matter. A few seconds of real audio can be enough for an AI model to produce a convincing imitation. And once the emotional response takes over, logic doesn’t stand a chance.

It’s not about hacking machines. It’s about hacking people.

The Collapse of Filters

What makes all this worse is the collapse of reliable filters.

News outlets chase outrage clicks. Academia struggles under the weight of fraudulent studies: more than 10,000 papers were retracted in 2023 alone, many tied to fake data or AI-generated text. Peer review is stretched thin. Journalism is compromised by speed.

The institutions we once relied on to separate truth from fiction aren’t keeping up. If a blue checkmark, a journal name, or a byline can no longer be trusted, the burden shifts back to us.

That isn’t a surprise. But it is a reality we need to keep at the front of our minds.

The Only Defense That Holds

If there’s a single throughline between hacked hospital computers and fake Oprah weight-loss pitches, it’s this: the only real defense left is critical thinking.

Not paranoia. Not cynicism. But deliberate, practiced skepticism.

- Check the source. Look for verified accounts, official press releases, or other trusted channels.

- Google the quote. If it’s real, others will report it. If it’s fake, others will warn you.

- Look past the polish. Professional design and high production value mean nothing. AI can fake slickness.

- Ask who profits. Someone always does.

Security can’t be bought in the form of a browser extension or a shiny product. It’s a habit. A mental immune system you build over time.

What Def Con Reinforces

The hospital hackathon wasn’t news to anyone in the field. It was proof. The pink salt scam wasn’t unexpected. It was confirmation.

Security professionals already know the truth: risk is permanent. Systems will always be vulnerable. People will always be susceptible. The point isn’t to eliminate risk, but to recognize it, question it, and adapt.

Def Con didn’t surprise me. It reminded me.

Security isn’t about guarantees. It’s about vigilance.

And in a world where anyone can fake a face, a voice, or a trusted source, the strongest firewall we have isn’t in our machines.

It’s in our minds.

Support the Broken Science Initiative.