By Russell Berger

Over the last few years, a series of high-profile retractions, resignations, and lawsuits has shaken academic science. In 2018, Brian Wansink, the head of Cornell University’s Food and Brand Lab, resigned after the American Medical Association retracted six of his studies. Wansink’s work was highly influential, serving as the basis of the Obama administration’s “Smarter Lunchrooms” school lunch program. In July 2023, Stanford president Marc Tessier-Lavigne resigned amidst allegations that he falsified data in his Alzheimer’s research. In October, Harvard behavioral scientist Francesca Gino was placed on administrative leave after independent investigators claimed she falsified data. She is now embroiled in a defamation lawsuit against her accusers and her university. At the beginning of 2024, Harvard president Claudine Gay resigned after extensive plagiarism was discovered in her published work. Currently, Harvard’s Dana-Farber Cancer Institute plans to retract six papers and correct an additional 31 following an investigation into claims of misconduct. The papers were authored by some of the institute’s most venerated researchers, including DFCI president and CEO Laurie Glimcher. These cases, and others like them, are drawing attention to an issue about which many have been sounding the alarm for years: scientific misconduct.

In 2021, Richard Smith, former editor in chief of the British Medical Journal and chief executive of the BMJ Publishing Group from 1991 to 2004, warned that “…the time may have come to stop assuming that research actually happened and is honestly reported, and assume that the research is fraudulent until there is some evidence to support it having happened and been honestly reported.” Smith claimed that scientific misconduct is not a problem of a “few bad apples” but an infection of the entire orchard. Others seem to share his concern. Recently, public figures like Bill Ackman have called for a review of all academic work done by tenured staff at MIT, Harvard, and other top schools. The Office of Research Integrity has also announced plans to revise its policy on research misconduct and aims to strip away some of the secrecy that plagues university investigations.

But not everyone agrees on the extent of the problem. Jeremy Fox, an ecologist at the University of Calgary, claims that scientific misconduct is rare, pointing to anonymous surveys in which only 1-2% of scientists admitted to misconduct. The obvious problem with this approach is that it measures not how many scientists commit misconduct but how many admit to it. Measurement is further complicated by varying definitions of scientific misconduct. Most definitions include, at a minimum, the classic triad of fabrication, falsification, and plagiarism (FFP). This is the definition used by the National Science Foundation (NSF) and the federal government.

The NSF defines fabrication as “making up data or results and recording or reporting them,” falsification as “manipulating research materials, equipment, or processes, or changing or omitting data or results such that the research is not accurately represented in the research record” and plagiarism as “the appropriation of another person’s ideas, processes, results, or words without giving appropriate credit.”

While FFP is a helpful starting point, it is limited in scope. Too often, the FFP schema fails to capture deceptive or fraudulent behavior that doesn’t fit neatly into one of these three categories. A scientist failing to report a significant conflict of interest, for example, would not be considered misconduct based on FFP. Yet it is well known that conflicts of interest can affect the objectivity of research. Recognizing the narrowness of FFP, many organizations have adopted expanded definitions of scientific misconduct. Canada’s definition of scientific misconduct retains the FFP triad but also includes “destruction of research records” and “mismanagement of conflict of interest.” Others, apparently hesitant to label certain behaviors as “misconduct,” acknowledge a gray area known as “questionable research practices” (QRPs). The European Code of Conduct for Research Integrity goes even further, affirming the FFP triad and listing sixteen other “violations of good research practice,” including inappropriate use of p-values, inaccurate citation, and salami publication (deceptively producing multiple papers from the same research in order to increase publication rates).

A 2009 study, “How Many Scientists Fabricate and Falsify Research? A Systematic Review and Meta-Analysis of Survey Data,” found that between 5 percent and 33 percent of surveyed scientists reported having personal knowledge of a colleague fabricating or falsifying data. When the survey questions replaced the terms “fabrication” and “falsification” with gentler phrases like “data misrepresentation” or “altering” research to “improve outcomes,” between 6.2 percent and 72 percent gave affirmative answers. One plausible explanation for the range in these responses is the discrepancy of definitions. Researchers who are merely concerned with avoiding FFP may be unaware that their colleagues have crossed the line between cleaning up “noise” in their data and dishonest manipulation. Variation in the way definitions of misconduct treat QRPs is a prime suspect in this confusion. In the article “Measuring the prevalence of questionable research practices with incentives for truth telling,” Leslie John and her coauthors note that “QRPs, by nature of the very fact that they are often questionable as opposed to blatantly improper, also offer considerable latitude for rationalization and self-deception.”

Another approach to defining scientific misconduct is to appeal broadly to scientific consensus. For example, the Wikipedia entry for scientific misconduct defines the term as “…the violation of the standard codes of scholarly conduct and ethical behavior in the publication of professional scientific research.” In contrast to the enumerative approach, this definition identifies misconduct as (allow me to restate it slightly for clarity) a deviation from accepted norms among scientists. This is not new. In the 1980s, the federal government attempted to address scientific misconduct by establishing the Office of Scientific Integrity, which defined misconduct as FFP, plus “…other practices that seriously deviate from those that are commonly accepted within the scientific community for proposing, conducting, or reporting research.” While this component of the definition was dropped in the early 1990s, many institutions continue to use this language, or employ similar appeals to what is “commonly accepted,” as their standard for measuring misconduct.

There are two significant problems with a consensus definition of scientific misconduct. First, truthful scientific endeavors may completely overturn “commonly accepted” practices in the scientific community, as we wrote last year in “The Dangers of Singular Authority.” Genuine innovations in measurement technology, statistical tools, and processes can and do disrupt what is commonly accepted among scientists. Second, practices that are commonly accepted among scientists may in fact be deceptive. As it turns out, the Wikipedia definition of scientific misconduct proves to be both illustrative of this point and deeply ironic.

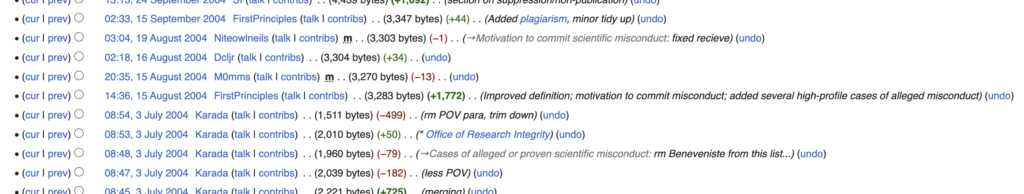

Wikipedia’s definition was first published by an anonymous Wikipedia editor in 2004 (note this date!).

The author gives no citations for this definition, implying his contribution is an original work. As expected, a Google search for this exact string of words returns dozens of results. Most of these are blogs or online articles (some probably written by AI) quoting the Wikipedia definition, and all appeared after 2004. This is to be expected if our anonymous author was the first to write this definition. But his exact words were also repeated in at least four peer-reviewed academic articles on the subject of scientific misconduct. All of these appeared without proper citations, and all well after 2004.

This 2016 paper, titled “Research Misconduct: The Peril of Publish or Perish” and published in the Oman Medical Journal, quotes the Wikipedia definition but does not provide the Wikipedia article as a source. Instead (and I’m not making this up), it cites the Consensus statement on Research Misconduct in the UK by COPE and the BMJ, a document that does not include the quoted definition or anything like it.

This 2021 paper, titled “Publication ethics: Role and responsibility of authors” and published in the Indian Journal of Gastroenterology, does the exact same thing. The authors quote the Wikipedia definition and cite a scholarly article that does not include the quoted definition or anything like it.

This 2016 paper, titled “Fraud, individuals, and networks: A biopsychosocial model of scientific frauds” and published in the aptly named journal Science and Justice, uses the Wikipedia definition but provides no citation at all.

This 2016 paper, titled “Responsible Conduct of Science and Possible methods for avoiding Scientific Misconduct in Egypt,” was presented at a conference in Cairo. It also quotes the Wikipedia definition and gives no citation.

The authors of these works, while endorsing a consensus approach to defining scientific misconduct, are guilty of plagiarism. Some even appear to have used dummy citations to cover their tracks. If we take a consensus approach to defining scientific misconduct, can we say this is wrong? Remember, the consensus approach defines misconduct not in terms of deception, but by deviation from standard scientific norms. Even if we assume plagiarism of Wikipedia is uncommon, can we say the same of plagiarism more generally?

In 2009, the International Journal of Exercise Science began screening submitted manuscripts for originality. They found that a shocking 46% contained plagiarism of some kind. A more recent analysis of several hundred COVID-19 papers published in infectious disease journals between 2020 and 2021 determined that well over 40% were plagiarized, with one paper copying a whopping 85 sentences from another work. By all appearances, plagiarism is a commonly accepted practice among scientists. Any attempt to define misconduct by appealing to consensus is therefore self-defeating.

In summary, the enumerative approaches to defining scientific misconduct are far superior. These approaches give specific examples of objectively deceptive behaviors and give consideration to the possibility of legitimate error. Even so, these approaches will always fall short of capturing every deceptive thing a scientist might think to do with his research. After all, scientists are human beings, and like the rest of us they are not just susceptible to moral failings, but are innovators of them. We should expect to encounter new forms of deception and fraud as researchers learn to avoid the more blatant forms of misconduct found in official lists. In other words, no taxonomy of scientific misconduct will ever be perfect. Scientists should be content with this, as their own discipline involves continually refining and correcting models in light of new realities (predictive outcomes). The goal is not to find absolute certainty but to limit uncertainty wherever possible. With regard to definitions, this means moving from subjectivity, like that in consensus, toward objective, measurable outcomes.

Russell Berger is a contributing writer to the Broken Science Initiative, with a focus on scientific misconduct. Previously, he served as an in-house investigative reporter, lecturer and corporate representative for CrossFit. His work has successfully uncovered scientific fraud and misconduct in the health sciences, both in the United States and internationally.
