Summary

Gerd Gigerenzer's paper criticizes the lack of attention paid to effect sizes and the undue emphasis on null hypothesis testing in research. Despite the American Psychological Association's recommendations, effect sizes are rarely reported, which makes it difficult to compute the statistical power of tests. The paper highlights a 1962 study by Jacob Cohen, which found that experiments published in a major psychology journal had only about a 50% chance of detecting a medium-sized effect; yet this finding did not change researchers' attitudes toward effect sizes. In fact, 24 years later, an even smaller percentage of papers in the same journal mentioned statistical power. The paper also addresses Richard Feynman's assertion that null hypothesis testing is meaningless unless alternative hypotheses are specified in advance, and it condemns retrospectively mining data for significant findings, along with the related practice of 'overfitting'.
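To make the 1962 figure concrete, the sketch below estimates the power of a two-sided, two-sample test for a medium effect. It is only an illustration under stated assumptions: it uses the normal approximation rather than an exact t-test, takes d = 0.5 as the conventional "medium" effect size, and the helper name `two_sample_power` and the sample sizes are hypothetical, not taken from Cohen's data.

```python
from statistics import NormalDist


def two_sample_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample test (hypothetical helper).

    Assumes equal group sizes, a common standard deviation, and the normal
    approximation to the test statistic instead of the exact t distribution.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    # Expected value of the test statistic when the true effect size is d.
    shift = d * (n_per_group / 2) ** 0.5
    # Probability that the statistic falls in either rejection region.
    return (1 - std_normal.cdf(z_crit - shift)) + std_normal.cdf(-z_crit - shift)


# Assumed medium effect (d = 0.5) at a few illustrative sample sizes.
for n in (20, 31, 64):
    print(n, round(two_sample_power(0.5, n), 2))
```

Under these assumptions, about 31 participants per group give roughly a coin-flip chance of detecting a medium effect, and about 64 per group are needed for roughly 80% power. None of this can be computed without first stating an effect size.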

The common practice of fitting models to data after the fact makes any subsequent hypothesis testing on those same data questionable. Overfitting, which means tuning a model so closely to known data that it captures noise along with any real pattern, can produce impressive explained variance, but it ignores how much of that variance is noise, and such models are rarely tested and validated on new data. The paper describes linear multiple regression as a ritualistic application of statistics and suggests that, when models are tested on new data, simple heuristics can sometimes deliver more accurate predictions. The author likens the prevalence of null hypothesis testing to social rituals, asserting that this ritualistic mindset stifles critical thinking about the research process.
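The following toy example, a deliberately extreme sketch rather than anything from the paper, shows why a high explained variance on known data says little about predictive accuracy. The data are pure noise; a hypothetical "memorizer" model reproduces every training point exactly, while a simple model predicts the training mean. All names, the noise distribution, and the sample sizes are assumptions chosen for illustration.

```python
import random
import statistics


def mse(actual, predicted):
    """Mean squared prediction error."""
    return statistics.fmean((a - p) ** 2 for a, p in zip(actual, predicted))


def overfitting_demo(n_points=20, n_new_per_point=50, seed=1):
    rng = random.Random(seed)
    xs = list(range(n_points))
    # Pure noise: y is unrelated to x, so there is no real pattern to find.
    train_y = [rng.gauss(0.0, 1.0) for _ in xs]

    # "Overfit" model: memorizes the observed y at every x.
    memorized = dict(zip(xs, train_y))
    # Simple model: predicts the training mean for every x.
    train_mean = statistics.fmean(train_y)

    # Explained variance (R^2) of the memorizer on the data it was fit to.
    ss_total = sum((y - train_mean) ** 2 for y in train_y)
    ss_resid = sum((y - memorized[x]) ** 2 for x, y in zip(xs, train_y))
    r2_memorizer = 1 - ss_resid / ss_total   # always 1.0: a "perfect" fit

    # New data from the same noisy process at the same x values.
    new_x = [x for x in xs for _ in range(n_new_per_point)]
    new_y = [rng.gauss(0.0, 1.0) for _ in new_x]
    mse_memorizer = mse(new_y, [memorized[x] for x in new_x])
    mse_mean = mse(new_y, [train_mean] * len(new_y))
    return r2_memorizer, mse_memorizer, mse_mean


r2, err_overfit, err_simple = overfitting_demo()
print(f"R^2 on known data: {r2:.2f}")
print(f"error on new data: memorizer {err_overfit:.2f}, mean {err_simple:.2f}")
```

The memorizer achieves an R² of exactly 1 on the data it was fit to, yet on fresh data from the same process its error should typically be around twice that of the simple mean, because everything it "learned" was noise.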

The paper concludes that researchers should abandon mindless adherence to statistical rituals and instead carefully select an appropriate statistical procedure from the “toolbox.” This means paying proper attention to effect sizes, specifying alternative hypotheses in advance, avoiding overfitting, and moving away from stubborn reliance on null hypothesis testing. Gigerenzer argues that statistical theory should be viewed as a set of tools to be applied intelligently and judiciously. He suggests that descriptive data analysis is often more useful than mechanical yes-or-no significance decisions. In his view, challenging the status quo in academic research will promote statistical thinking.

This paper, written by Gerd Gigerenzer, talks about problems with how we use numbers and data in science. It explains that many researchers aren't focusing enough on what really matters: the effect size and statistical power of their experiments. The American Psychological Association says that researchers should always report effect size and statistical power, but they often don't. Without these, it is hard to know whether a result is actually important.
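A minimal sketch of why a p-value alone cannot tell us whether a result is important: the hypothetical helper below applies the 5% rule and also reports the effect size (Cohen's d). It assumes two equal-sized groups with a known, common standard deviation, so a z-test stands in for the t-test that would normally be used; the numbers are invented for illustration.

```python
from statistics import NormalDist


def ritual_vs_effect_size(mean_diff, sd, n_per_group, alpha=0.05):
    """Return (p_value, significant, cohens_d) for a two-group comparison.

    Hypothetical helper: assumes equal group sizes and a known common sd,
    so a two-sided z-test is used as a stand-in for the usual t-test.
    """
    d = mean_diff / sd                        # standardized effect size
    z = d * (n_per_group / 2) ** 0.5          # test statistic for equal n
    p = 2 * (1 - NormalDist().cdf(abs(z)))    # two-sided p-value
    return p, p < alpha, d


# A tiny effect with a huge sample vs. a large effect with a small sample.
tiny = ritual_vs_effect_size(mean_diff=0.5, sd=10, n_per_group=10_000)
large = ritual_vs_effect_size(mean_diff=8, sd=10, n_per_group=10)
print("tiny effect: ", tiny)
print("large effect:", large)
```

Under these assumptions, a trivially small effect (d = 0.05) passes the 5% threshold with 10,000 participants per group, while a large effect (d = 0.8) fails it with 10 per group. Reporting only "significant" or "not significant" would hide this difference.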

In 1962, a study found that experiments published in a major psychology journal had only a 50/50 chance of detecting a medium-sized effect. Even though many researchers know about this problem, they keep following the same mindless statistical procedures.

A famous physicist named Richard Feynman didn't like the way scientists often analyze their experiments. He said that finding something interesting doesn't mean much if we can only explain it after it has already happened. We need to make a prediction first and then check whether we find it.

The paper also warns about 'overfitting.' This happens when we keep adjusting a model until it matches the data we already have very closely, including the random noise, and then make up stories to explain what we found. This isn't a good way to test ideas.

The paper also says that we should use new data to check whether our ideas are right or wrong. Instead, scientists often rely on complicated statistics fitted to the data they already have. Sometimes, a simple analysis works better than a complicated one.

When scientists analyze the results of their experiments, they often perform a ritual (like following a recipe to bake a cake), where they follow the instructions without really thinking about what they're doing.

The paper suggests that we should treat statistics (the science of learning from data) as a toolbox, and pick the right tools based on what we need. We should teach students this so they can think carefully and make smart choices. Sometimes, a simple tool is the best one for the job.

Lastly, the paper teaches that we should not just blindly follow the rules in statistics. We need to focus on effect size, make a prediction before running an experiment, avoid making up stories after an experiment, and stop blindly following standard procedures such as null hypothesis testing. We need to carefully choose the best statistical approach for the problem being studied. To make these improvements, researchers need to be brave and question the way things are done, even if it might upset some people.

--------- Original ---------

"Statistical rituals largely eliminate statistical thinking in the social sciences. Rituals are indispensable for identification with social groups, but they should be the subject rather than the procedure of science. What I call the “null ritual” consists of three steps: (1) set up a statistical null hypothesis, but do not specify your own hypothesis nor any alternative hypothesis, (2) use the 5% significance level for rejecting the null and accepting your hypothesis, and (3) always perform this procedure."

This paper by Gerd Gigerenzer talks about problems in how we use statistics in research. It says that many researchers aren't paying enough attention to effect size, which tells us how strong an experimental result is. The American Psychological Association (APA) recommends that effect sizes always be reported, but often they aren't. Without an estimate of the effect size, it's impossible to calculate the statistical power of an experiment. A 1962 study by Jacob Cohen found that experiments in a major psychology journal had only about a 50% chance of detecting a medium-sized effect. Although many people know about this issue, it hasn't really changed how researchers do their work.

The famous physicist Richard Feynman criticized how we often analyze the results of experiments. He pointed out that finding something that looks significant and passes a statistical test doesn't mean much if we didn't make a prediction to start with. Otherwise, we are just making up a story after the fact.
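The simulation below sketches the problem with hypotheses formed after looking at the data. Every comparison is run on pure noise, so the null hypothesis is always true, yet about 5% of comparisons still pass a test at the 5% level. Anyone who scans many comparisons and then builds a story around the hits will almost always "find" something. The helper name `dredge`, the use of a z-test with a known standard deviation, and the counts are assumptions for illustration.

```python
import random
from statistics import NormalDist, fmean


def dredge(n_comparisons=1000, n_per_sample=30, alpha=0.05, seed=7):
    """Run many z-tests on pure noise and count how many look 'significant'.

    Hypothetical helper: each sample comes from a normal distribution whose
    true mean is 0 and whose standard deviation (1) is assumed known.
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    hits = 0
    for _ in range(n_comparisons):
        # The null hypothesis (true mean = 0) is true for every sample.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n_per_sample)]
        z = fmean(sample) * n_per_sample ** 0.5   # known sd = 1
        p = 2 * (1 - std_normal.cdf(abs(z)))      # two-sided p-value
        if p < alpha:
            hits += 1
    return hits


print(dredge(), "of 1000 comparisons on pure noise were 'significant'")
```

Because these hits are expected by chance alone, a result found this way has not tested any prediction; it would have to be checked against new data.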

Gigerenzer also warns about “overfitting.” This is when we keep analyzing and adjusting our models until they fit the data we already have, and then make up stories to explain what we found. A model that fits existing data well has not necessarily been tested well. Very complicated statistical procedures can fit the random noise in the data along with any real pattern, so sometimes a simple statistical analysis is best. Even better is testing an existing model on new data.

The paper describes how researchers often analyze their experimental results as if they were performing a ritual. Instead of thinking carefully about what they are doing, they blindly follow a procedure. They are eager to find a small p-value, because it often determines whether they can publish their results. Most scientists are taught that a small p-value (typically below 0.05) makes a result “significant,” but even Ronald Fisher, who popularized significance testing, advised against applying a fixed significance level mechanically.

The paper suggests that we should teach students to think of statistical theory as a toolbox, where different tools should be used depending on the situation. Sometimes, a simple and clear data analysis is not only sufficient, but better. To fix this widespread problem, researchers need to be brave and challenge the existing methods, even if it might upset some people.

In the end, Gigerenzer advises that we shouldn't just follow rituals in statistics without thinking. Instead, we should always report effect sizes, make predictions before running experiments, not make up stories to fit the data, and not blindly conduct null hypothesis tests. He emphasizes that we should use a wider range of statistical procedures and carefully select which ones are appropriate for each experiment. Gigerenzer calls this “statistical thinking” and encourages more researchers to try it.

--------- Original ---------

"Statistical rituals largely eliminate statistical thinking in the social sciences. Rituals are indispensable for identification with social groups, but they should be the subject rather than the procedure of science. What I call the “null ritual” consists of three steps: (1) set up a statistical null hypothesis, but do not specify your own hypothesis nor any alternative hypothesis, (2) use the 5% significance level for rejecting the null and accepting your hypothesis, and (3) always perform this procedure."Let's start with the truth!

Support the Broken Science Initiative.

Subscribe today →

recent posts

Expanding Horizons: Physical and Mental Rehabilitation for Juveniles in Ohio

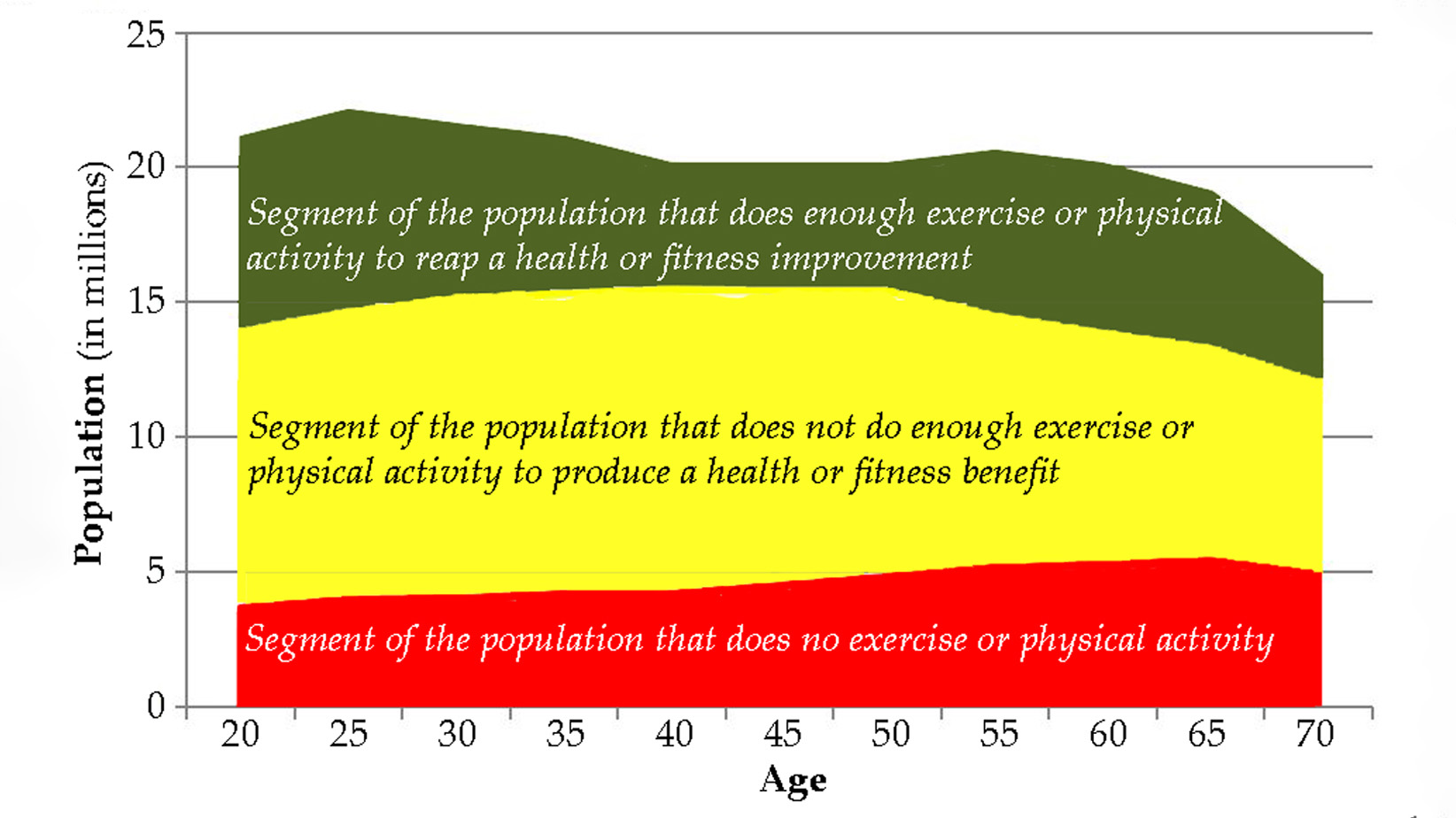

Maintaining quality of life and preventing pain as we age.