Greg Glassman kicked off the 2024 BSI Epistemology Camp with this presentation. Greg’s talk centers on the ‘breaking point’ between modern science and postmodern science. Condemning the rise in popularity of philosophers of science like Karl Popper, Paul Feyerabend, Thomas Kuhn, and Imre Lakatos, Greg praises their critics, such as E.T. Jaynes and David Stove. Greg calls out the misuse of statistical methods like P-values and advocates for a deeper understanding of probability theory.

Greg also highlights the need for individuals to protect themselves from scientific misconduct and corruption within science. He offers no solution to the systemic problem, but rather education that arms any individual against its pitfalls.

Greg’s presentation here was the first of several in a two-day event in early March, 2024. Look for the other talks and subsequent discussions on the BSI website and YouTube channel in the coming weeks.

Transcript

EMILY KAPLAN

Welcome to Epistemology Camp. We are really excited to have you all here, and hopefully you’re going to leave this weekend with a sharpened idea of how to think critically and look at the world. I just want to stop for a second, because I said to Greg that there may not be another gathering of people happening in the world today with this kind of collective brainpower. This audience is ramped up when it comes to intellectual capabilities, and that is not an accident. We hand-selected each one of you because of your ability to think critically and understand complex material, which we’re going to expect of you today. But we also have complete confidence that you’ll be able to master the material, go back to your communities, and share it with other people, because that’s really what this project is all about. Once you have this information, you’ll start to see that you read the news differently, talk to your doctor differently, process information differently, and, we hope, make far better decisions. The ultimate goal, in some ways, is to protect yourself from the vulnerability that seems to be lurking everywhere because of the corrupted nature of science, and then, by extension, in all kinds of other facets of how we navigate our lives.

So in the last, you know, sort of nine months or a year, we have seen so much tumult in the scientific community, and I don’t know whether that’s because we’re getting better at assessing these problems or there are just far more of them. But in any case, as an individual, it greatly behooves you to educate yourself so that you can identify those kinds of problems.

Last year we met at the Biltmore Hotel and had a great event. We launched with a flash; some of those speakers are here today.

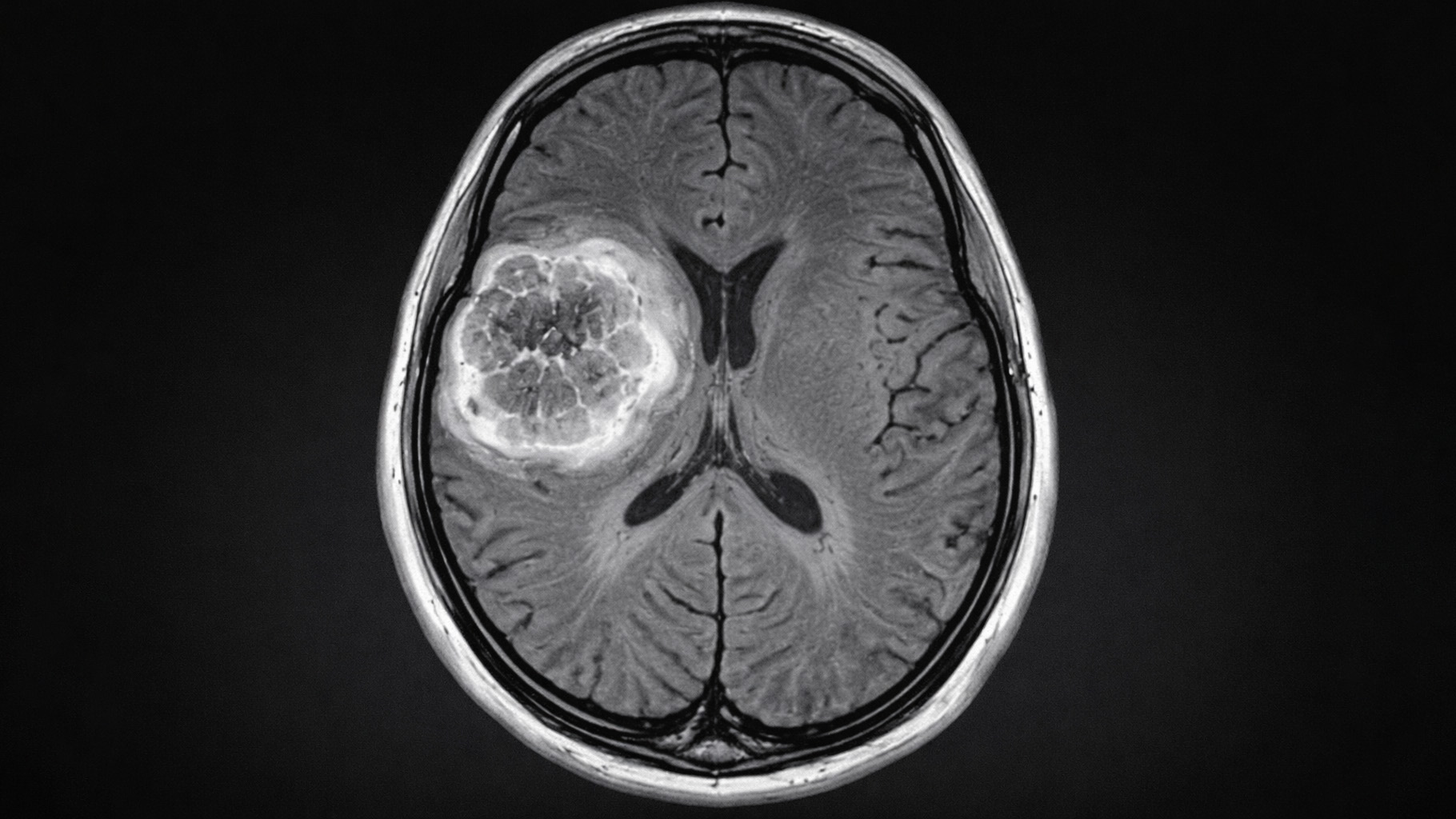

One of the things that I talked about last year was an Alzheimer’s scandal that had really rocked that community, where we found out that researchers had been photoshopping images. Science magazine exposed those problems, and it was sort of unbelievable to think that some of the groundbreaking research in Alzheimer’s had been photoshopped and that all subsequent research had been relying on it. So here we are a year later, and we’ve had a very similar scandal in Boston at the Dana-Farber Cancer Institute, where researchers have been manipulating images: copying and pasting images from day one (this isn’t even really high-tech) and then putting them later in the treatment group, as though to say these tumors didn’t grow.

Now that’s mind-blowing to imagine that this stuff is happening at places like Harvard, and at the same time, we have lost both Stanford’s president and Harvard’s president within a year to charges of scientific misconduct. Now, if you had told me 10 years ago that we would lose those two presidents to scientific misconduct within a year of each other, I would have laughed. I would never have believed it.

Now the big difference this year is that our little team at Broken Science has been breaking some of those Dana-Farber stories. We’ve been telling them, and I think we’ve been doing a far better job than any of the mainstream news outlets. So I couldn’t be more proud of our team for doing really in-depth research quickly and sharing that information through our newsletter, which is growing by leaps and bounds, probably because of all their hard work, and on all of our channels.

So I think in that way, Broken Science is already having a pretty profound impact on the space, and we will only continue to grow from there. The need for Broken Science has never been greater. You guys are all here because we know the power of the individual is going to be far greater than trying to go through policy changes or any of the other avenues that you might traditionally try.

Independently, Greg and I have been looking at health, science, and corruption for a couple of decades, and in the last four years, we’ve been able to dive much deeper into these problems and really look at the philosophy of science.

Many people point to what I would call the outputs of this break: things like peer review, the replication crisis, and the fact that more money flows from Pharma into Congress to influence policy than from any other industry. We have ineffective drugs. We’re overprescribing dangerous drugs unnecessarily. But as far as I know, the Broken Science Initiative is the only group that has gone back and recognized that none of those problems is the root cause, because they’re not. We’ve gone back and traced through history and through the philosophy of science where the break happened and why.

I honestly don’t think there’s any other collection out there of people who understand this or are able to share it the way that we’re hoping to. So that’s really exciting, and you guys are all part of this.

I also think it’s important to recognize where we are today. Last year we were at this fancy hotel, and today we’re in a construction site. It’s a little different, but the truth is that this gym represents a lot. It was a dream of Greg’s as a kid to build his own house, and here he is doing it. But the gym is also symbolic, because Greg’s foray into all of the broken science stuff started when he began applying the lessons he’d learned from his father about science to the fitness realm.

Things like defining terms, measuring things, making sure you can repeat your work—that was non-existent in fitness until Greg came in. And he revolutionized the space because of it. He revolutionized the space… and created some enemies. So, the NSCA was the main competitor to CrossFit, and they used a peer-reviewed journal to publish fake data that said that Greg’s methodology caused injuries. Greg immediately recognized that the data was fake, so he sued, and he won. A federal judge called it the largest case of scientific misconduct and fraud she’d ever seen.

That led him to a real interest in the ills of modern medicine and how these tools were being used for private interests. And from there he’s just gone upstream and is now looking at the whole thing in totality. He has recognized that predictive value has left the space, and that the frequentist approach we see so pervasively in science today, especially in medicine, is what has led to all of these problems.

So, without further ado, Mr. Glassman.

[Applause]

Thank you.

GREG GLASSMAN

I’ve become that guy with a big stack of papers and books.

Can you believe it? Look where you’re at.

I’ve got a lot of good friends here, because I don’t know how else you get someone to come to something you’re calling Epistemology Camp. So the mariachi is going to have to be really good, and the tacos really good, to make this a bearable thing.

I watched my father talk on P-values in Madison, Wisconsin, five or six years ago. He delivered what should have been a 15-hour lecture in 45 minutes, and we were all looking at each other wondering what had happened. It’s a rough subject. It’s a hard thing to get people interested in, and it’s a hard thing to describe. But I’m not discouraged. If you do a lot of seminars, and I’ve done a lot of seminars, and you speak to crowds long enough, you learn that there’s an inherent tension in any delivery. You want to get a lot of information across, but how much of it sticks matters. You can deliver a high load with low retention: everyone writes furiously and walks away with 13 pages of notes, not sure they heard anything. I’ve had that experience, and I’ve delivered that to people before.

In fact, the seminars we did over time became simpler and simpler. That was all driven by the realization that what you need to do is give enough information to pique some interest or enthusiasm and, if possible, make the thing inspirational enough that there’s further inquiry and interest. That’s what you’ve got to do.

So you might even boil it down to this: if there’s only one word that everyone picked up, what would it be? Here it would be ‘prediction.’ If I were given two words, I’d say ‘science predicts.’ If you give me a third, I’d say ‘science is prediction.’ And if you give me a fourth, I’m going to go all out and say ‘science is successful prediction.’ Add another word: ‘science is uniquely successful prediction.’ We’ll show you tomorrow; we’ve got some cool artwork. I’ve got a whole list of things that have promised to foretell the future, to predict.

It’s a crazy list. I don’t want to spoil it, but there’s a lot of fun stuff in there. On that list I have ‘modern science,’ and I have ‘consensus science.’ ‘Consensus science’ has failed to predict and is thus non-replicable, while ‘modern science’ can and will predict, which gives us a reason to talk here.

Let’s define some terms. When I speak of science without any kind of modifier, I presume I’m talking about modern science, the stuff that does replicate, the stuff that has been so successful. Science that has its origins perhaps from Aristotle to Bacon to Newton and forward.

The term Broken Science isn’t mine, and I always thought at one of these someone would ask, “What do you mean by science?” and “What do you mean by broken?” I didn’t pick the terminology. It came from semi-popular media (Reason magazine: is that mainstream media? Almost, yeah). Ronald Bailey wrote a great piece on the replication crisis, and I’d read a lot on the replication crisis, and in the mainstream and popular media it was frequently referred to as broken science.

And then, of course, there’s the replication crisis, another term that received considerable attention. But the funny thing about a replication crisis is that it’s kind of like the cops knocking on the door and you finding out your son’s been jacking up liquor stores: now you have a behavior crisis, you know? It didn’t start there, right? There’s something else going on.

There is something going on within the replication crisis beyond the replication. What I’d like to leave you with, if I could be so fortunate, is this: I’d like you to leave at the end of today and tomorrow realizing that there is no rational expectation of replication given the approach. There’s no rational expectation of replication. I understand the hope, the desire, and the need, and I understand that if you’re conducting modern science, let’s say you’re working at SpaceX, where things have to work, that’s a different kind of scenario than others.

It’s broken in that it won’t replicate, but the break itself is epistemological, and where I think much of the conversation gets stilted is that we’re not defining what it is that got broken. So I’m going to do that here today. I’m going to give you a quick look at what science is, and I think that will make it really easy to see the nature of the break.

There are three influences, and I’ll go over these in more depth later. My father, Jeff Glassman, was head of research and development, internal research and development, at Hughes Aircraft Company and had a whole career there. I’m an aerospace product as much as any rocket or missile. The local bowling alley was Rocket Bowl, and our local park was Rocketdyne. All my friends’ parents worked for Grumman, Raytheon, Teledyne, Hughes, Boeing, Lockheed, Martin Marietta, and you’d be out on the playground, and you’d go, “His dad works with his dad, and they do suitcase nukes.” And they’d say, “Well, you’re not even supposed to know that,” and I’d go, “Well, I know because your dad used to work with Tom’s dad, and Tom’s dad said everyone in their department went over to Hughes and is working on the suitcase nukes.”

You know, this is playground gossip in elementary school, but I’ll tell you what I got out of it. I sure as hell learned what science is. And by the way, my father maintained that science is one of the few disciplines that must know what it doesn’t know and cannot know. It is bound to be aware of its limitations. For this reason, he said there’s no possible conflict between religion and science. They don’t operate on the same planes, and where you see conflict, you’re misunderstanding, perhaps both.

So, the three influences: Jeff Glassman, we call him the Science Bear. I know there are some people in here who have had direct exposure to the Science Bear. Another one of the things that my dad got me to do, and by the way, I made my mark in physical education because recess was the part of school I liked. And when your dad’s the Science Bear, you probably can’t get further away from him than on the playground, right?

I wanted to go outside and play, and at 45 years old I found myself a trainer in a gym, actually wondering what I was going to do when I grew up. And it kind of hit me: you grew up, and you’re doing it. Then I started to think about it in a little more detail. I don’t know where it came from, but I decided that I should turn observations into measurements, make predictions, and have them validated on the basis of their success. What came out of that was: constantly varied high-intensity functional movement increases work capacity across broad time and modal domains.

And that simple little truth gave me multigenerational wealth. So I might owe something to the old man for the lessons I hid from and then took to a place where they had found no application. It worked out pretty damn good for me, and I’d like to give back.

My dad said that academic science was so bad you have to read David Stove to really appreciate how bad it is. And I fought that with everything I had. After I had academics commit full-scale… In fact, the academic response to “constantly varied high-intensity functional movement increases work capacity across broad time and modal domains” was felonies, in the form of perjury, and a chain of emails where they suborned the falsification and fabrication of data. And we got our hands on the emails. Imagine the shame in that.

Sock-puppet reviews in the peer-review process. It was all as bad as it could possibly be. And that kind of stimulated me.

I mean, you spend $8 million chasing down shitty academic science. Might ought to take a look at the books my dad was telling me to read. And I did. I went through David Stove. I’ll confess, it took me a while. These three books, I think six, seven years, probably two, three hours a day, over and over and over again. With each reading, I got more out of them. We’ll come back to that.

The Broken Science crew actually owns the rights to this book, and I think it’s one of the most powerful things ever written on the philosophy of science. One of our speakers here actually worked with and was friends with David Stove. What an honor. Anton, what an amazing thing to have done. These are dead guys, and we’ve got some people here who worked closely with these dead guys. It’s good to know you while you’re here. When I was done with Popper and done with David Stove, I felt quite confident that science is grounded in probability theory. Stove convinced me of that. What that meant from there, or what the implications were, I wasn’t sure.

I was also wonderfully convinced that there would be no value in reading Popper, Kuhn, Feyerabend, and Lakatos, the mainstream of philosophy of science. But I did anyway, and I read them with that jaundiced view, looking for the “shitty parts” that David Stove said I would find. And indeed I did find them. There they were. But this book is so cool. There are two sections and five chapters, increasing in complexity, and you’re given reasons not to even look at that material.

The first reason I’ll mention is the sabotage of logical expressions: things like putting quotes around words like “prove” and “truth.” Stove likened it to “fresh” fish, with quotation marks around “fresh.” It’s even worse than that, because what you know is that something other than what we all think the word means is intended. So now I have to project myself into the reading. Don’t do that, unless it’s for fun. That’s not what good reading should look like. That’s not what non-fiction should look like. There should be an assumption that the words mean what we all presume them to mean. When you start throwing quotes around stuff, it gets off base quickly.

But I came away convinced of the foulness of academic philosophy of science. And I’m going to mention here, I talk about academic science, and I know Gerd has always been very careful to exempt the natural sciences from this game. So this is less true of chemistry, pretty much not true of physics, and to a lesser extent biology and the other natural sciences. The social sciences and medicine have been grievously wounded by this bad science.

Once I was convinced that science found its grounding in probability theory, it hit me one afternoon that if that indeed was the case, I should be able to go over to the probability theory side of the world and find someone who thinks that science grounds in probability theory. It was an easy thing to do. You go to Google Scholar and start pulling up graduate texts in probability theory. There are seven or eight. You know you’re at the right place because the book’s $200. So I ordered all that I could find that seemed to be in regular use.

One of them was E.T. Jaynes’ “Probability Theory: The Logic of Science,” and boy, if a cover title was ever promising, that one was. I’d looked things up in the other books, but it was in this one that I looked up David Stove and Karl Popper, and I knew I was home. It’s been, what, four years that I’ve had these books, and it’s a struggle.

I can’t say I’ve read it yet, but I can tell you on what page a lot of things are, and I’m making progress. It’s an incredible work, because what E.T. Jaynes accomplished was a method for the optimal processing of incomplete information. Fair enough, Anton? Close?

Larry Bretthorst, the editor of the book, who was a student of Jaynes and is also a friend of Anton’s, said that Jaynes was the first to realize that probability theory as used by Laplace was a generalization of Aristotelian logic that reduces to deductive logic in the special case where our hypotheses are ones or zeros. There’s a hell of a lot there. We could do a seminar on that alone. But the profundity of it is amazing, and anyone in the education business, anyone teaching children logic, needs to realize the potential of using probability theory, through plausible reasoning, as a vehicle to introduce kids to that generalization.
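Bretthorst's point can be made concrete with a few lines of Python. This is a minimal sketch of our own, not Jaynes's notation or code, and the function names are hypothetical. It encodes "if A then B" as P(B|A) = 1 and uses only the sum and product rules of probability. With probabilities of exactly one or zero, the rules reproduce modus ponens and modus tollens; with values in between, observing B only makes A more plausible, the "weak syllogism" Jaynes discusses in his opening chapter.

```python
from fractions import Fraction


def prob_b(p_a: Fraction, p_b_given_a: Fraction, p_b_given_not_a: Fraction) -> Fraction:
    """Sum and product rules: P(B) = P(B|A)P(A) + P(B|not A)P(not A)."""
    return p_b_given_a * p_a + p_b_given_not_a * (1 - p_a)


def prob_a_given_b(p_a: Fraction, p_b_given_a: Fraction, p_b_given_not_a: Fraction) -> Fraction:
    """Bayes' theorem: P(A|B) = P(B|A)P(A) / P(B). Requires P(B) > 0."""
    return p_b_given_a * p_a / prob_b(p_a, p_b_given_a, p_b_given_not_a)


def prob_a_given_not_b(p_a: Fraction, p_b_given_a: Fraction, p_b_given_not_a: Fraction) -> Fraction:
    """Bayes' theorem for a denied consequent: P(A|not B) = P(not B|A)P(A) / P(not B).

    Requires P(not B) > 0.
    """
    p_not_b = 1 - prob_b(p_a, p_b_given_a, p_b_given_not_a)
    return (1 - p_b_given_a) * p_a / p_not_b


if __name__ == "__main__":
    certain = Fraction(1)  # "if A then B" is encoded as P(B|A) = 1
    half = Fraction(1, 2)  # an arbitrary, illustrative value

    # Modus ponens: A is certain, so B comes out certain.
    print("P(B) given A:", prob_b(certain, certain, half))           # 1
    # Modus tollens: B is observed to be false, so A drops to zero.
    print("P(A | not B):", prob_a_given_not_b(half, certain, half))  # 0
    # Weak syllogism: observing B only raises A's plausibility, from 1/2 to 2/3.
    print("P(A | B):", prob_a_given_b(half, certain, half))          # 2/3
```

Exact `Fraction` arithmetic is used so that the deductive cases come out as exactly 1 and 0 rather than floating-point approximations.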

And that journey can begin on your first exposure to algebra. We can be off to the races.

Another thing I got from David Stove came from an essay in a collection edited by Roger Kimball, “Against the Idols of the Age.” There’s an essay in there, “Cole Porter and Karl Popper: The Jazz Age in the Philosophy of Science.” Anton told me the day before yesterday that he thinks that essay sums up Stove brilliantly. I hadn’t really considered that, but I have to agree. It’s fantastic. One of the things in there that really caught my eye was his discussion of the quality of writing on science, whether from John Stuart Mill, from others I hadn’t heard of, or in the popular media.

He said that it was boring and superfluous; it’s not that any of it was really wrong, but it went to too great a length to say next to nothing, and it’s hard to read. He likened it to old rice pudding, I think. But then he goes on to explain the reason for that: by, say, 1850, the average person had seen enough science, enough progress, enough technology, enough change, that a healthy philosophy of science comes about quite naturally. In fact, it is something that any “drongo” could be taught. And I apologize for the Australianism.

I’ve actually got a “drongo” here. Where are you mate? Stand up.

Yes, I didn’t know what a “drongo” was. I looked it up, and it’s a fool or an idiot. It’s also a bird. As soon as I saw Daz, I invited him here. I go, “Daz, I’ve got to know: what’s a drongo?” He goes, “It’s like a dickhead, mate.” And I go, “Isn’t it a fool?” He goes, “Yeah, it’s a fool too.”

I think my dad would have called me a drongo if we lived in Australia because I just wanted to do recess, right? I wanted to do PE. But I like that.

I like that even a drongo would understand it, and so that gave me hope because I think I could therefore explain it to you. It should be that easy. It should be that simple. And I think we can do it.

I was going to give myself an hour, and I even planned to waste time and get sidetracked. Because if it’s something that a drongo could get, it shouldn’t take but 5-10 minutes to do, right?

And we’re going to do it. It’s sorely needed. Much of the talk around broken science, and everything that’s going on in the meta-science world, is in sore need of someone to stand up and say, “Why don’t we define what it is that’s broken?”

We all see the problems, and nobody likes that the science won’t replicate, but the system that produced the science that won’t replicate doesn’t even have the vocabulary, the standards, or the methodology to expect replication. It’s not in the P-values, as we’re going to learn. It’s not in null hypothesis significance testing.

So what is science? You ready? Time me.

It’s the source and repository of man’s objective knowledge. Source and repository of man’s objective knowledge. It silos in models. These models are graded and ranked by their predictive strength. Their validation comes solely through their predictive strength, and that grading and ranking has them as conjecture, hypothesis, theory, and law.

A hypothesis is a model that is based on all of the data in its domain, with no counterexamples, and that makes a novel prediction yet to be validated by facts. When we run an experiment and get validation, even a single datum, I can now call it a theory and explain why: because of the predictive success in my experiment.

It’s an enormously creative process. Coming up with the models themselves and the experiments that test them is some of the most creative stuff man has ever done. Let’s talk about the process itself. It’s excitingly simple. It starts with observations.

An observation is a registration of the real world on our senses and sensing equipment. When we tie it to a standard scale with a well-characterized error, it becomes a measurement. We also, at that point, can call it a fact.

If I map that fact or measurement to a future, unrealized fact, in something that looks like a forecast of a measurement, I have a prediction. Its validation comes from its predictive strength, which sits bracketed between zero and one, with soft brackets that include neither the zero nor the one. That validation is entirely independent of method.

However you came up with whatever it is, E = mc², it doesn’t matter. You could have seen it in the pattern in the toilet paper or in a bad dream. Whether by perspiration or inspiration, the validation depends entirely on the predictive strength. That is the probability of the hypothesis, bounded by zero and one.
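As an illustration of grading a model by its predictive strength, here is a minimal sketch under assumptions that are ours, not the talk's: the model H competes with a single rival, H forecasts each outcome correctly with probability 0.9, and the rival with probability 0.5 (both numbers hypothetical). Each checked prediction updates P(H) by Bayes' rule. Successes push P(H) up, a failure pulls it down, and it never reaches zero or one.

```python
def update(prior: float, like_h: float, like_rival: float) -> float:
    """One application of Bayes' rule: P(H | datum) against a single rival model."""
    numerator = like_h * prior
    return numerator / (numerator + like_rival * (1.0 - prior))


def grade(prior: float, outcomes: list[bool], hit_h: float = 0.9, hit_rival: float = 0.5) -> list[float]:
    """Track P(H) as each prediction is checked against a new measurement.

    hit_h and hit_rival are hypothetical probabilities that H and its rival
    assign to the predicted outcome; True in `outcomes` means it occurred.
    """
    history = [prior]
    p = prior
    for hit in outcomes:
        like_h = hit_h if hit else 1.0 - hit_h
        like_rival = hit_rival if hit else 1.0 - hit_rival
        p = update(p, like_h, like_rival)
        history.append(p)
    return history


if __name__ == "__main__":
    # Three successful predictions, then one failure.
    for step, p in enumerate(grade(0.5, [True, True, True, False])):
        print(f"after {step} checked predictions: P(H) = {p:.3f}")
```

Starting from 0.5, three successes raise P(H) to roughly 0.85, and the single failure drops it back to roughly 0.54. Only the predictions enter the update; how H was dreamed up plays no role, which matches the point that validation is independent of method.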

That’s pretty straightforward, isn’t it?

The drongo in me hid from that. I applied it to fitness and got rich. To PE! Hiding out on the playground.

I want to tell you that there is no rational basis for trust in science that isn’t based on that prediction. And in fact, you don’t have to think too hard to realize that all trust is predicated on prediction.

I could find studies that show… you have to laugh at this, right? But I did. I found studies that showed that predictability is the cornerstone of trust. It makes sense to me. When we trust things that don’t have predictive strength, maybe it looks like faith, right? It doesn’t have to be a positive thing.

There are people that I would trust to steal my money. Where did the $50 go? Jim was here. Well, now I know where the $50 went. That’s trust. It’s predictability.

I did it. Does that sound like a definition of science? It works. If it doesn’t work, if there’s something wrong with it, we need to let some scientists know. Because I tell you what, from getting light to lase to millimeter-wave radar systems to deep space exploration…

I worked at Hughes for a while. Imagine that. Did anyone here know that I worked there for three years? That wasn’t my place. While I worked there, 40% of the satellites in orbit ended up being our satellites. I knew guys who worked on warheads, propulsion guys, acoustics people, radar people, laser people. Fascinating. And this is how they did science.

Now, what’s really interesting is that many of them couldn’t say what I just told you. And I’ve seen my father do that with scientists, with new hires, with PhDs from Caltech.

He’d get them in front of a room and play the game with them: “Define science for me.” He writes down anything offered. And when they’re done, he puts up astronomy and astrology and goes, “I’m an astrologer, and I’m going to claim everything that you’ve put here. Until you can come up with the predictive strength of your models, you can’t make the discrimination.”

And by the way, Kuhn threw that at Popper over his demarcation by falsification, which gets us right to the break. You could almost start there. But I think that was a red herring. I think what Popper really wanted to do with falsification was run modus tollens down to the end zone. Modus tollens: if P then Q; not Q; therefore not P. Remember that? That’s the deductivist attempt, and it’s where Popper started.
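A brute-force truth table shows why the deductivist route is lopsided. The sketch below is illustrative only, and its names are hypothetical. It checks every assignment of P and Q. Modus tollens is valid, so a failed prediction refutes deductively, but the mirror-image argument, "if P then Q; Q; therefore P," is the fallacy of affirming the consequent, so a successful prediction can never deductively prove the model.

```python
from itertools import product
from typing import Callable

Prop = Callable[[bool, bool], bool]


def implies(p: bool, q: bool) -> bool:
    """Material conditional: 'if P then Q' is false only when P is true and Q is false."""
    return (not p) or q


def is_valid(premises: list[Prop], conclusion: Prop) -> bool:
    """Valid means no row of the truth table makes every premise true and the conclusion false."""
    for p, q in product([True, False], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            return False
    return True


# Modus tollens: if P then Q; not Q; therefore not P.
MODUS_TOLLENS = ([implies, lambda p, q: not q], lambda p, q: not p)
# Affirming the consequent: if P then Q; Q; therefore P.
AFFIRMING_CONSEQUENT = ([implies, lambda p, q: q], lambda p, q: p)

if __name__ == "__main__":
    print("modus tollens valid?", is_valid(*MODUS_TOLLENS))                    # True
    print("affirming the consequent valid?", is_valid(*AFFIRMING_CONSEQUENT))  # False
```

The counterexample row for affirming the consequent is P false, Q true: the prediction came true, yet the model that made it is false.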

The true demarcation between science and non-science is the predictive strength of models. Predictive strength of models. It is not falsification. You could make an argument for falsification being a requirement for a meaningful assertion. I believe A. J. Ayer did exactly that. I’ve dug through my Ayer and I’m having trouble finding it, but I knew of falsification from Ayer: if you couldn’t conceive of an experiment that would show an assertion false, one that would cause you to change your mind, you weren’t in the space of meaningful assertions.

Any questions?

Let’s talk about the break. I just mentioned it.

What happened was that Popper, Kuhn, Feyerabend, and Lakatos, all quite different, held on to the inductive skepticism they got from Hume. Hume said…

I’ve got another definition to make this easy too. Anyone want to throw out a definition of induction? I have found them all to be tedious and near useless except for one I got from Martin Gardner. Forget “the specific to the general” and “the observed to the unobserved.” That doesn’t help me. What helped me, and I saw this in the early ’70s from Martin Gardner in Scientific American, is that induction is where the conclusions follow from the premises with probability, and in deduction they follow with certainty. That will serve you well. That will serve you well.
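Gardner's definition can be illustrated with Laplace's rule of succession, a classic example we have chosen; the speaker does not cite it. Assuming a uniform prior on an unknown success rate, after s successes in n trials the probability that the next trial succeeds is (s + 1)/(n + 2). The premises, an observed record, support the conclusion only with probability, and that probability never reaches one.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace: P(next trial succeeds | s successes in n trials), uniform prior on the rate."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return Fraction(successes + 1, trials + 2)


if __name__ == "__main__":
    for n in (0, 1, 10, 1000):
        # An unbroken record of n successes out of n trials.
        print(n, rule_of_succession(n, n))
```

An unbroken record of 10 successes gives 11/12, and 1000 successes gives 1001/1002. The conclusion grows more probable with evidence but never becomes certain, which is the line between induction and deduction drawn above.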

I’m on good ground here, Matt? That’s my math teacher.

I met Matt much the way that I found Jaynes. I said if Stove is right, there’s got to be someone in probability theory that’s seeing what I’m seeing. I’ll tell you tomorrow; I’ll fill in details. But let me tell you how I found William Matthew Briggs.

I put into Google, “If the baby cries, we beat him. We don’t beat the baby; therefore, he doesn’t cry.” And Matt came up on top. I found him through an oblique child-beating reference.

And I knew what it was: it seems to be a bug in the logic. And I thought, who else is talking about this kind of stuff, and do they know about Jaynes? And boy, did they, and about David Stove and everything else. I think we’re all in the room together here today.

For Popper, Hume’s inductive skepticism became an outright denial. And it was kind of sad, because I think Hume left them holding the bag: after his death, in the dialogues, we find out that it was a juvenile affectation. I always interpreted it that way too; I didn’t think he seriously meant it. It’s also been attributed to him that anyone who truly denied induction would be mentally ill, and others have pointed out that there would be a kind of craziness to that.

But what someone needed to say to David Hume long ago was, “Yes, nothing can be determined from induction with certainty. It happens with probability. Thank you, and sit down.” And we could have avoided a lot.

Even the inference system, and I don’t want to get into Gerd’s space here, but look at the inference system relied on for support. Look at what’s in most of these studies that won’t replicate. Often there’s an alternative hypothesis, a scientific hypothesis that’s not clearly stated but implied, right? Then we do the magic on the null with the data, get the low P-value, and say, “Therefore, we found a causal relationship, and my invisible hypothesis is true.” It creates crazy uncertainty. It’s wrong. What they’ve done is put themselves on the probability-of-the-data side of the fence, while many of them, most of them, will deny that the probability of a hypothesis has meaning.

You can pull that right from MIT’s OpenCourseWare introductory courses on probability theory, and they’ll tell you that frequentists don’t assign probabilities to hypotheses: a proposition’s value is simply zero or one, true or false.

And we see that when we play this game with the P-value and get the magic number. Then what do we do? We attribute a zero or a one to the thing, and we’re back in the truth-table world again. It’s the wrong place to be. We know John Ioannidis is barking up the wrong tree just from the title “Why Most Published Research Findings Are False.” There could have been so much more hope for what he’s done, and for meta-science, if the title had been “Why Most Published Research Findings Are Improbable.”
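The gap between the probability of the data and the probability of the hypothesis can be shown numerically. This is a hedged illustration with made-up data, not a result from the talk: a coin is flipped 100 times and lands heads 61 times. The two-sided P-value under the null "the coin is fair" is computed exactly. Then, assuming 50/50 prior odds and a rival hypothesis whose bias is uniform on [0, 1] (both assumptions are ours, and the numbers shift with them), Bayes' rule gives the probability that the coin is fair.

```python
from math import comb


def two_sided_p_value(heads: int, flips: int) -> float:
    """P(a result at least as far from flips/2 as `heads` | fair coin), exact binomial."""
    distance = abs(heads - flips / 2)
    extreme = sum(comb(flips, i) for i in range(flips + 1) if abs(i - flips / 2) >= distance)
    return extreme / 2 ** flips


def prob_fair_given_data(heads: int, flips: int, prior_fair: float = 0.5) -> float:
    """P(fair coin | data) against a rival whose bias is uniform on [0, 1] (an assumption)."""
    like_fair = comb(flips, heads) / 2 ** flips  # P(data | p = 1/2)
    like_rival = 1 / (flips + 1)  # P(data | p ~ Uniform(0, 1)), the same for every head count
    numerator = like_fair * prior_fair
    return numerator / (numerator + like_rival * (1 - prior_fair))


if __name__ == "__main__":
    heads, flips = 61, 100
    print(f"p-value        = {two_sided_p_value(heads, flips):.3f}")
    print(f"P(fair | data) = {prob_fair_given_data(heads, flips):.3f}")
```

Here the P-value is about 0.035, below the conventional 0.05 threshold, yet the probability that the coin is fair given the data is about 0.42. A small P(data | null) is not a small P(null | data), let alone a large probability for the unstated alternative.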

I get asked, “How are you going to fix this thing?” I was expected in the fitness space to fix the world’s health and fitness. I had no illusions of anything like that. In fact, it’s kind of a scary thought because I’d have had nothing to do. We’re not going to fix this.

I’ll entertain any… I’m sure someone’s got an idea and I’ll get, “Hey, I know what we’ll do.” You know, we’re going to change institutions and behaviors. I don’t see that happening. But what I do know is that we can spend time with each other, with one another, and I can render you less vulnerable to the ills of crappy science. The science that has removed…

By the way, in my first outreach to many of you, to Roger, to Matt, to Dr. Garret, I led with this: when science replaces predictive strength with consensus as the determinant of a model’s validity, science becomes nonsense. I remember Matt’s response was, “You think?”

It was one of those things where, if you want an argument, you need another subject. And that’s the whole of it right there. That is exactly what’s happened. Predictive strength has been replaced with consensus, and the consensus conceals within it stuff that looks like rigorous method: P values and null hypothesis significance testing.

Now, when we take the probability of the hypothesis and remove it as a possibility, we flense validation from the whole process. We remove the only reason to have faith in the stuff to begin with. And what we’re often left with is: what’s the work product? What’s done with it? It seems to me that, increasingly, it becomes the tools or the weaponry for political or social control.

That’s where the shitty science goes.

Where does the good science go? It’s your iPhone, all that stuff, right? Technology, useful things.

I think we can render fair numbers of individuals largely immune to the expressions of bad science. When someone tells you he has a model that predicts some kind of impending doom, it needs to have predictive success to be science. It’s not a scientific model until it has successfully predicted something. I’m also going to ask it to do some retrodiction. I’d like to be able to use it backwards and forwards, please. And until then… nothing.

That’s the break.

I first heard Gerd Gigerenzer’s name from Matt in a talk that you did at the airport. Was that at the airport in Ontario? Where was it?

[Matt] Yeah. It looked like an airport hotel. I hate those chairs… trying to break that mold. I’ve done too many talks in hotels. I try to get away from them.

But he mentioned something that I thought was brilliant, and the name Gerd Gigerenzer, and I had enough German, so I typed in G-E-R-D G-I-G-E-R-E-N-Z-E-R. Boom. And the three things that he has written on null hypothesis significance testing, the three articles that we read, I read those over and over and over again. I get so much out of them. Over a period of 20-something years, this good doctor, this good professor, sounds the alarm again in wonderfully, brilliantly written… A lot of these people are good writers: my dad was a good writer, Stove was a good writer, you’re a good writer, Jaynes was a good writer. I think that’s all very interesting. Gerd, you’re a fantastic writer. Anton, I think you’re a good writer. Not sure yet, but I think so. I think so. Yes.

Yeah, if you haven’t read the three papers that we’re suggesting, read them tonight and come back with questions tomorrow. One of the things I got out of Gerd that was so cool: he was quoting studies showing that students are being taught, and believe, things about P values that just are not true. What’s interesting about what’s commonly believed is, first of all, that it falls into categories; it’s not a random assemblage of misconceptions. There are your standard-issue misconceptions, and they almost all falsely suggest something about the probability of a hypothesis.

We desperately want to know about the hypothesis; mostly we don’t care about the probability of the data. In fact, it seems to me when we’re studying with tremendous effort the probability of data, we’re doing statistics. And when we turn that into something that can objectively report on the probability of the hypothesis, now we’re in the space of science. And you can feel the desire to be there.
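To make the distinction concrete: a P value is computed assuming the null hypothesis, so it is a statement about the probability of the data. Getting to the probability of a hypothesis takes Bayes’ theorem and a prior. The sketch below is a minimal illustration under assumed inputs (a single yes/no hypothesis, with prior and power values that are hypothetical, chosen only to show the effect). It is the same positive-predictive-value arithmetic Ioannidis uses, written with a prior probability in place of his pre-study odds.

```python
def prob_hypothesis_given_significant(prior: float, power: float, alpha: float = 0.05) -> float:
    """Return P(H1 | p < alpha) by Bayes' theorem.

    prior -- probability that H1 is true before the study (an assumed input)
    power -- P(p < alpha | H1 true), the chance a real effect is detected
    alpha -- P(p < alpha | H0 true), the significance threshold
    """
    true_positive = power * prior            # H1 true and the test comes out "significant"
    false_positive = alpha * (1.0 - prior)   # H0 true and the test comes out "significant" anyway
    return true_positive / (true_positive + false_positive)


if __name__ == "__main__":
    # Same threshold, same power, different (hypothetical) priors.
    for prior in (0.5, 0.1, 0.01):
        posterior = prob_hypothesis_given_significant(prior, power=0.8)
        print(f"prior {prior:>5}: P(H1 | significant) = {posterior:.2f}")
```

With power 0.8 and the usual 0.05 threshold, it prints roughly 0.94, 0.64 and 0.14: the same “significant” result supports very different conclusions about the hypothesis depending on how plausible it was beforehand.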

Here is a book. Was this in popular usage? Yeah, the guy’s CV is very impressive. He seems like he was a good guy: Jum Nunnally, “Introduction to Statistics for Psychology and Education.” And in the space of, believe it or not (I have to read it each time: did it really happen in two pages? Well, no, 194 to 196, so three pages), he states as fact three of the common misconceptions regarding P values.

Each of them suggests something about the probability of a hypothesis that a P value cannot tell you. I love the Ethical Skeptic on P values. He called them ‘mildly inductive.’ Is there someone here who knows who that is? I don’t mean the name, like, “Oh yeah, it’s Tony Brown.” No? Okay, I’m trying to find out. I know someone who knows him. I would be a part of uncovering that, but it’s curious as hell.

We’re in an age where there are widely regarded authorities who remain anonymous. They’ve got pseudonyms, and they carry weight. We don’t know where the Ethical Skeptic’s from, where he lives, what he did, or what company he runs. But it’s good enough for us. He’s known by his work. This is something, by the way, we figured out running a virtual company. In the work-from-home scene, you became known only by your work product, and it was easier to shirk when no one was coming in every day. So when someone asks, “What does she do?” nobody knows. Uh-oh, that’s a problem.

Any questions?

Let me see if there’s something else I want to hit while I’m here.

Corruption. There are two definitions of corruption. One is unethical behavior for compensation, right? That’s kind of an obvious one.

There’s another one, and it’s the alteration of the structure of something such that it alters its function, like a corrupted computer file. Randomly change a one to a zero or a zero to a one: it might make it better (unlikely), but it’s likely to function differently. And that’s a corruption.

In the discussion of replication, the corruption thing comes up a lot. I know Emily dealt with it here. I want to make this point. The epistemic debasement, the flensing of the validating step of predictive strength and replacing it with null hypothesis significance testing and P values, that corruption of the scientific method invites, nourishes, and conceals the other kind of corruption, too.

I’ve got people in the industry going, “Wait a minute. You’re saying if I can get a scientist or two or three of them on a masthead and fund a study and get good P values, we’ve got science that shows Coke is good? You’re not eating enough donuts. Yeah, we can do that.”

You’re kidding me?

“Yeah, okay, we’ll be there.”

They’re there. That is what’s happened. That is what happens.

You buy the scientist, you pay for the study, you get the… The Ethical Skeptic referred to it as the “lientific method.” Hilarious if it weren’t so…

And you’ve got to laugh at all of this. First of all, it makes it more interesting. Get out of your head any idea of fixing the system. Let’s just fix each other and the people we know, people around us. And I think that’s the stuff of mass movements. I have but one experience with that, and that’s what I did, and a lot of people came around.

Thank you. This has got to be fun.

Sir.

[I just have a question.] Yes.

[So, you’re talking about the P values and statistics and so forth, right? And you’re not throwing out the baby with the bathwater here, are you, and saying that statistics are not useful for doing medical studies?] Nope.

[You’re complaining that people are applying the statistics inappropriately, or that the study design was such that the statistics don’t actually show anything.] Yes.

[But a P value in and of itself could be a useful way of thinking about whether there’s a signal there or not.] The doctor’s pointing out that the P value has legitimate use. And I think that we see it in quality control, for instance. It makes natural…

I got a great P value story. I’ll give you an instance of a probability of the data leading directly to a conclusion about a hypothesis: my nail measuring experiment. My dad found out… have you heard this before?

Yeah, I mean, yeah, I thought it was a miracle. I couldn’t believe that he knew that I didn’t measure those f__ing nails.

Has everyone heard this? Yeah.

[Tell it again.] My dad went to open house when I was in the fifth grade and found out from the teacher, who just said, “I don’t think he’s got a science project, and they’re due. They’ve been working on it all year, and I don’t think Greg has one.”

And my father didn’t say anything, which was really bad for me. You know, we get home, he still doesn’t say anything. In the morning, he doesn’t say anything. I think this is weird.

He comes home from work with a micrometer in a little wooden box, a bag of a thousand nails, and a quadrille tablet. We sit down, and he shows me how you measure a nail to within a ten-thousandth of an inch using a vernier scale micrometer. So I go to my room, write down the numbers one through a thousand, and just start putting down numbers approximate to… (and someone, a grown-up, just got caught in scientific misconduct doing it this very way in a weight-loss study recently; I was 10). Yeah, I just started putting down numbers that were proximate: a little higher, a little lower, one the same, a little higher, a little lower, one the same, and I brought it back to him.

And five minutes into it, he’s like, “You didn’t measure them.” I go, “Yeah, sure, I did. All of them, look, a thousand. I measured them. There’s the numbers.” He says, “No, you didn’t.” I go, “Yeah, I did. I swear I did. Promise I did.”

So he sends me back to my room and tells me to do it again. And I’m tripping. I’m thinking he must have been outside the window and looked in. I don’t think he would, but you never know, so I pull the blinds. I put a towel under the door. And I pulled out the army men, did another one through a thousand the same way, and used a bunch of the numbers I’d done before. Why would you have to do the experiment twice, right?

And I brought it out there and, son of a gun, the same damn thing. “You didn’t.” “I did.” “You didn’t.” “I did.” And then we sat there together and measured them and got this perfect Gaussian distribution. It was gorgeous. “That’s what happens when you measure something.”

So yeah, I mean, that’s that kind of thing, right? Yeah, for sure, for sure. Yeah.
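The story turns on telling invented numbers from measured ones. The transcript doesn’t say what Glassman’s father actually spotted, so what follows is only one hypothetical way to catch the pattern described (a little higher, a little lower, one the same). The nominal length, the manufacturing spread, and the choice of lag-1 autocorrelation as the check are all assumptions made for illustration.

```python
import random
import statistics


def lag1_autocorrelation(xs: list[float]) -> float:
    """Sample lag-1 autocorrelation: close to 0 when successive values are independent."""
    m = statistics.fmean(xs)
    dev = [x - m for x in xs]
    num = sum(a * b for a, b in zip(dev, dev[1:]))
    den = sum(d * d for d in dev)
    return num / den


NOMINAL = 2.5000   # hypothetical nominal nail length, inches
SPREAD = 0.0008    # hypothetical manufacturing spread (standard deviation), inches
N = 1000           # nails in the bag

rng = random.Random(0)
# Genuine measurements: independent draws around the nominal length,
# recorded to a ten-thousandth of an inch.
measured = [round(rng.gauss(NOMINAL, SPREAD), 4) for _ in range(N)]

# Invented numbers following the story's pattern:
# a little higher, a little lower, one the same, repeated.
offsets = [0.0003, -0.0002, 0.0]
invented = [round(NOMINAL + offsets[i % 3], 4) for i in range(N)]

for label, data in (("measured", measured), ("invented", invented)):
    print(f"{label}: lag-1 autocorrelation {lag1_autocorrelation(data):+.3f}, "
          f"distinct values {len(set(data))}, sd {statistics.stdev(data):.5f}")
```

A strictly repeating three-step pattern gives a lag-1 autocorrelation near -0.5 and only three distinct values, while independent measurements give an autocorrelation near zero and a spread of values that piles up into a bell shape.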

[How many things in biology actually fit a natural Gaussian distribution like that? That’s the problem, right? We can’t assume that all things in the world fit that.]

[100%.]

The ad hocery by which we pick the test statistic is a problem, for sure. I don’t want to step on someone else’s expertise, but I think calling it a surrogate is fair. And the overcertainty generated by this approach is there, for sure.

[Yeah, I would think that the problem I observe in medicine, anyway, is that doctors aren’t really trained in statistics, so they’ve been taught, “Here’s a tool: you put your data in this way, you turn the crank, and something comes out.” And they’ve learned that you can do P hacking and so forth, right, to get what they want. It seems to me the issue is more along those lines, as opposed to the strength. And you raise a good point: how do we know that the data you’re looking at actually follow a Gaussian distribution?]
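One common form of the P hacking mentioned here is measuring many outcomes and reporting whichever one crosses the threshold. A minimal sketch, assuming k independent tests in which every null hypothesis is true (a simplification; real outcomes are usually correlated), shows how fast the chance of at least one “significant” result grows. The function names are illustrative, not from any library.

```python
import random


def chance_of_false_positive(k: int, alpha: float = 0.05) -> float:
    """Probability that at least one of k independent tests of true null
    hypotheses comes out with p < alpha."""
    return 1.0 - (1.0 - alpha) ** k


def simulate(k: int, alpha: float = 0.05, studies: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo check. Under a true null, a continuous test statistic's
    p-value is uniform on [0, 1), so each test is modeled as one uniform draw."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(studies):
        p_values = [rng.random() for _ in range(k)]
        if min(p_values) < alpha:  # report whichever outcome "worked"
            hits += 1
    return hits / studies


if __name__ == "__main__":
    for k in (1, 5, 20):
        print(f"{k:>2} outcomes tested: exact {chance_of_false_positive(k):.3f}, "
              f"simulated {simulate(k):.3f}")
```

For 1, 5 and 20 outcomes the exact figures are about 0.05, 0.23 and 0.64; the simulated figures should land close to them.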

Yep, important consideration. You had something, Roger?

[I just wondered, your criticism of John’s title, the word “false,” would you say that a good Bayesian must surrender the terms “true” and “false”?]

No, no, but you know, if I’m going to tell you that penicillin is effective on gram-positive bacteria…

[Too long ago]

Yeah, you know, I’ll say that’s true. And if you produce someone who’s allergic to it or resistant, it doesn’t change that. You know, Popper started with his ‘universal generalization.’ What was it? It wasn’t crows. Was it swans? Ravens? What was it?

[It was swans.]

Swans.

Yeah. I’ve seen, we’ve played with inversions. All crows are black, kids who don’t know what a swan is, right?

Um, my father’s response to that, which I’ve not heard elsewhere, was, “Whoa, hold on, bullshit.”

He says it’s more likely that no scientific assertion ever had that form. He goes, “Or let me put it this way, I’ve never known a scientist stupid enough to come forward with a universal generalization as a scientific model. That’s absurd.”

And true enough: at my local Jack in the Box in Prescott, just miles up the road, I was sitting there watching ravens pick through garbage, and there was a red one. Almost the red of your jacket. Kind of Irish Setter red, beautiful hair. But he was definitely red. And it was interesting: the other birds were kind of keeping their distance from him while they were eating garbage.

And I went to the local bird expert, and I had pictures, and he had pictures, and he knew it was some mutation. And it was funny, because you read Popper and you think there’d be huge excitement, a refutation of something. No, actually, it’s a mutation.

[Just to reinforce, because a lot of what I do is look at the capture of the data to start with. Most of the games are played before any of the statistical stuff comes into action, if you like. So what you tend to find is that there are ways of gathering data such that it’s just a bit like your nail measuring experiment. Really, there’s no point in doing anything if the data going in is just absolute nonsense. And that’s where I’m most interested in what you’re thinking.]

You know, you remind me very much of a legal concept. I think it was Oliver Wendell Holmes, and it was quoted (where’s Dale? Where’s Mr. Saran?) by our judge, Sammartino, in our case, in terms of the perjury of the academics, and he said, “You can’t apply law to lies.”

Yeah, you’re just sunk at that point.

And what to do about a generalized dishonesty through our system? I don’t know. But if I’m going to do fake science, I’d sure rather hide it in the consensus variant. I watched the process by which scientists are purchased and studies are performed and/or buried.

[97% of scientists agree with you.]

My dad used to scream at the TV. He’d jump up and turn down the commercial every time. “There’s no voting in science!”

It’s the “nine out of ten dentists who…” you know, I mean, that was the one that got stopped every time.

We had Dale once. What did I tell you? We set Dale up with the Science Bear. Someone told him, “Yo, call Jeff and ask about…” What was it?

[We crank called him at night to get him wound up about P values]

[It’s exactly like what you’re talking about, like if you grab that on all coordinates, it disappears. It’s nonsense, you know, losing his mind. I was like, “Oh God, why did we do this?”]

40 minutes trying to get off the phone?

[Greg would say “Put on speaker, put on speaker”]

With the Science Bear. I miss him. I miss the Science Bear.

[I have one more question.]

Anything.

[So this distinction between true and false, or the use of those terms: is it fair to say that they don’t apply well to data? Not useful terms as applied to data, but useful as applied to philosophical or logical claims?]

Yeah, let me tell you this. The most important things in the world will sit squarely outside of the realm of science. And my line was, if we’re going to have a town hall debate on free speech, where we resolve whether we’re going to continue with free speech or not, I’m going to come and I’m going to join in the debate, and I’m going to do my best to argue. And I’m going to bring a gun in case I lose the debate.

Okay?

The most important things in our world aren’t amenable to scientific analysis. And when I hear people say, “Well, you don’t know how hard it is in psychology, that we can’t do the experiments, and we don’t have the controlled variables,” and on and on and on, I get it. And maybe that’s why literature has done a better job than academia in dealing with psychological issues, in terms of shedding light, of being a source of truth.

I think Murray Carpenter’s sharp on that, on King Lear in particular. It’s brilliant. There’s exposition of psychology there that you never would get out of the university. Insights, right? That’s harsh. I wish I could be Douglas Murray and talk about the rubbish degrees he’s got so little trouble with. Just, you know, and you ask him what they are and he’ll tell you.

What a character. I greatly enjoy him.

Epistemology Camp Series

Science Is Successful Prediction, by Greg Glassman

Mindless Statistics, by Gerd Gigerenzer

Intersections of Probability, Philosophy, And Physics, by Anton Garrett

Sunday Discussion, Part 1: Epistemology Camp, with Greg Glassman, Anton Garrett, Peter Coles, et al.

Sunday Discussion, Part 2: Epistemology Camp, with Dale Saran, Anton Garrett, Gerd Gigerenzer, et al.

Sunday Discussion, Part 3: Epistemology Camp, with Greg Glassman, Dale Saran, Peter Coles, et al.

Greg Glassman founded CrossFit, a fitness revolution. Under Glassman’s leadership there were around 4 million CrossFitters, 300,000 CrossFit coaches, and 15,000 physical locations, known as affiliates, where his prescribed methodology (constantly varied functional movements executed at high intensity) was practiced daily. CrossFit became known as the solution to the world’s greatest problem: chronic illness.

In 2002, he became the first person in exercise physiology to apply a scientific definition to the word fitness. As the son of an aerospace engineer, Glassman learned the principles of science at a young age. Through observations, experimentation, testing, and retesting, Glassman created a program that brought unprecedented results to his clients. He shared his methodology with the world through The CrossFit Journal and in-person seminars. Harvard Business School proclaimed that CrossFit was the world’s fastest growing business.

The business, which challenged conventional business models and financially upset the health and wellness industry, brought plenty of negative attention to Glassman and CrossFit. The company’s low-carbohydrate nutrition prescription threatened the sugar industry and led to a series of lawsuits after a peer-reviewed journal falsified data claiming Glassman’s methodology caused injuries. A federal judge called it the biggest case of scientific misconduct and fraud she’d seen in all her years on the bench. After this experience, Glassman developed a deep interest in the corruption of modern science by private interests. He launched CrossFit Health, which mobilized 20,000 doctors who knew from their experiences with CrossFit that Glassman’s methodology prevented and cured chronic diseases. Glassman networked the doctors, introduced them to researchers in a variety of fields, and encouraged them to work together to expose the problems in medicine and to develop preventative measures.

In 2020, Greg sold CrossFit and focused his attention on the broader issues in modern science. He’d learned from his experience in fitness that areas of study without definitions, without ways of measuring and replicating results are ripe for corruption and manipulation.

The Broken Science Initiative aims to expose these problems and to equip anyone interested with the tools to protect themselves from the ills of modern medicine and broken science at large.
