At a CrossFit affiliate gym in Boulder, Colorado, in 2022, BSI Founder Greg Glassman explains his definition of what science is, and what it isn’t.

Transcript

[00:00:00] Hi. Hi. So, all CrossFitters, right? Anyone not? That makes this easy. So I’m back… No, I never really went away. I’ve been doing for the past two years in earnest what I’ve done for the past decade, and that is study how it is that a guy like me could have people like Zoë Harcombe and Thomas Seyfried and Malcolm Kendrick and Gary Fettke and Tim Noakes and David Diamond and Tim Roche and all these scientists. Peter… Gosh, I’m leaving people out. But we had in Santa Cruz, in my home, at our Derelict Doctors Club, scientists, researchers, physicians from around the world, each of whom had some vital message, vital in the sense of essential, like necessary for the optimal functioning of the organism. People with an essential message. And yet that message was diametric or orthogonal to the mainstream view. They had taken heat for it, been criticized. I mean, imagine what it’s like to be a Zoë Harcombe or a Malcolm Kendrick and be told in The Guardian that your effect on civilization has been like that of a war criminal. And this is long before COVID.

And I knew it was significant, and I knew that something was wrong in science. There wouldn’t have been a CrossFit if there hadn’t been something wrong in science.

In fact, I’ve kind of come to see that I’m kind of a guy meant for fucked-up things. Like, I don’t know what I’d do if fitness were perfect and everyone ate right. I can’t imagine what I’d do. And I’ve always enjoyed looking around in the midst of mass hysteria or delusion and trying to get a sense of things. And CrossFit was born out of that. And I’ve continued in that exact vein. And we’ve all known about broken science for quite a while. Can you hear me well enough? Sure. I had something back. And if none of you have explored broken science, I would suggest you do something as simple as put

[00:02:18] “broken science” into Google or any other search engine. Look what comes up. It’s pretty fascinating how big an issue this is, and it manifests as the replication crisis, reproducibility crisis, replicability crisis. A whole lot has been written on it. A whole lot has been discussed. And what I’ve done over the past two years is try to learn more about it, so that maybe, instead of just having these people over, all of us sharing this understanding that there’s something tragically missing in the mainstream view, maybe there are some takeaways as to what that is. And I’ve got to tell you, I don’t have an answer from any kind of societal perspective. Things are really, really bad.

And I’m sure, show of hands, if you’ve noticed… and I’m not going to get into COVID or critical race theory. I’m not going to talk about any of that stuff. I’d like to do this without being polarizing, any more polarizing than I naturally am. But we don’t have to get into the weeds on any of this. If I could just see, as a show of hands: have you noticed that something has changed significantly, and for the worse, in the past five or ten years? Right? Big hands, up high. No one’s coming after you. Yeah. And for those of you that haven’t seen that: “No, what? Are you kidding? This is great.” You know, like the three-year-olds that are drawing stick figures with masks on them. Like, that’s the way it’s always been. But if you don’t have some inkling of a sense of something that’s gone awry, then tune me out and come back later. But for those of you that do, I’m talking specifically to you. And I think at kind of a high-order view, I can shed some light on some of this. I have very little hope for our society and our culture. And I wish I could come in… but that doesn’t depress me. I’m not bummed

[00:04:06] out about it. I can just tell you, I can’t find a logical reason to be optimistic. I felt the same way about health, though, and fitness. But what I did know is… this came to me from a reporter. I’d been hit over and over and over again: “Greg, you have to admit CrossFit is not for everyone.” And one day it popped out of my mouth. It just came from nowhere, because I’d been abused by that so many times. Because it’s, yeah, I get that, you know. But what I said this one time, it just came out: “You’re right. But it’s for anyone.” And what is that anyone, as opposed to that everyone? Well, you’ve got to be willing to show up. You’ve got to be willing to discomfort yourself. You’ve got to come back tomorrow.

I mean, it’s going to be you. I don’t have any information where you learn it and now you’re healthy. It’s not like that. You’re going to have to do something. But amongst those people who engage, participate, maybe even have to suspend belief for a while just to see how it comes: show up Monday, show up Wednesday, show up Friday. Three weeks later, in many cases, you understand. Now you know. And so that’s what that anyone is.

And so on the science front… and Emily Kaplan is here. Emily was working for CrossFit HQ in public relations, and as things got strange for me, she was a very, very capable defender of me, and oversaw, almost single-handedly with a team of lawyers, my departure, and has stayed with me on this science front. And so she’s my partner in studying broken science.

And what we’re doing is producing a curriculum of science studies, one that doesn’t make physicists laugh, one that is consistent with how science is practiced where it replicates, as opposed to where it can’t.

And the goal is this: I’m not going to fix messed-up science. But I can do this. [00:06:04] I can protect any man, any woman, anyone from the ravages, from the tyranny of shitty science and its scientists. It’s not a real easy thing to do, but it is a pretty simple thing to do.

And we can just kind of start talking about that today, to give you some sense of how it is that science went bad and what it means to be broken, to not replicate. Any questions before we get at anything about that? Comments, observations? It will help me if you give me some inputs. I get it in private. I get asked all the time how I’m doing. Let me tell you: I’ve never been happier. So in terms of me, this is the best thing that ever happened to me. Getting to work on this, for the sake of family, friends. I’ve got kids, I take them to school. I just got back from Belize, and we’re going to take a sailing trip through the Mediterranean. I’m building a house in Scottsdale, remodeling another one on Coeur d’Alene. I mean, I’m doing exactly what I would do if I were in heaven and got to call the shots. So Greg’s great. Yeah. Thank you. I’ve always been happy. I’ve never felt this good about anything. The stress is fundamentally gone, and I didn’t know what stress I was under. I worried about all of you, everyone. I worried about every gym owner. And I do so much less now.

Let’s talk about what science is and what it isn’t. More important than learning what it is, I think, is perhaps learning what it isn’t. That might be easier and maybe more important.

Anyone want to take any stabs at that? I hate to put people on the spot, but I’ve seen this done before. It’s kind of an interesting thing to do, especially with big groups. Look, I bring my own markers. Science is… anyone? Scientific. Objective. Yes, it is. It’s source and repository.

Source and repository of man’s objective knowledge

[00:08:09] Of man’s objective knowledge. So let’s just start. That’s great. Yes? I’m sorry? For sure. Lack of curiosity. Hard to imagine that being consistent with it. And that’s kind of on that sociological side, psychological side. Challengeable. You never don’t ask questions. Never. You know, falsifiable. That was Karl Popper’s demarcation, and I’m sympathetic to the argument that it’s a requirement for a meaningful proposition that it be falsifiable. But as a demarcation as to what constitutes science or not, it’s been an abysmal failure. And part of what I have come to learn is that academic science, in relying on Popper, Kuhn, Feyerabend, and Lakatos, the guys that David Stove calls the irrationalists… there’s a lot happening here, but one of the tragic turns that academic science made was using falsifiability as the demarcation mark to demarcate science from non-science. When you cannot falsify something, we move away from meaningful propositions in science. But you know what? “Aries are cute” is meaningful and could be falsified. But it’s not a scientific assertion, in that it lacks something else that we’ll come to. I’m not pooping on the falsification notion. But I’m telling you, it is a criterion for rationality, for meaningful discussion. And I do believe that Popper took that directly from the Vienna Circle and the logical positivists, who he was trying to influence, and he desperately hoped that they would take him into their ranks. And they never did. But I’m running with it. I’m just going to take these that are working with what I’m looking for. I’m going to take “objective” right here, and then I’m going to kind of push you into the next piece here. It’s man’s objective source of knowledge. It’s both source… Isn’t that a brilliant red? That’s Crayola crayons’ dry-erase marker, after all these years loyal to Expo.
And it’s just, it’s gone, right? It’s source and repository. So it’s where we get our objective knowledge and it’s where it sits, and it sits siloed in models.

[00:10:39] And these models have four flavors, depending on… this gives away a lot… depending on their predictability.

On their quality or ability to make predictions graded on predictability.

And that’s conjecture, hypothesis, theory, and law.

But this is important, and this is the true demarcation, not falsification. And I’m talking about the classical demarcation of science from non-science. The demarcation is predictability. And so we can sit here for a long time and talk about the disciplines of astronomy and astrology. But what it turns out is that astrology’s contention that Aries make for good girlfriends would find less predictive value than, say, an astronomer saying there’s going to be an eclipse of the moon in 463 days, 5 hours, 9 minutes, and 12 seconds.

And if you look to the history of man, our quest for prediction is really amazing, the things we have done. I’ll just toss some of these out at you. I had at one point wanted to memorize these because it would have been such a nice parlor trick, and you’ll probably see a video at some point where I am able to do that.

But the efforts that have been put into predicting the future include alectromancy. That’s making birds take off and seeing which way they go. Tells you everything you need to know. Astrology, astromancy, augury, Bazi, bibliomancy, cartomancy, ceromancy, chiromancy, chronomancy, clairvoyance, cleromancy, cold reading, consensus science. I put that in there.

Crystallomancy, extispicy, face reading, feng shui, gastromancy, geomancy, haruspicy, horary astrology, hydromancy, I Ching, kau cim, lithomancy, modern science. That’s mine, too. That’s the one that actually predicts the future.

Molybdomancy, naeviology, necromancy, nephomancy, numerology, oneiromancy, onomancy, palmistry, parrot astrology, paper fortune teller, pendulum… this one the printer didn’t catch… rhabdomancy, runecasting, scientism, scrying, spirit board. That’s your Ouija board and shit, right? And tasseography.

Common methods for fortune telling

Common methods used for fortune telling in Europe and the Americas include astromancy, horary astrology, pendulum reading, spirit board reading, tasseography (reading tea leaves in a cup), cartomancy (fortune telling with cards), tarot card reading, crystallomancy (reading of a crystal sphere), and chiromancy (palmistry, reading of the palms). The last three have traditional associations in the popular mind with the Roma and Sinti people. [WIKI]

Another form of fortune telling, sometimes called “reading” or “spiritual consultation”, does not rely on specific devices or methods, but rather the practitioner gives the client advice and predictions which are said to have come from spirits or in visions. [WIKI]

- Aeromancy: by interpreting atmospheric conditions.

- Alectromancy: by observation of a rooster pecking at grain.

- Aleuromancy: by flour.

- Astrology: by the movements of celestial bodies.

- Astromancy: by the stars.

- Augury: by the flight of birds.

- Bazi or four pillars: by hour, day, month, and year of birth.

- Bibliomancy: by books; frequently, but not always, religious texts.

- Cartomancy: by playing cards, tarot cards, or oracle cards.

- Ceromancy: by patterns in melting or dripping wax.

- Chiromancy: by the shape of the hands and lines in the palms.

- Chronomancy: by determination of lucky and unlucky days.

- Clairvoyance: by spiritual vision or inner sight.

- Cleromancy: by casting of lots, or casting bones or stones.

- Cold reading: by using visual and aural clues.

- Crystallomancy: by crystal ball; also called scrying.

- Extispicy: by the entrails of animals.

- Face reading: by means of variations in face and head shape.

- Feng shui: by earthen harmony.

- Gastromancy: by stomach-based ventriloquism (historically).

- Geomancy: by markings in the ground, sand, earth, or soil.

- Haruspicy: by the livers of sacrificed animals.

- Horary astrology: the astrology of the time the question was asked.

- Hydromancy: by water.

- I Ching divination: by yarrow stalks or coins and the I Ching.

- Kau cim: by means of numbered bamboo sticks shaken from a tube.

- Lithomancy: by stones or gems.

- Molybdomancy: by molten metal after it is dumped in cold water.

- Naeviology: by moles, scars, or other bodily marks.

- Necromancy: by the dead, or by spirits or souls of the dead.

- Nephomancy: by shapes of clouds.

- Numerology: by numbers.

- Oneiromancy: by dreams.

- Onomancy: by names.

- Palmistry: by lines and mounds on the hand.

- Parrot astrology: by parakeets picking up fortune cards.

- Paper fortune teller: origami used in fortune-telling games.

- Pendulum reading: by the movements of a suspended object.

- Pyromancy: by gazing into fire.

- Rhabdomancy: divination by rods.

- Runecasting or Runic divination: by runes.

- Scrying: by looking at or into reflective objects.

- Spirit board: by planchette or talking board.

- Taromancy: by a form of cartomancy using tarot cards.

- Tasseography or tasseomancy: by tea leaves or coffee grounds.

And if you look up each of these, what you’ll find is that these were guaranteed methods in different cultures and times, some of them 1000 years old, that were believed proven ways to read the future, none of which were.

[00:13:21] And so we only have really one source of objective knowledge, and it is modern science. And it finds validation how? Through predictive power. That’s it. Predictive power, which puts us squarely in the space of probability theory and induction.

Kind of running ahead of myself here. But what happened in the 1930s with Karl Popper, and his mistake in picking falsification over predictive value, was an affliction of academic science.

This isn’t Elon’s problem. What’s happened is this, and this is the thesis that Emily and I have now taken around the world. And what I’ve done is queried the best minds in philosophy of science. And of course, there are people that have come from the same tradition that I have, so it was pretty clear that I would be able to find their favor if I cast my story and thought right.

But our thesis is this: when science replaced predictive value with consensus as the determinant of a scientific model’s validity, science became nonsense. Largely what we did is we ended up with a corpus, a body of published research, that won’t replicate. And boy, if that’s not broken, I don’t know what broken is.

And the area is where… A neat little funny: I’m going to recommend a lot of Wikipedia articles, and I couldn’t hate Wikipedia any more than I do. I hate it more than anybody. Everything important they’ve got wrong. But it is a reasonable place to start an introduction and have a discussion. I would recommend that if you’re not familiar with the replication crisis, you look there.

CrossFit | Gary Taubes: Postmodern Infection of Science and the Replication Crisis

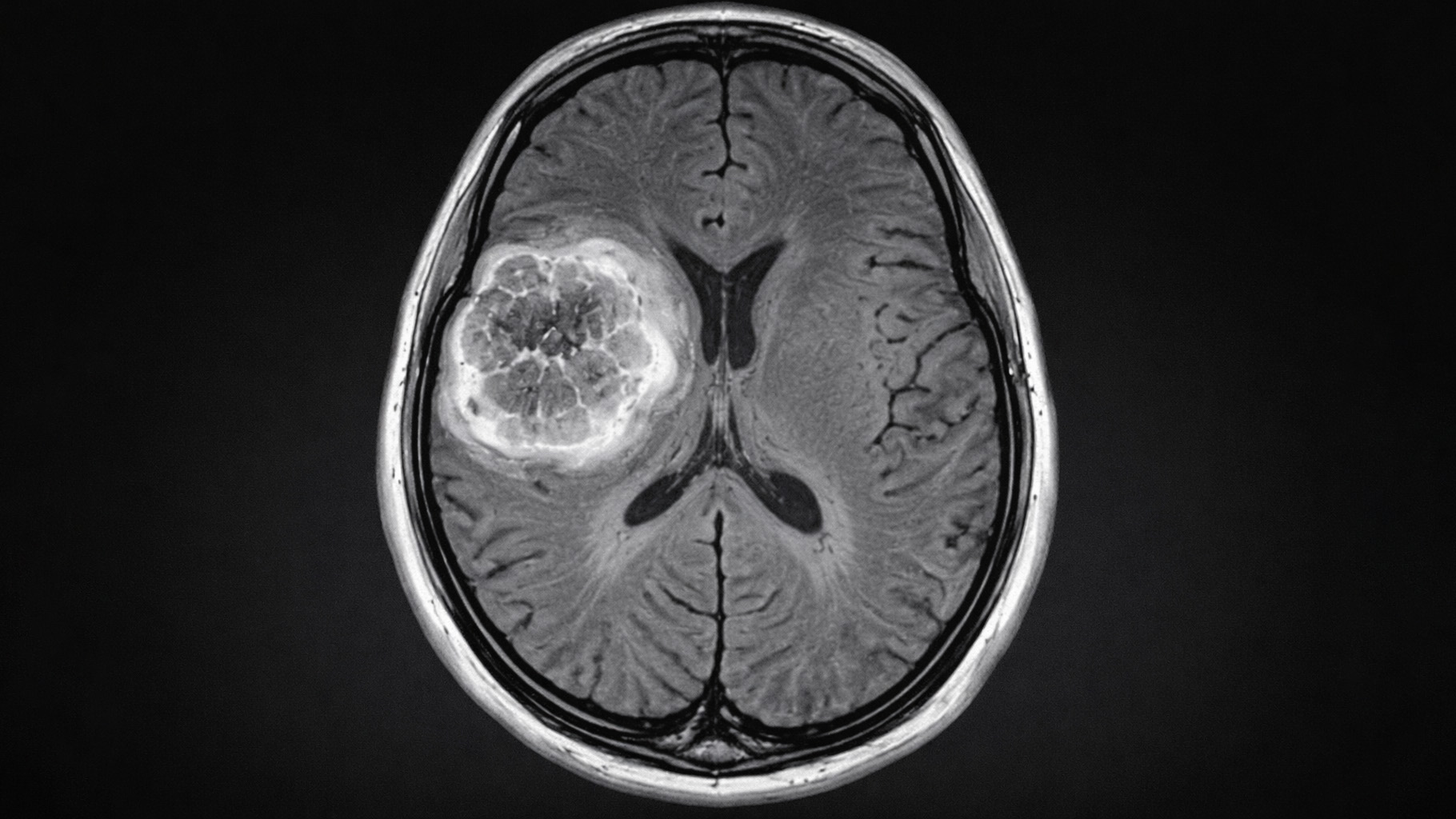

And look to see what fields, by their own admission, have a corpus of published science that will not replicate. It includes psychology, economics, sociology, all of the outlandish stuff you might study at school for which, if your kid told you that’s where they’re going to spend the money, you’d be like, “Oh, wow, shit, are you going to get a job?” All of those. But tragically, sadly, it also impacts medicine. And one of the areas that has demonstrably been hit [00:15:46] hardest, for which there has been a dramatic and very expensive multibillion-dollar effort to demonstrate the replication error, is oncology.

And what happened is that a fellow named Glenn Begley at Amgen, director of research there, had been queuing up clinical trials for cancer drugs, and one after another after another, they failed. We had Glenn Begley out to Santa Cruz for one

Raise standards for preclinical cancer research

of these DDCs. So this is someone that was part of our heritage, and we had him out from Australia to talk to us. And what he did was, he decided that maybe the problem with these clinical trials… because he has a long, 30-year record of bringing drugs to clinical trials, and he knows about where your batting average should be. And here he’s finding, no matter what they do, it doesn’t work. And so he began to doubt the preclinical science,

the science on which these clinical trials were motivated, in oncology and hematology. They identified 46 studies that were benchmark, landmark, and it turned out that 11 of them would replicate and the others would not. A billion dollars and a decade to figure that out. We don’t know to this day which ones replicated and which did not. One of them that did not replicate, Dr. Begley told us… and he did this in conjunction with a guy named Ellis at UT Southwestern. Fascinating: in the aftermath of this, Begley gets a standing ovation when he appears in the audience, and Ellis is escorted on and off campuses with security. But what we know is that fundamentally oncology… you get the oncologists in here, they just hate this fucking lecture. You may practice

oncology as a craft, and do so with the skills and the manners and the behaviors and the epistemic values of a scientist. But the underlying work is seriously flawed. It has a desperate, serious problem that doesn’t seem to be getting better.

One of the studies that wouldn’t replicate has been cited 5,000 times since being discovered to be false. John Ioannidis, who is the most cited physician

Why Most Published Research Findings Are False

[00:18:00] in all of medicine by far, and the ninth most cited scientist in all of peer-reviewed literature, wrote a piece that is the most cited bit of medical research of all, and it’s “Why Most Published Research Findings Are False.” And so if I go back to this Popperian falsification versus predictive value… put a little piece of it up here, versus predictive power. What we find here is that this is in academia, and that the predictive power one is more like industry. Some of you might already get a sense of things: that it might be easier to have a theory in psychology or sociology that is patently false, your pet theory, and it hasn’t impacted your ability to teach at the university. Can you imagine a theory on rocketry that was fundamentally flawed, and trying to work with Elon and getting something to Mars?

Now, some would say he has a distinct advantage in that the field in which he’s testing these things has got the inherent advantage of easier experimentation. That is without a doubt true.

But the problem is that when validation comes from agreement… and really what I’m standing here telling you is that the argument from authority is bullshit.

Do you know how long that’s been known? But when someone gets in front of you and says, “If you don’t believe in me, you’re not following science,” or “If you don’t follow me, you don’t believe in science,” or anything that sounds anything like that, what we need to do is train ourselves collectively so that that inspires a laugh. Because that is clearly coming from someone who does not understand what science is.

Can you be a scientist and not know what science is? Oddly enough, you can. And I’ve thought of other examples of things you could be and not understand. It actually can happen. This is academic. And this is industry.

These people have the p-value fiasco. These do not. In fact, one of the fellows that we’ve drawn close to, that I know Aaron knows, has, with a guy named Trafimow, suggested an alternative to null hypothesis testing that involves predictive power.
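As an editorial aside, the gap between a small p-value and strong evidence for a hypothesis can be sketched with Bayes' theorem. The sketch below is not from the talk: the function name, the simplification that the only data is "the test came out significant," and the numbers for prior, power, and alpha are all assumptions chosen for illustration.

```python
def positive_predictive_value(prior, power, alpha):
    """Probability that an effect is real, given a 'significant' test result.

    prior: assumed probability the tested hypothesis is true before the study
    power: assumed probability of a significant result when the effect is real
    alpha: probability of a significant result when there is no effect
    All three inputs are hypothetical illustration values, not data.
    """
    true_positives = prior * power
    false_positives = (1.0 - prior) * alpha
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # Same test quality, different starting plausibility of the hypothesis.
    for prior in (0.5, 0.1, 0.01):
        ppv = positive_predictive_value(prior, power=0.8, alpha=0.05)
        print(f"prior={prior:.2f}  P(real | significant)={ppv:.2f}")
```

Under these assumed inputs, the same significance threshold supports very different conclusions depending on how plausible the hypothesis was to begin with, which is one way to read the complaint about p-values here.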

Trafimow, 2009 – No justification for computing p values

[00:20:41] Someone was talking about… it was Ioannidis, and he was saying it would be neat to see the IHME algorithms that created their models, that maybe then we could better understand the math if we could see how these models were built. I have no interest in the models.

I would pay to not see how they were built. Why? Because they have no scientific value or merit until they’ve predicted something successfully. Don’t tell me about your race car when the fucking thing won’t start.

If you can’t make it go, I don’t want to hear about the theory underlying the thing. Make it go 260 miles an hour. Now I’m dying to hear. I want to know how you did that. What’s in that motor? We have been listening to people feeding us all kinds of theories. And those are models. Theories and models: it’s just an if-then proposition. Right?

We’ve been fed a lot of models that have proven nothing, shown nothing, demonstrated no predictive value. That’s just a part of what we want to address here. There’s so much that can be talked about. It turns out that these two sides, the academic and the industrial… and we might as well just label it and call it that; it is a generalization.

There are other differences that are fascinating about them in terms of

Models: Academic vs. industry:

| Academic | Industrial |
|---|---|
| Falsification | Predictive power |
| Won’t replicate | Must replicate |
| Deduction | Induction |
| Use p-values | Don’t use p-values |

Won’t replicate. And must replicate.

What are the differences, Emily? What’s from the list? It’s a long one. Questions? Anything on this? Is this interesting? People don’t trust… meaning, until these conversations, and they’re operating on… you don’t know how to correct or… Yeah. Where you find the replication crisis and where we find scientific misconduct,

it’s associated with peer review. It’s a problem in peer review. And what is peer review?

Well, that’s the process by which the NSCA said of CrossFitters that you were injuring people. That was an amazing experience, to see firsthand the peer review process from the emails of the peer reviewers, taken by court order from a forensic examination of their servers.

[00:23:57] To actually see how a guy says, “I’m not going to publish this. If people aren’t getting hurt and we don’t have injuries, then it can’t be published.” So, like, “Here, we’ve got some injuries.” “Yeah, there you go. That’s the way to do it.” You’ve got peer review to defame your efforts. And this was the highest level of academic training. A question? Please. “Do you think it’s… probably… what they want to… part of this?” A wonderful stew of both. And it brings you here. There’s a definition of corruption like a corrupted file in some code. So you lose a bit, some piece in a string of code, and the file doesn’t work now. Right? It’s been corrupted. And so it’s something altered or missing that affects the performance of something, its function. There’s that corruption. Then there’s taking the money and running, looking both ways. Right? These go hand in hand. It’s amazing. Think of with what ease you can alter a consensus versus get the outcome of an experiment to predict something different. It’s really easier to just pay someone to change their mind. And in fact, when a guy is about to publish a paper and it’s been approved for peer review, and I pay her to change her opinion on it, and it gets peer reviewed, it’s still satisfying the consensus requirement. It’s still the consensus. You just bought a new one. And the powers that be in pharma, in food and beverage, have found it child’s play to purchase just about any science you want and get it published in peer-reviewed journals. So it’s a wonderful stew of both. And my draw into this: I actually got in from the deliberate scientific misconduct angle, ahead of coming face to face with the fact that there are people that get a wee p and crow that they found cause, you know, a small p-value, and they think that must be in fact powerful evidence of a causal factor.

[00:26:34] And it is not at all, not in the least. Question? “Can you explain the difference between an inductive framework and a Bayesian framework?” Yeah. You know, I’ve made a pretty good effort to not use the Bayes term.

But if asked if I’m a Bayesian by someone looking at me, I’m going to say, yes, I am. I have some problems with subjective Bayesian priors and all that kind of thing.

But the people that have this figured out come from that side of things. What happened with falsification was that Popper was trying to put science on a deductive groundwork. He wanted the certainty of deductive logic and went off in that direction.

The truth of the matter is that scientific assertions, models, find validation from their predictive power.

And that is a percent. It is probability, and it is the space of induction. And one leads directly to a lack of replication and no growth. And the other thing too is that very few scientists, except for some theoretical physicists, and only briefly, took the whole falsification thing seriously. But what happened when we went down this

road is that the scientists from Laplace to Jeffreys to Shannon, Cox, brilliant minds in physics and communications theory…

What happened was that the inductive probability, or probability theory, that they developed, the logic of probability, got suppressed and ignored for a long time. And we live right now in an era where it has been unleashed. And these kids, the Bayesians, have come forward. And with modern computing methods, the case has been made very, very strongly. For those of you that have tolerance for this kind of thing and interest, E. T.

Jaynes is a physicist who published in 2003 Probability Theory: The Logic of Science. And I tell you what, the number of scientists in genetics, in AI, in radar systems, in all kinds of advanced computing fields that hold Jaynes up as a hero is really interesting.

Probability Theory: The Logic of Science

[00:29:07] You really get a who’s who of the smartest scientists on earth by finding who it is. Jaynes died in ’98. His book was published in 2003. Just to give you a sense of the kind of impact he’s had: the gentleman that finished the book and put it together and published it in 2003, a guy named Larry Bretthorst, who we’ve had some… these guys, it was some of the most challenging conversations you could ever have… is a physicist employed by Washington University in St. Louis Medical School, Department of Radiology. And he has written code using methods that recognize inductive logic, or inductive probability. He’s written code that has passed a bunch of states’ radiology boards, saying this code can read MRIs, X-rays, and sonograms, 1,000 of them in 3 seconds, with uncanny accuracy. And so these guys are really making some progress using the very methods that in academic medicine, in academic science studies, we find aren’t accepted. Let me tell you one real important difference here. This side wants to talk about the probability of a hypothesis given the data, and that looks like P, parentheses, H, straight line, D: P(H|D). That reads as the probability of the hypothesis given the data.

These guys tell you that you need to only look at the probability of the data given the hypothesis, and that that is the only thing that makes sense, and that this can never be done at all. Once you talk about the impossibility of a probability of a hypothesis, you’re no longer able to admit that a theory finds validation in its predictive value.
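As an editorial aside, the two quantities contrasted here, the probability of a hypothesis given the data and the probability of the data given a hypothesis, are connected by Bayes' theorem. The symbols below are standard notation rather than anything written in the talk: $H$ is a hypothesis, $D$ is the data, $P(H)$ is the prior probability of the hypothesis, and $P(D)$ is the overall probability of the data.

```latex
\[
  P(H \mid D) \;=\; \frac{P(D \mid H)\, P(H)}{P(D)}
\]
```

Read this way, $P(D \mid H)$ alone does not determine $P(H \mid D)$; getting from one to the other requires assigning a probability $P(H)$ to the hypothesis, which is the step the speaker says one side refuses to take.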

And it’s as wrong a turn as a turn could be made wrong. It’s like getting up on I-40 and making a right instead of a left and expecting to see Flagstaff and instead finding other cities. I mean, it is just that obvious a bad turn. What I find really compelling is that where science works beautifully, and I’ll use Elon again, where people are doing things, making discoveries, advancing technology, driving science rapidly, there’s very little interest or discussion in philosophy of science. Where science is moribund, stuck, won’t replicate, ridden with thieves and miscreants,

[00:31:37] there’s an enormous focus on philosophy of science, and it’s dead wrong.

And so currently there isn’t a science studies that… you don’t find it at a university. And it’s all of this, and I’ll put their names up here because they were dead wrong and had horrific negative influence: Popper, Kuhn, Lakatos, and Feyerabend. These are what David Stove called the irrationalists. And if you have interest in this, I couldn’t recommend David Stove enough. Australian philosopher who wrote five books, each with a very different… three are really the same book,

two with an entirely different approach to looking at the grounding of induction and the grounding of science. But he’s written extensively on these four, and the academic science studies is entirely of that Popper, Kuhn, Lakatos, and Feyerabend variant. It wants to know only about the probability of data given hypothesis. These are the frequentists. This is what gets you to p-values. This is what gets you to overcertainty.

These people don’t mean the same thing as the others about random, about certainty, about chance, about probability. Fascinating thing to dig into the weeds and just look at what the meanings of probability are. This is kind of a rough thing for people, and I probably didn’t want to do this here today. But on this side of things, it’s actually believed that the outcomes, one through six, even the numbers on the dice… that probability is a feature of the dice, that those numbers are somehow baked into the structure of that thing. Guys on the other side, my side: for us, probability reflects the limits of your understanding, which makes it sound subjective, but it really isn’t. It’s a pretty fascinating thing. I’ll give you an example. I flip a coin and I ask, what are the chances that it’s heads? And you’re going to tell me, what’s that, 50%? And that is exactly the right answer.

Look at me. I’m going to tell you, I have a one.

[00:34:05] Right. You believe that? I mean, you saw me look at it. What’s the difference? It’s my knowledge. It doesn’t sit in the coin. Uncertainty is in our heads, not in nature, not in the universe. I’m sketching over some fascinating turf quickly, but it’s very, very interesting just how prominent this divide is in thinking.
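The coin example can be written down as a tiny sketch in which the probability is a function of what the observer knows rather than a property of the coin. The function name and the `"H"`/`"T"` encoding are assumptions made for illustration.

```python
from fractions import Fraction


def prob_heads(observed=None):
    """Probability of heads given the observer's knowledge.

    observed is None if the observer has not seen the coin,
    otherwise "H" or "T" for the face they saw.
    """
    if observed is None:
        # No information beyond "two faces": 1/2 is the right answer.
        return Fraction(1, 2)
    # Having looked, the observer is certain one way or the other.
    return Fraction(1) if observed == "H" else Fraction(0)


outcome = "H"                      # the coin has already landed
audience = prob_heads()            # the audience has not seen it
speaker = prob_heads(outcome)      # the speaker looked
print(audience, speaker)
```

The coin is the same physical object in both calls; only the information passed in differs, and so does the probability.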

My side is a minority for sure, but only in the sense that, I’d say, most PhDs employed in science are employed by industry, while the philosophy of science all sits in academia. But the problem we have is with our kids. I think it’s a mistake to teach someone in the seventh grade about the solar system, get the balls out and spray-paint them and put them on coat hangers, right? And then we’ll spend a week or two with the periodic chart. And then we’re going to talk about photosynthesis and contrast that with respiration for developing ATP, the currency of all bioenergetics.

And that’s kind of a survey thing, where we’ve got this view that science is a bunch of trivial facts that we’d put in a bucket, that may come up in Trivial Pursuit someday, right? And maybe you can accumulate enough of those facts to end up being a scientist. I think that’s a grave mistake.

I think what we need to do is first teach kids what science is and what it isn’t. And so I want to get that answer out: that it’s the source and repository of man’s objective knowledge, that this knowledge silos in models, and that these models have four levels of graded appreciation based on their predictive value, et cetera. When I was a kid, and I had a great upbringing in this, we’d hear that nine out of ten dentists recommended something, and my dad would jump up, this was before remote control, turn the TV down, and tell me, “This is bullshit.”

There’s no voting in science, and it doesn’t matter one whit what nine out of ten dentists believe. In fact, he wanted to hear about the one dentist, because it’s the one dentist that’s going [00:36:13] to have the revolution, the future, the advances. You’re always listening to the one in ten, not the nine in ten. I grew up with that, and it was very, very powerful.

I think there’s a way to codify that simply in some really elegant lessons and share that with the world. I plan on doing that. I’m in a neat position. I’ve got the time. I’ve got the friends. I’ve already run it by the people who, if they said, “This is bullshit,” I would be like, “Oh, fuck, I better look at this again.” I’ve already gotten their approval, and gotten the attaboys and the encouragement. I’ve got the resources, so I’m going to do this. I can’t be stopped, and I love things like that. And I enjoy sharing it with you because, whether you know it or not, we’ve been here before. On the health and fitness front, we stood up to a consensus that was patently wrong. Dead wrong. Old ladies shouldn’t squat? Holy cow. Yes, they should squat. It’s an emergency that they squat, right? We have this margin of decrepitude, and it’s moving in or moving out or holding still. Where are you? And we’ve got to push that and keep that at bay, right? This is Level 1 stuff.

So I came here today to share with you. I’m super excited about broken science, and I’m going to be redoing these DDC-like things and bringing other people around. One of these people, whom I didn’t plan on talking about today but was at lunch, is Jay Bhattacharya, who fascinatingly is a friend of Aaron Gans. He’s written extensively on it. He says the greatest driver of inequality in the past 50 years has been the lockdowns. Think about that, and think about me. You think I know the difference between sitting by my pool in Scottsdale during the lockdown, “let’s get some Chinese food from GrubHub,” versus sitting in Camden, New Jersey, in a tenement on the 14th floor with the windows boarded up and guards outside to make sure people don’t leave the building?

[00:38:19] What this has done for people of color, for the disadvantaged, for the downtrodden is unthinkable. Unthinkable. It resulted in race riots. Did anyone predict those race riots? Emily, I did. Long before BLM, I said that this ends in race riots. Where did I get that from? The CDC. They published a study. They said that this always ends in race riots, regardless of when or where. Mass quarantine disproportionately affects marginalized people, and you get race riots. Sure enough.

We’re not going to change the world, but we can change each other and we can change our friends, right? And boy, that feels a lot like the world changing. But I tell you right now, I see in the mainstream significant efforts to undo everything you’ve done. You think the low-fat thing is gone, the anti-meat thing? You think that’s gone? It’s not. It’s not.

Thanks for your ear. I didn’t want to turn this into... we could prove Bayes’ theorem. No. I’m having a blast. And you guys were my inspiration. I got to put something forward in the world, and you demonstrated the value of it. It gave purpose to my life. It’s made a lot of people healthier. And I think we’re going to do the same thing again, just on a kind of upstream level.

Questions. How are you? It’s good to see you, sir.

My question is whether there’s a technological solution to something like the replication crisis and consensus science. Does that put us in a worse scenario, or at the end?

You know, I don’t know who’s ready for it. But if you were to look at my recent pile of crap here, one of the first people to speak so clearly on the p-value mess was a guy named Trafimow. And I believe that it was his, and it was actually in a journal of sociology, of experimental psychology, which was like, wow. Sadly, my bias against that kind of academic effort in science kept me from reading the original piece.

[00:41:04] But I knew that he had written forcefully and critically of p-values and attributed the replication problem to them. I didn’t know how brilliantly he had written. But also, in going back and reading his piece from 2015, I think it was, I saw where the American Statistical Association felt compelled to finally say something. It’s America’s oldest scientific organization. And in 2016, I think it was, they asked that we no longer use p-values or confidence intervals in science work. And he, Trafimow, was the first of the big editors to insist that they would no longer accept those. And then he, with a fellow named Briggs, wrote a thing called “The Replacement for Hypothesis Testing.” And this was published in Structural Changes and Their Econometric Modeling, in Springer’s Studies in Computational Intelligence.

The Replacement for Hypothesis Testing

I brought that here in case someone asked for it. This is a brilliant offering, and I have no doubt it works. I mean, I went looking for it, assuming someone had done it, and I found it, which is kind of an odd bias in your research. But no one needs to worry, because it has no chance of being implemented. What it would do is suggest throwing out the corpus of academic science and starting over. And the truth is that the over-certainty that p-values provide us is a boon to academic science.

You know, we’re making scientists hand over fist, and the student loans, and man, it’s ugly, James. I don’t know what to do. But I do know that this is the kind of solution that would be needed, were academia open to the solution. And so this is one of these things, kind of like Uffe Ravnskov’s work, where you won’t find objections to or criticisms of this. What the opposition has found it best to do is not bring it up. And that’s where we are with that. But I’d love you to take a look at it. It is, in and of itself, a brilliant work. It’s got just a wonderful title: “The Replacement for Hypothesis Testing.”


[00:43:18] And it was published by Springer in their publication Structural Changes and Their Econometric Modeling. If you were to put in Trafimow, T-R-A-F-I-M-O-W, and Briggs and “The Replacement for Hypothesis Testing,” you’ll find it. And if that doesn’t come up right now, I’ll just give you mine and I’ll find another one. It’s a wonderful read, and the detail in which he gets into what is wrong with null hypothesis testing and where it’s led us astray... He sums it up: you get a small p, that 0.05, and therefore A causes B. And it’s just absolutely not right. You will not get from there to there that way, not logically.
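One way to see why a p-value under 0.05 cannot by itself establish that A causes B is to simulate experiments in which there is, by construction, no effect at all. This is a rough sketch under stated assumptions, not a method from the paper: both groups are drawn from the same normal distribution with a known standard deviation of 1, a simple two-sample z-test stands in for whatever test a study might use, and the function names are hypothetical.

```python
import math
import random


def two_sided_p(sample_a, sample_b, sigma=1.0):
    """Two-sided p-value for a difference in means.

    Assumes equal-sized samples and a known standard deviation sigma.
    """
    n = len(sample_a)
    diff = sum(sample_a) / n - sum(sample_b) / n
    z = diff / (sigma * math.sqrt(2.0 / n))
    return math.erfc(abs(z) / math.sqrt(2.0))


def false_positive_rate(n_experiments=2000, n=30, alpha=0.05, seed=0):
    """Fraction of no-effect experiments that still reach p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        # Both groups come from the same distribution: A does nothing to B.
        group_a = [rng.gauss(0.0, 1.0) for _ in range(n)]
        group_b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if two_sided_p(group_a, group_b) < alpha:
            hits += 1
    return hits / n_experiments


rate = false_positive_rate()
print(f"share of null experiments with p < 0.05: {rate:.3f}")
```

Roughly one in twenty of these experiments should clear the 0.05 bar even though nothing causal is going on, so the small p-value on its own says nothing about cause.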

Now, here’s what’s interesting. There is research that will replicate and is still false, in that it doesn’t imply what it’s assumed to imply. Even though you can do the experiment and get the same results, where the error comes is in validation.

And this is a point I might have made earlier. We talk about a demarcation between science and non-science, and I’m telling you it’s predictive value.

So if you want to know astrology versus astronomy: I actually watched my father in the seventies in front of an auditorium full of physicists, electrical engineers, advanced scientists, all employed by Hughes Aircraft. He asked them to differentiate astronomy and astrology. He asked them to give the demarcation, what it is that makes science, to give a definition. And he was at the board for about a half hour. In the end, he says, “Your definitions are beautiful. Explore the universe, learn new things,” you know, all the good things that we’d all hope would come of science. But he said that in the end none of these will differentiate astronomy from astrology. And so the essential demarcation is predictive value. The example he’d give is this: I have a cardboard box, and it plugs into the wall, and it’s got rabbit ears and an exhaust pipe. So this thing is burning gas and plugged in, all this shit.

[00:45:29] And I kick it and it says, “50 miles east of Barcelona, in 75 days, 3 hours and 2 minutes, an earthquake of magnitude 6.5.” And if that happens, my contention is the box is doing science. It’s not necromancy. It’s not the Ouija board. It’s not rune casting, right? Now, I don’t know how; the technology isn’t available to me. But I wouldn’t expect to tear the box open and find a goblin or a mermaid or Harry Potter. If indeed it works, we’d expect it to be working on first principles. He used that example because in his field there wasn’t... he was a rocket scientist. He built missile systems. And you never told anyone how your missile worked. None of your business. You didn’t peer review it. You would delay patents as long as you could so no one else would take them.

And there was a sad moment when you’d win the contract: what you’d have to do, if you got the contract, for second place. They’d actually fly drones by, and you’d have to shoot at them with missiles. And it was pretty clear: your missile’s good, mine’s better, right? I get the contract. What happens is second place gets the production contract, and the development contract person then has to share all their secrets with the other side. And he said they have blown gigs before to hide a technology.

So it’s, “Let’s not use our latest target ID device technology, because we’re not ready to give it up. We want to develop it in this other program.” And so it was a very different thing than peer review, but in the end it had no value if it didn’t produce. It had to have predictive value.

There was something else this was getting me into that I said I should have mentioned, Emily. There’s another piece here. It’s not just the demarcation between science and non-science; we also need to delineate between method and validation.

And this is really, really important, because the guys who have everything messed up, with the scientism that they practice, go into great detail about their methodology.

[00:47:39] And I tell you something: there’s no amount of method that creates value for science. It may or may not. Science has its value at the moment of validation, and validation exists entirely independent of method.

And so whether the theory comes from inspiration or perspiration, it still has its validity in its predictive value. And so if I dream E = mc², it’s every bit as real. It has the same scientific validity dreaming it as opposed to deriving it.

That didn’t happen. But I could pick some other example that could have been easily derived versus just demonstrated. Questions? Sir.

Where is the focus on identifying the source or the agenda? I guess it’s kind of a loaded question. I guess, the motive behind the bad science. It’ll differ by field, obviously: nutrition, sports, fitness. But is there a specific direction being pointed toward either an individual or a group behind the motive, behind perpetrating the...

Well, you know, I keep explaining that. In fact, my crude observation was that everything that’s wrong is wrong on purpose. And then later, for Russ Green and me, it was: we’ve only found corruption in the places we’ve looked. You know, like, anywhere we look, we see it.

And I remember things like tripping on that. I was a charter subscriber. I got a gift subscription years ago to Outside magazine. And there were only two things I really knew anything about, and they were cycling and fitness. And they were always wrong on those.

And so later I came to know Lindsey Ja, who worked there, and she said, “You know, we get everything wrong, Greg. The skiing, the climbing, it’s all wrong. The people that cycle get VeloNews, the people that ski get this. Outside is for people that have all this shit in their garage and don’t do it. The book goes to the coffee table, and it really doesn’t matter what we write.”

Oh, okay. That helps, you know? Like, wow. And that’s another one of these “wrong is wrong on purpose” things. But when I told Jeff Kane [00:49:57] this... I remember Jeff, he was CrossFit’s CEO before Dave. I said, “All this wrong is wrong on purpose,” and his retort was, “We don’t have a health care system, we have a disease economy, and an outbreak of wellness could bring the whole thing crumbling down.” Like, fuck, that’s some depressing thought. Except it makes me smile and laugh. But it helps because, you know, I’d rather things be shitty than be fooled.

You know what I mean? I’ll take the facts as they lie. I just want to know what’s really going on, and that is what’s really going on.

And so, whether it’s an open border or a cancer that doesn’t get cured, there’s someone who’s like, “Wait a minute, I like it the way it is right now.” And the amount of vested interest there is phenomenal. Phenomenal.

Brad Shirakawa, some of you may remember him, a good friend of Robb Wolf’s and mine way back, worked for a small pharma startup in San Diego, and he was waiting for a conference call to start. And the CEO of Pfizer, I believe it was, was talking about how he’d gone on Atkins and his cholesterol had plummeted. He’s healthy. And Brad’s listening; they don’t know they’re on, and they’re just making this small talk around the room. And there’s seemingly no realization that, like, wow, there’s a trillion dollars’ worth of medicine you’re unraveling right there in your own personal admission. You know, it’s a weird world.

I’m not answering your question, but I think maybe I am, in a way. I’m well aware of it. What I can do is just give you an example of what I saw. I watched Seattle Is Dying. And when they finally got down to the numbers... Have you seen that? It was an ABC News piece. And, like, rhetorical question: dying? It’s dead, right? It was so bad. But what I found is that if you take the numbers that the

[00:51:57] people trying to remedy the problem claim, and you look at the amount that’s being spent on it, they’re spending about 350,000 a head annually on the homeless. Man, you could set someone up pretty good for 350 grand a year, right? And so I thought about it a minute and I was like, I know what’s going on. Someone’s getting rich. So I asked Jeff to find out for me, like, where is the industry here? And there were three agencies, two in particular, that had siphoned off tens of billions of dollars in administering to the homeless, for medicine and outreach and transportation. Big budgets, all of it doing nothing except potentially exacerbating the problem.

And maybe I’m getting political here, but I’ve got to tell you, it’s a really sweet thing when you can get close to government and get funded heavily to address a problem when in fact what you’re doing is making it worse. You can go back every year and say you need more. I mean, it is a really good gig, and those gigs are hard to get, and the people that get them do not let go of them. They hold on for dear life. And they end up with politicians in their pockets.

And we just talked about Google. When we were in D.C. trying to navigate things, I knew what it cost us to get the occupational licensure bill revoked in D.C. And so when I found out that 14 states, on 23 occasions over 11 years, had been lobbied with legislation to criminalize or heavily restrict what it is that you do, it didn’t take the Podesta folks too long to come up with: that’s a $100 to $150 million effort. But the people lobbying for these things, the ACSM and the NSCA, they don’t have 10 million, much less 150 million. Where does that money come from? So what do you do? You look to sponsors. And who are their sponsors? Well, it’s Coke and Pepsi, gold and platinum sponsors. Would they help them? I don’t know who else would. You know, someone that really thinks it’d be cool if we could silence trainers on the subject. [00:53:52] And that’s what that was about. So you dig in and you look and you kind of learn the lay of the land, and usually you can find out who’s benefiting from this, how it works to their advantage.

So how do we break away from the influence then and make it more open?

So. Because right now a lot of these studies are independent. I like academia and brands like investors. So I think that direction that allows them to do that in an open source environment.

You know what this is? You’re asking about a system fix again. And I always come up short on system fixes. Like, I don’t think we corrected fitness, but I think that we corrected fitness in the minds of each client that came through the door. And I don’t know that revolutions aren’t fomented one individual at a time, and that no one ever really had a plan for everybody, but a plan for anybody. I don’t know. And I wish I did. And I recognize the importance of your question, and I feel I fall short not being able to answer it. But I’ll go back to fitness again. I never thought for a minute that there weren’t going to be fat people because of us.

But I’ll tell you what, man, you give me a fat girl and I’ll make her a thin girl. I know I can do that. And I could probably do it with enough enthusiasm and entertainment value that she would become a wonderful spokesman for the cause. And maybe that’s what we need.

But what I’m going to do is just start by trying to talk to anybody who will listen, and increasingly refine the message. I think that we can put this out. Did anyone see No Safe Spaces, with Prager and Carolla?

I highly recommend it. It’s really neat, because you’re listening for an hour to the importance of free speech being explained to you. And here’s the part that seems to be a real challenge for some younger people today: that necessarily entails you hearing some things [00:55:46] you don’t want to hear. The importance of free speech necessitates that everything you want to hear may be heard, and some things you don’t want to hear may also be heard. And that’s okay. We actually have to teach the new generation that. And I think we’re here. I think we’re exactly there. We’ve got to insulate some of us from the tyrannies of this shitty science. Being peer reviewed means nothing. Nothing. We have to tell clinicians this. I don’t care what the data says.

What are you seeing in your practice? “What I’m seeing in my practice is it doesn’t work.” Well, then that means it doesn’t work.

Think how weird it was for me. I had the Air Force send me 30 young men for pararescue training. They were about to go to Lackland. They were in indoc, just entering the pipeline. They go off to Lackland and all 30 get through, in a program with a 70% washout rate. And the lead kid, Josh Webster, who many of you may know, Josh Webster is the honor grad in a program that washes out 70%. We had 30 kids; 100% go through. I got called by an Air Force doc, and he was really excited to work with me, from Colorado Springs, from the academy. And what he’d like to do is validate my methods. Fuck. What are we trying to do here? I think we’re making war fighters, and a whole bunch of them just got through your program like you haven’t seen before. What’s going to happen in the test tube? I was at a complete loss. I don’t think that step’s necessary here. Maybe for your own head. Maybe there’s something we do. God knows we sent Games athletes up to Pepperdine to get poked and prodded and looked at and examined. I never heard that anything came of that. Did you, Dave? Question, please.

What does a widespread predictive, inductive model look like in medicine? Like, I get hypothesis testing: it passes or it fails or whatever.

[00:58:01] But, like, what’s the ideal state?

I can’t even begin to think about that without wondering what the political chances are of that happening. And man, I can’t see it right now. I’ve got the best minds in medicine kind of hanging low. I mean, like, this crew at Stanford is shocked at what they’ve been through. Dr. Bhattacharya was explaining to me that he thought that what they’d been doing was science all along. But what he’s discovered is that he was conducting research that came to conclusions that no one had published. And so: was I right? Was I wrong? Or was I approved? Because I’m doing the same thing I was, and now I’m a bad guy. And that’s an amazing thing. What a hard thing to come to terms with. I wouldn’t even venture a guess as to how to unfuck medicine. And when I talk with physicians, it’s more on the level of them coming to terms with the lack of reliability in the structures that they’ve depended on for information.

And I think we’re still in that phase, you know? And so I would cast a skeptical ear and eye to everything, and look for confirmation in a clinical or empirical setting, and make my decisions from there. How are you, kid? Good. I wish I had more answers. The questions where I’m always at a loss, too, are: what do we do next? I’ve got no idea. I really don’t. I’m not overly hopeful, but I don’t think there’s anyone here that couldn’t orient themselves within this whole thing and come to some conclusions that look very much like what I’ve come to.

I’m sure. For the relationship between broken money...

Money is a leading indicator, a leading factor. For sure. For sure. And I also thought it was interesting: Russ Green and I went into Senator Blumenthal’s office and met with him and staff. And we said that with the PubMed abstracts, you have to cross the paywall before you get to see that the thing was paid for by Coca-Cola.

[01:00:45] And he goes, “No.” We go, “Yeah.” We pulled it up. You know, look, here’s what you see: “Studies show that sugar is good for you.” And you’d have to pay the money, and no one does, because they just go, “Well, here’s a study. It says it’s good for you.” And you pay the money and you get on the other side and you see, oh, you know, sponsored by the American Beverage Association. And just in that one sitting there, he made a call to NIH and got that disclosure put onto the abstract side. And within two days, we were seeing them. Look, it says over here that this was paid for by soda. You’d hope that makes a difference. I don’t have a whole bag of those kinds of things. That one came as a surprise. I wish we had gone into Blumenthal’s office three years earlier and asked for this. But it often seems that way in the military, too: there’s someone that can pick up a phone, call, and make something happen. I leave it to you good people to be that person, you know?

But I would like to get us past the point of thinking that nine out of ten dentists are right and one out of ten is wrong, and that there’s anyone that can get in front of you and say that if you don’t believe in me, you’re not following science. And you shouldn’t be following the warnings or the threats of any model that hasn’t predicted something novel and important prior, right? These might be harder lessons to learn. Maybe they’re more dramatic lessons, but I think philosophically and logically, they’re pretty dramatic.

I also want to leave you this. What some of this has required is that we churn out a definition of modern science. And key to doing that was the understanding that science is an extension of language, logic, and mathematics. And so if the science requires that two plus two be five, or that a small p-value is evidence of a causal relationship rather than just a correlate to one, potentially, [01:02:43] or if terms get changed in there, meaning we have plasticity to terminology, we’ve got a language fault here.

Like, COVID in New York meant that you’re a first responder who didn’t come to work, and it meant somewhere else that they spun this PCR 60 cycles and found the promised land.

So when we have fuzzy definitions, shitty math, or logical problems... how about this?

I know of several studies where the problem in the causal relationship is that the causal factor seems to be lagging the effect. And so you want to say, if it’s going to be a cause, it needs to happen first, right? And you have every right, when you find problems in language, logic, or math, to say that the science is no good and I’m not going to pay attention to it. And that’s pretty liberating. It is amazing how often you’ll find that that’s exactly the case.

This is all hard to do.

Is there an example of good science?

Yeah. Lots of them. Lots of them. I’ll tell you one: the people that used Bayesian methods and supercomputers to finally resolve that the pig gets the swine flu, it evolves through the pig, goes to the chickens or ducks, and takes a form that is deadly to man and gets passed to us. We were never going to figure that out without computers. It was an amazing thing, what had happened.

But in the areas of advanced genomics, what’s being done with computers and induction right now, it’s brilliant. These are the same people that are getting computers to read MRIs and to take out-of-focus photographs and put them in focus. How is that for a party trick?

Now, there’s a lot of good science going on, but it does tend to sit around industry and technology.

And I wish this weren’t true, but Google isn’t confused by much of this. Even where they’re probably complicit in the bad science, they’re super smart on the side of inductive inference, inductive probability, and what’s working. I know that because they’ve hired all the guys we like, like Jake VanderPlas, whose videos I would look at. There’s a guy who has a keen understanding.

[01:05:14] Without being political, I would look him up, Jake VanderPlas, and look at him on Bayesian versus frequentist methods.

Frequentism and Bayesianism: A Python-driven Primer

Frequentism and Bayesianism: A Practical Introduction | Pythonic Perambulations

You can get into a lot of this by just looking up the Bayesian frequentist debate.

You can also get to it by looking up “science wars” and “meaning of probability.” These all lead you to the same schism that I’m addressing here.

Correct me if I’m crazy. In addition to worrying about bad science, I’m kind of worried about the bad use of the term “science.”

And you alluded to this in some ways: we’ve turned it into some sort of black box where it’s synonymous with magic. That’s right. Yep. And then when people say “follow the science,” what they’re really saying is “shut up.”

They are, rather than “let’s follow science.” Yeah, we need to get away from the high priestess, high priest notion, where you come down and tithe at the altar and everyone else shuts up. You’re exactly right. And I think I’m on that very subject: who do you have to be to say the emperor is naked? And that involves, you know, you’ve got a cause that comes after the effect; your math requires that two plus two be five. You know, I mean, just anything that doesn’t fly. In the face of logic, language, or mathematics... science has to be an extension of that.

It’s a problem. We’ve seen a fascinating phenomenon. I’ll give you one of them: this theory of an alternative universe. Well, I’ll tell you where it comes from. Every time we look at the electron, by whatever method, every time you peek, it’s random as to which way it may be spinning. And so it’s going clockwise, no, counterclockwise, counterclockwise, clockwise, and roughly 50/50. And it’s led some people in that quandary to ask, what if it’s doing both? Well, let me tell you something. It’s not doing both.

The problem is how we’re looking at it. And we need to find newer, better [01:07:21] methods to examine a thing, because it’s not doing both. But what this led to is that there are two universes. There’s one where it is spinning that way and one where it isn’t. And we live in both of them and you’ll never know. And oh, it’s just nuts. It’s the wrong way to go. And particle physics has been doing this for a long time.

Now, I highly recommend a book by Sabine Hossenfelder. The book is Lost in Math, and this is a particle physicist working with CERN and at Frankfurt in Germany, at their big particle research center. She’s a big name in particle physics.


And she says that particle physics is lost and that it has lost its epistemic grounding, that it is attempting to change the standards of scientific method to make it non-empirical, that criteria of beauty and symmetry have led us astray, that there hasn’t been a significant discovery since 1973. We’ve got a whole generation of particle physicists who don’t know why they’re in the field, and she says that the CERN hadron collider has been a colossal waste of money and disappointed everyone involved. Fascinating read. That was sent to me by way of Amazon’s AI. They know I’m the guy looking at broken science and would like this book, and indeed I did.

And she mentions Popper and falsification in there, which was fascinating, too. The physicists have long known that that wasn’t getting them where they needed to go.

This is a fascinating problem. I do think it’s one of the greatest problems in the world today. And, please.

Is there anything that you discovered a long time ago, when you were getting started in the process, that you rethought based on the science, and now do it, or try to stay on?

I don’t know. This probably isn’t a good answer to your question, but I’m going to share it because it’s within that vein, and it came up just the other day.

[01:09:37] In working with our special needs people that we had in the basement of Santa Cruz at HQ, we had the morbidly obese and the elderly that we were putting time in with. I came to see that what they were in desperate need of is three periods of activity daily, and that to open up the can of whoop ass once a day on someone like that, at a level that was consistent with their capacity so that they would psychologically want to and physiologically be able to, was diminished enough of a stimulus that it wasn’t adequate to their needs. And so what I really wanted with these people is for them to do something active in the morning, a session in the afternoon, and something in the evening. And I realized that this is kind of a unification theory, because that’s kind of where the Games athletes are too, right? You know, three-a-days. And that’s very interesting to me. And I came to see that the one workout of the day might be an artifact of the business we’re in and what we do, and the people who come to see me. And I didn’t want to go public with that, really. I mean, it came up because it came up, and I think it’s kind of along those lines. But if I had a box again, and I’ve got a single client right now, I have a guy that was struggling with a long-running chronic illness. And he’s a specimen of a man. He’s a great human being. He’s a great dad, and he’s deathly ill and shouldn’t be. And I found out from his wife that he’s drinking three six-packs of Coca-Cola a day. And so I go, Oh, I got this, this is mine. And I roll up my sleeves and get to work. And I actually had to pretend to want to train him. And I do. I did. I am. But it’s because I want to be there enough time to tell him, you got to get off the fucking Coke, man, so you don’t waste my time. But I’m also thinking of other things I’d like him to do for a second and a third effort. [01:11:37] And that’s interesting to me.
No, there’s not a lot that, you know, this had been in a long dormancy. And then I had the wonderful, wonderful experience of developing it through the workout of the day with you. You know, and believe me, there were some WODs that went up, there was one in particular that I couldn’t undo. And you learn these things. We got out on a 400 meter track and it was ten lunges, 15 by 95 push press. And we were out there like 3 hours. I mean, no one was going to finish, and I had Josh Everett and Greg Amundson and I couldn’t make them stop, because they weren’t going to stop. And I thought they were going to die, and it was just wrong, wrong, wrong. And it had posted and sounded good, you know, but you really learn the value of empirically testing things. A lot of people have been the guinea pig. James.

So would that be your advice for affiliates? Should they be able to learn those lessons, and continue to learn those lessons, without falling victim to consensus science or the monsters chasing them?

Yeah. I don’t think you have any choice. You know, I told the physicians at the middle one that the onus is yours to make what you’re taught, what you hear, what’s practiced, it’s got to comport with the clinical realities. That first and foremost, to be scientific, you have to be empirical, and to unsee things so the theory fits is to make a mistake, and probably in the end a dangerous one. So, yeah, I think that’s exactly what I’m saying.

I can quote an answer you gave earlier. You said you don’t know how to unfold medicine, which makes sense. These conversations, the conversations we’re having, what you’re taking on and continuing to take on. Is there a goal in mind, or is it like, let’s just go for it because we can?

[01:13:47] Go for it? I truly meant it when I said I can free any man from the tyranny of bad science.
I said shitty science and shitty scientists, and I really believe that can be done. And part of what happens is that, and we’ve seen this in the people that have worked with us on CrossFit Health, with the let’s start with the truth idea, by the way.

I’ve taken that, let’s start with the truth. Let’s get back to that. I think, in fact, you can get someone where they are skeptical of what they’re hearing. And it might cause you to sit there with your doctor and say, based on what?

And then you might get this study and go, you realize this is an epidemiological study. The data isn’t really data. It wasn’t a measurement derived from an observation, but it’s a very subjective thing, and it’s someone’s memory on a study. And this isn’t how science is done. When you’re doing surveys, you’re not doing science.

Doc, what else you got? I mean, it’s okay to go there, and she might go, Wow, I didn’t ever do that. Yeah. Yeah. You know, I think that’s fair to do. I think I give you that authority.

I want to read to you just something we’d put together on modern medicine. Call it kind of a narrative outline.

This is not strong expository prose. The flow isn’t there.

But what was intended here is that these be the salient facts, each given a reference number, and then expanded in significant detail to create the curriculum.

But I think you’ll see where this is going.

Modern science is source and repository of man’s objective knowledge. That’d be one, right?

Modern Science

Modern science is source and repository of man’s objective knowledge. Scientific knowledge is siloed in models. A model maps a fact to a future unrealized fact as a prediction. A fact is a measurement. A measurement is an observation tied to a scale with an expressed error. An observation is a registration of the real world on our senses or sensing equipment.
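As a rough illustration of the chain just read out (observation, measurement, fact, model), here is a minimal Python sketch. The names `Measurement`, `agrees`, and `fall_distance` are hypothetical, the falling-body model is only a toy example, and the overlap rule used to compare a prediction with a later measurement is an assumption made for illustration. None of it comes from the talk.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Measurement:
    """An observation tied to a scale (unit) with an expressed error."""
    value: float
    unit: str
    error: float  # absolute uncertainty, in the same unit as value


# A model maps a current fact to a predicted, not-yet-realized fact.
Model = Callable[[Measurement], Measurement]


def agrees(predicted: Measurement, observed: Measurement) -> bool:
    """Assumed rule: the two agree when their error ranges overlap."""
    if predicted.unit != observed.unit:
        raise ValueError("measurements are on different scales")
    return abs(predicted.value - observed.value) <= predicted.error + observed.error


def fall_distance(drop_time: Measurement) -> Measurement:
    """Toy model: distance fallen from rest, d = g * t**2 / 2."""
    g = 9.81  # m/s^2, treated as exact for this sketch
    t = drop_time.value
    # First-order error propagation: dd = g * t * dt
    return Measurement(value=0.5 * g * t * t, unit="m", error=g * t * drop_time.error)


model: Model = fall_distance
prediction = model(Measurement(2.0, "s", 0.05))       # the current fact
later_fact = Measurement(19.9, "m", 0.1)               # the fact once realized
print(agrees(prediction, later_fact))
```

The point of the sketch is only that a prediction is checkable: it carries a value, a scale, and an error, so a later measurement can either agree with it or not.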

A model’s validation derives entirely from its predictive power. There are four grades of model ranked by predictive strength and they are conjecture, hypothesis, theory, and law.

A conjecture is an incomplete model or analogy to another domain. A hypothesis is a model based on all data in its specified domain, with no counterexamples, and incorporating a novel prediction yet to be validated by facts. A theory is a hypothesis with at least one nontrivial datum. A law is a theory that has received validation in all possible ramifications, and to known levels of accuracy.
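One way to read the four grades is as a ladder that a model climbs as its predictions are validated. The Python sketch below encodes that reading. The function `grade_model` and its parameters are hypothetical, and treating any model that falls short of a hypothesis as a conjecture is an assumption of this sketch, not something stated in the outline.

```python
from enum import IntEnum


class Grade(IntEnum):
    """The four grades of model, ordered by predictive strength."""
    CONJECTURE = 1
    HYPOTHESIS = 2
    THEORY = 3
    LAW = 4


def grade_model(fits_all_domain_data: bool,
                counterexamples: int,
                has_novel_prediction: bool,
                validated_predictions: int,
                validated_in_all_ramifications: bool) -> Grade:
    """Classify a model by how far its predictions have been validated."""
    # Assumption: anything short of a hypothesis is filed as a conjecture.
    if not fits_all_domain_data or counterexamples > 0 or not has_novel_prediction:
        return Grade.CONJECTURE
    # A hypothesis has a novel prediction that facts have not yet validated.
    if validated_predictions == 0:
        return Grade.HYPOTHESIS
    # A theory has at least one validated, nontrivial datum.
    if not validated_in_all_ramifications:
        return Grade.THEORY
    # A law is validated in all possible ramifications.
    return Grade.LAW


print(grade_model(True, 0, True, 1, False).name)
```

Because `Grade` is an `IntEnum`, grades compare directly, so `Grade.LAW > Grade.THEORY` holds, which mirrors the ranking by predictive strength.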

Predictive power is evidence of and reason for science’s objectivity, the sole source of science’s reliability and the demarcation between science and pseudoscience. Predictive power as determinant of a scientific model’s validity provides the basis for any rational trust of science.

Models are predictions mapping a fact to an unrealized fact where the current fact constitutes the premises and the unrealized fact the conclusion of an inductive argument. Induction derives conclusions from premises with probability and not certainty. All scientific knowledge is therefore the fruit of induction validated by predictive power which is a measure of probability.

It is important to note that validation comes independent of method. Models derived from inspiration or perspiration both rank by predictive power alone. It also warrants mentioning that modern science is an extension of logic and therefore must be consistent with the rules of logic, language, and mathematics.

Go on for hours. But scientific knowledge is siloed in models. A model maps a fact to a future unrealized fact as a prediction. A fact is a measurement. A measurement is an observation tied to a scale with an expressed error.

[01:15:49] An observation is a registration of the real world on our senses or sensing equipment. The model’s validation derives entirely from its predictive power. There are four grades of model ranked by predictive strength, and they’re conjecture, hypothesis, theory, and law. A conjecture is an incomplete model or analogy to another domain. A hypothesis is a model based on all data in its specified domain, with no counterexamples, and incorporating a novel prediction yet to be validated by facts. A theory is a hypothesis with at least one nontrivial datum. A law is a theory that has received validation in all possible ramifications and to known levels of accuracy. That last one, me and some of my science and math buddies are ironing out. Predictive power is evidence of and reason for science’s objectivity, the sole source of science’s reliability, and the demarcation between science and pseudoscience. Predictive power as determinant of a scientific model’s validity provides the basis for any rational trust of science. Models are predictions mapping a fact to an unrealized fact, where the current fact constitutes the premises and the unrealized fact the conclusion of an inductive argument. Induction derives conclusions from premises with probability and not certainty. All scientific knowledge is therefore the fruit of induction validated by predictive power, which is a measure of probability. It is important to note that validation comes independent of method. Models derived from inspiration or perspiration both rank by predictive power alone. It also warrants mentioning that modern science is an extension of logic and therefore must be consistent with the rules of logic, language, and mathematics.

I think we covered a lot of that, but that can be driven home over and over and over again. And I mentioned no safe spaces…

…because what I really liked about that is that it explains a very elegant and important philosophical concept, important to our very culture, history, Constitution, and way of life. And yet does so in a way that I would have my mother or my children look at it. And it’s not overly simplified to the point of being insulting, and yet carries the essence of something simple enough that everyone should know it. [01:18:13] And that’s what we’re going to strive for here.

And so I think that if we can teach our children what science is and isn’t, just maybe we could teach their teachers, and maybe journalists, and maybe even some scientists. That would be the hope.

I tell people I’m oddly driven, not so much by goals as I am by process. And so I’m just going to keep doing this fucking thing and nobody can stop me and let’s see what happens.

We will find success with this. I just don’t know how much. I also don’t care so much. There was never a point where I’m like, this is all worth it if I get 1,000 CrossFitters, or it’s not worth it at a thousand and is with 200,000. I’ve never really.

I’ve usually generated better numbers out of that than I might have predicted if I were to try and look into a crystal ball and see how many people can you get to pull their head out of their ass and wipe their eyes clear? I don’t know what that number is. And truthfully, I didn’t do it. You did it. You know.

But I do know this. If you make sense and you gear your thoughts to the smartest person in the room, smartest people in the room, when they catch on, they’re usually remarkably effective, even maybe more effective than I am at distribution of the message. So that’s the underlying thought.

If I had a hope attachment, it would look like that. You know, my client base came that way. I wasn’t putting flyers under windshield wipers at the mall. Right. I wasn’t doing advertising.

I did a good enough job with you that you couldn’t stop talking about it. And so I’d finally meet your friends. They’d go, He’s driving me nuts. You’re kidding me. People go, How do I talk my dad into this? I just nag him until he insists you shut the fuck up. The very thing I would do.

[01:20:10] You see, Dave and I’d get in an elevator. We’ve had this happen. Someone goes, What’s CrossFit? We all look at each other like, shit. I quit answering that. I think at an affiliate gathering I explained this, in Miami. I said when someone asks me what CrossFit is, I ask him, Well, let’s see, what’s today? Today’s Friday? What are you doing Monday morning at 10:00? I mean, I don’t want to tell you. I want to show you.

And what happens if you’ll allow me to show you? What’ll happen is within a month or two, you’re going to come back telling me, and you’ll be a better teller of it than I was or could have been. There’s some things, you’re just not going to describe CrossFit or chocolate or sex or a good movie. You just got to have the experience, and then you become the expert. It may be just like that with this too, where the light comes on.

I know we’ve seen it within our circle of friends … you should see how sick everyone is in my house hearing about broken science.

One last question. Sure.

A statement. I think I’d like to challenge the science of identifying corruption, but also maybe pinning on this whole thing the idea of identifying. Allison that speaks by. And I think just the overall picture of how that can sort of struck me.

It’s a great idea and you’re perfectly correct.

And in fact, it’s in the plans, and what we’re doing is working on a brokenscience.org sandbox website.

And amongst the things that would be on it would be the greatest hits of scientific misconduct.

I mean, there are some doozies. Just incredible gall. Like the guy that painted on the mice to match the patterns of the mice that died that he’d saved with his treatments. And, you know, I mean, can you imagine, with your PhD, M.D., and a brush, out at night painting on white mice, trying to hold them still, to get this study published? I can’t even conceive of that. That guy’s the only person going to jail for scientific misconduct.

Inquiry at Cancer Center Finds Fraud in Research [painted mice]

[01:22:20] And there’s fallacies. It’s just great. I learned to this. I would suggest that, if you’ve really got a penchant for this kind of stuff, and here again, I’m just kind of outlining my path. My father recommended David Stove to me, and it took me five hard years to get through his work.