
- Kevin Knuth

Summary

Kevin Knuth's presentation on the history of probability theory begins with Laplace's famous quote, that “probability theory is basically just common sense reduced to calculation.” Traditional logic only concerns what is absolutely true or false. The scientist James Clerk Maxwell advocated that probability concerns the magnitude of truth existing in between the extremes of true and false.

Probability is commonly written as P(x|y), which reads as the probability that x is true given that y is true.
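As a small illustration of the notation, the sketch below computes P(x|y) from a joint distribution over two true/false propositions. The table values are hypothetical and chosen only to keep the arithmetic easy; they do not come from the presentation.

```python
from fractions import Fraction

# Hypothetical joint distribution over two propositions x and y.
# Keys are (x_is_true, y_is_true); the numbers are illustrative assumptions.
joint = {
    (True, True): Fraction(3, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(2, 10),
    (False, False): Fraction(4, 10),
}


def conditional(joint, x_value, y_value):
    """Return P(x = x_value | y = y_value) = P(x, y) / P(y)."""
    p_y = sum(p for (_, y), p in joint.items() if y == y_value)
    if p_y == 0:
        raise ValueError("cannot condition on a zero-probability statement")
    return joint[(x_value, y_value)] / p_y


p_x_given_y = conditional(joint, True, True)
print(p_x_given_y)  # 3/5
```

Here P(y) is 3/10 + 2/10 = 1/2, so P(x|y) = (3/10) / (1/2) = 3/5.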

Knuth introduces John Maynard Keynes's view that probability should be interpreted as a degree of truth or the degree of belief that a rational person would have in a hypothesis. It is in a sense subjective, but not due to the influence of “man's caprice.” A third view of probability explains it as a “degree of implication.” Knuth prefers this interpretation.

Three foundational systems of probability theory are explained:

Bruno de Finetti's method treats probability as a degree of belief, quantified by how much a rational person would bet on a predicted outcome. The emphasis is on consistency. Knuth laments that this is the most commonly presented foundation.
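The consistency requirement in the betting view can be sketched with a toy "Dutch book" check. This is an illustration under simple assumptions, not a reconstruction of de Finetti's own formalism: an agent states a price for a ticket that pays 1 if A happens and a price for a ticket that pays 1 if A does not happen, then buys both. The function name `guaranteed_loss` is hypothetical.

```python
from fractions import Fraction


def guaranteed_loss(price_a, price_not_a):
    """Net loss for an agent who buys one ticket on A and one on not-A.

    Exactly one of the two tickets pays out 1, so the result is the same
    whichever way A turns out.
    """
    cost = price_a + price_not_a
    payout = Fraction(1)
    return cost - payout


# Beliefs of 3/5 in A and 3/5 in not-A are inconsistent: a sure loss of 1/5.
incoherent = guaranteed_loss(Fraction(3, 5), Fraction(3, 5))
# Beliefs that sum to 1 leave no guaranteed loss.
coherent = guaranteed_loss(Fraction(3, 5), Fraction(2, 5))
print(incoherent, coherent)  # 1/5 0
```

If the two prices summed to less than 1, the same argument would apply with the roles reversed, with the agent selling the tickets instead of buying them.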

Andrey Kolmogorov created foundational axioms of probability theory that are especially popular among modern Bayesians. With these axioms, probabilities can be calculated algebraically.

Richard Cox generalized Boolean logic to degrees of rational belief. His axioms enabled calculation of probabilities of multiple events and included the relation between propositions and their contradictories.

De Finetti and Kolmogorov created systems that were thorough and self-contained. Cox's creation was right enough to be useful, interesting enough to be compelling, and left enough room for future improvement. Much of that later improvement was carried out by E.T. Jaynes.

Knuth then presents a modern perspective on probability. It primarily concerns determining what types of knowledge can be described by equations: laws of the universe and constraints imposed by underlying order are prime examples.

He introduces lattices as a tool to understand probability. A lattice is a partially ordered set in which every pair of elements has a least upper bound and a greatest lower bound (conceptually a ceiling and a floor). Quantification and logical operations can be carried out on a lattice while preserving the order of its structure.
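A minimal sketch of one familiar lattice, assuming the elements are subsets of a small set of grocery items ordered by inclusion. The item names and helper functions (`powerset`, `leq`, `join`, `meet`) are hypothetical and are not taken from the presentation.

```python
from itertools import combinations

# Hypothetical grocery items; the basket in the presentation may differ.
ITEMS = ("apple", "banana", "cherry")


def powerset(items):
    """All subsets of items, as frozensets: the elements of the lattice."""
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]


def leq(a, b):
    """Partial order: a sits below b when a is a subset of b."""
    return a <= b


def join(a, b):
    """Least upper bound: the smallest set containing both a and b."""
    return a | b


def meet(a, b):
    """Greatest lower bound: the largest set contained in both a and b."""
    return a & b


lattice = powerset(ITEMS)
a = frozenset({"apple"})
b = frozenset({"banana"})

# The join is an upper bound of a and b and lies below every other upper bound.
upper_bounds = [u for u in lattice if leq(a, u) and leq(b, u)]
is_least = all(leq(join(a, b), u) for u in upper_bounds)
print(sorted(join(a, b)), is_least)  # ['apple', 'banana'] True
```

Set union plays the role of the ceiling and set intersection the role of the floor; two single-item sets that share nothing meet at the empty set.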

Knuth demonstrates how the associativity of logical operations (cases where the way the operations are grouped doesn't change the result) can be used to derive the foundations of measure theory. He shows how additivity follows for logical operations and results in the ubiquitous sum rule.
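The sketch below does not reproduce the derivation; it only checks, on a small example, the two properties the paragraph names. It assumes a lattice of subsets of three atomic statements and an additive valuation built from hypothetical weights, then verifies that the join is associative and that the sum rule v(x ∨ y) = v(x) + v(y) − v(x ∧ y) holds for every pair of elements.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical weights on three atomic statements; illustrative only.
WEIGHTS = {"a": Fraction(1, 2), "b": Fraction(1, 3), "c": Fraction(1, 6)}


def valuation(element):
    """Additive valuation: the total weight of the atoms in the element."""
    return sum((WEIGHTS[atom] for atom in element), Fraction(0))


def subsets(atoms):
    """All subsets of atoms, as frozensets."""
    return [frozenset(c)
            for r in range(len(atoms) + 1)
            for c in combinations(atoms, r)]


elements = subsets(tuple(WEIGHTS))

# Associativity of the join (set union): grouping does not change the result.
associative = all((x | y) | z == x | (y | z)
                  for x in elements for y in elements for z in elements)

# Sum rule: v(x or y) = v(x) + v(y) - v(x and y).
sum_rule = all(
    valuation(x | y) == valuation(x) + valuation(y) - valuation(x & y)
    for x in elements for y in elements
)
print(associative, sum_rule)  # True True
```

The subtraction of v(x ∧ y) corrects for atoms counted twice when x and y overlap; for disjoint elements the rule reduces to plain addition.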

Inference is also demonstrated, starting with an example of what we can know about the contents of a grocery basket. We learn that higher-level knowledge can be inferred from the structure of a lattice. He even derives Bayes' theorem from mathematical operations performed on a lattice. Logic and mathematics can be used to calculate probabilities within a lattice in applications as varied as grocery baskets and quantum physics.
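For readers who want to see Bayes' theorem at work, here is a small numerical sketch. It uses the standard product-rule form, P(h|d) P(d) = P(d|h) P(h), and not the lattice derivation from the talk. The hypothesis, the data, and all numbers are hypothetical.

```python
from fractions import Fraction

# Hypothetical setup: h = "the basket contains apples",
# d = "the receipt lists fruit". All numbers are illustrative assumptions.
prior_h = Fraction(1, 4)                # P(h)
likelihood_given_h = Fraction(4, 5)     # P(d | h)
likelihood_given_not_h = Fraction(1, 5)  # P(d | not h)

# Sum rule over the two exclusive cases h and not-h gives P(d).
evidence = (likelihood_given_h * prior_h
            + likelihood_given_not_h * (1 - prior_h))

# Bayes' theorem: P(h | d) = P(d | h) * P(h) / P(d).
posterior = likelihood_given_h * prior_h / evidence

# Both factorizations of the joint P(h, d) agree (symmetry of the product rule).
joint_via_posterior = posterior * evidence
joint_via_likelihood = likelihood_given_h * prior_h

print(evidence, posterior)  # 7/20 4/7
```

Seeing the data raises the probability of the hypothesis from 1/4 to 4/7, because the data are four times as likely when the hypothesis is true as when it is false.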

Knuth concludes that the foundations of probability act as a broad base from which novel theories can be made in seemingly disparate domains.

Probability is about figuring out how likely something is to happen. It's not just true or false. There's a whole range in between. Experts have debated the meaning of probability and some think it means how much an idea is true or how strongly a person should believe an idea. The author of this presentation, Kevin Knuth, believes probability should be how strongly an idea is implied.

A probability is written like this: P(x|y). It means "How likely is x if y is true?"

Three of the greatest experts in probability came up with different ideas for how it should work:

Bruno de Finetti explained probability by how much you'd bet on something happening. Consistency is key.

Andrey Kolmogorov created math rules to calculate probabilities, like in algebra.

Richard Cox used logic and math to decide how likely an idea is to be true.

Their work was great and people improved it even more later on.

A modern view of probability looks at what types of ideas can be described by math equations. The rules of nature and order are major examples.

One tool for doing probability math is a lattice: an ordered set whose elements have upper and lower limits. Its structure allows you to come up with new ideas about the items in a lattice. You can also do math operations on lattices under a few basic rules like:

The way some operations are grouped doesn't matter

Adding works as expected

You can create higher level knowledge (called an inference)

These rules let you calculate probabilities. Even Bayes' theorem can be derived this way. The principles work broadly, from grocery lists to quantum physics.

In summary, the foundations of probability theory provide a basis for new theories in many areas. Rules of math and logic can all help to figure out probabilities.

--------- Original ---------

“In deriving the laws of probability from more fundamental ideas, one has to engage with what ‘probability’ means. This is a notoriously contentious issue; fortunately, if you disagree with the definition that is proposed, there will be a get-out that allows other definitions to be preserved.”

- Anthony J.M. Garrett, “Whence the Laws of Probability”, MaxEnt 1997

Probability theory is all about calculating how likely something is to happen. In the past, logic only dealt with what is absolutely true or false. But scientists realized there's a whole range of possibilities in between those extremes.

Probability is often written as P(x|y). This means "the probability that x is true, assuming y is true." There are a few ways to think about what probability really means:

The degree that an idea is true.

How strongly a rational person should believe an idea.

How strongly an idea is implied to be true or false.

The writer of this presentation, Kevin Knuth, prefers the third interpretation. Next, he introduces three key founders who developed systems of probability theory:

Bruno de Finetti focused on how much a person would bet on a certain outcome being true. Consistency was key.

Andrey Kolmogorov created mathematical rules to calculate probabilities.

Richard Cox generalized logic to handle degrees of belief.

Their work was critical, but still left room for improvement. E.T. Jaynes and others built on their ideas.

A modern view looks at what types of knowledge can be described by probability equations. The rules of nature and order in the world are prime examples.

One tool used to understand probabilities is called a lattice: an ordered set whose elements have upper and lower limits. You can do math and logical operations on lattices in a way that preserves their order. This leads to basic rules of probability:

Associativity: the way some logical operations are grouped doesn't matter.

Additivity: sums work as expected.

Inference: higher-level knowledge can be derived from the structure of a lattice.

These rules enable calculating probabilities. Even Bayes' theorem can be derived from these rules. The principles apply broadly, from grocery lists to quantum physics.

In summary, the foundations of probability theory act as a base for new theories in many fields. Consistency, rules of math and logic, and inference all contribute to calculating the likelihood of ideas.

--------- Original ---------

“In deriving the laws of probability from more fundamental ideas, one has to engage with what ‘probability’ means. - Anthony J.M. Garrett, “Whence the Laws of Probability”, MaxEnt 1997 This is a notoriously contentious issue; fortunately, if you disagree with the definition that is proposed, there will be a get-out that allows other definitions to be preserved.”Let's start with the truth!

Support the Broken Science Initiative.

Subscribe today →

recent posts

Expanding Horizons: Physical and Mental Rehabilitation for Juveniles in Ohio

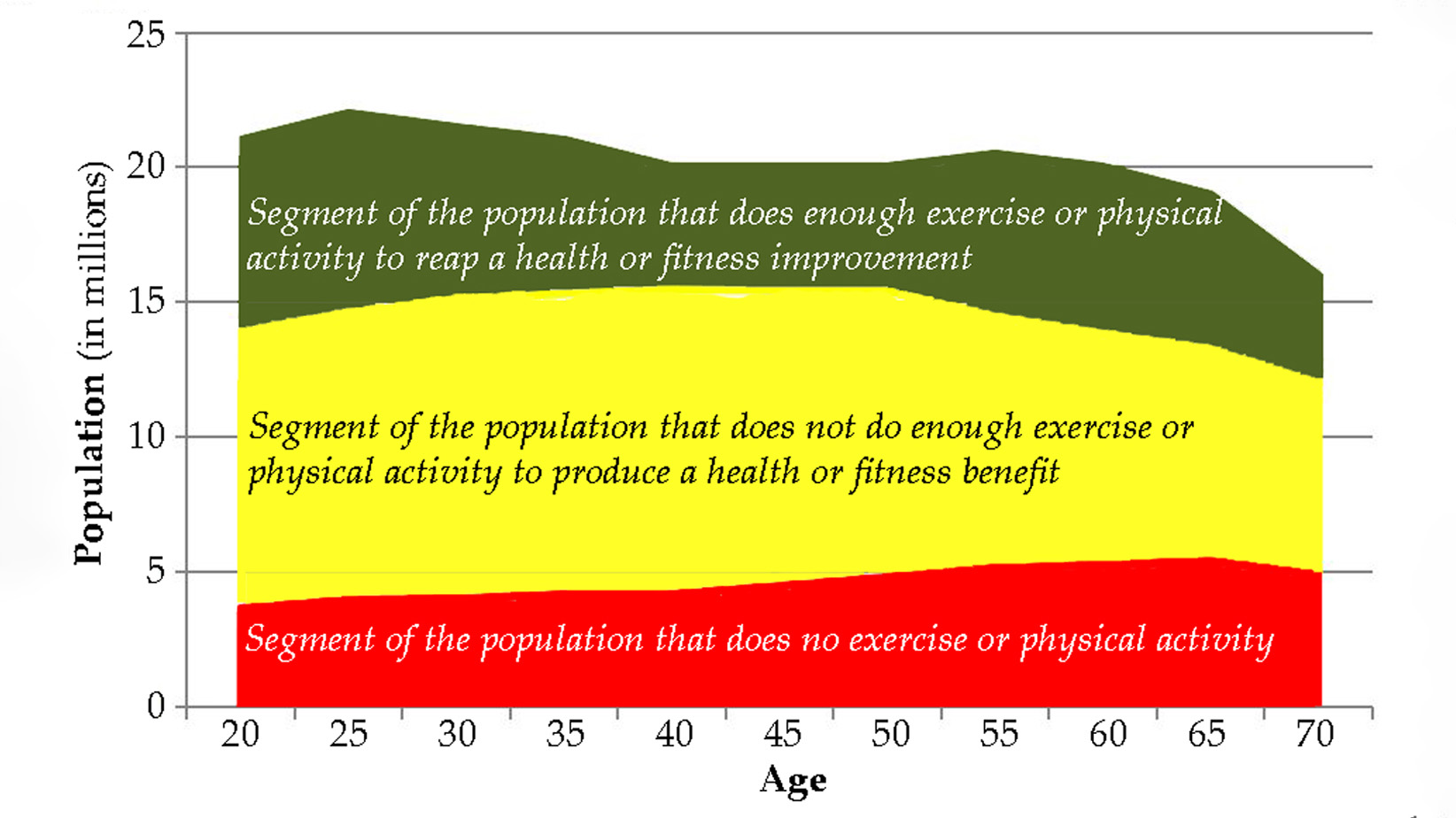

Maintaining quality of life and preventing pain as we age.